Prerequisites

This lesson assumes that you want to connect to a Heidenhain controller using the DNC interface protocol with the Cybus Connectware. To understand the basic concepts of Connectware, please check out the Technical Overview lesson. To follow along with the example, you will also need a running instance of Connectware. If you don’t have that, learn How to install the Connectware. Although not required, it will also be useful to be familiar with the User Management Basics and the lesson How To Connect an MQTT Client.

Introduction

This article teaches you how to integrate Heidenhain controllers. In more detail, the following topics are covered:

- Setup of the Cybus Heidenhain Agent

- Selecting the methods

- Creating the commissioning file

- Installing the service

- Verifying data in the Connectware Explorer

The commissioning files used in this lesson are made available in the Example Files Repository on GitHub.

About Heidenhain DNC

Heidenhain is a manufacturer of measurement and control technology which is widely used for CNC machines. Their controllers provide the Heidenhain DNC interface, also referred to as “option 18”, which enables vertical integration of devices and allows users to access data and functions of a system. The DNC protocol is based on Remote Procedure Calls (RPC), which means it carries out operations by calling methods on the target device. You can find a list of the available methods in the Cybus Docs.

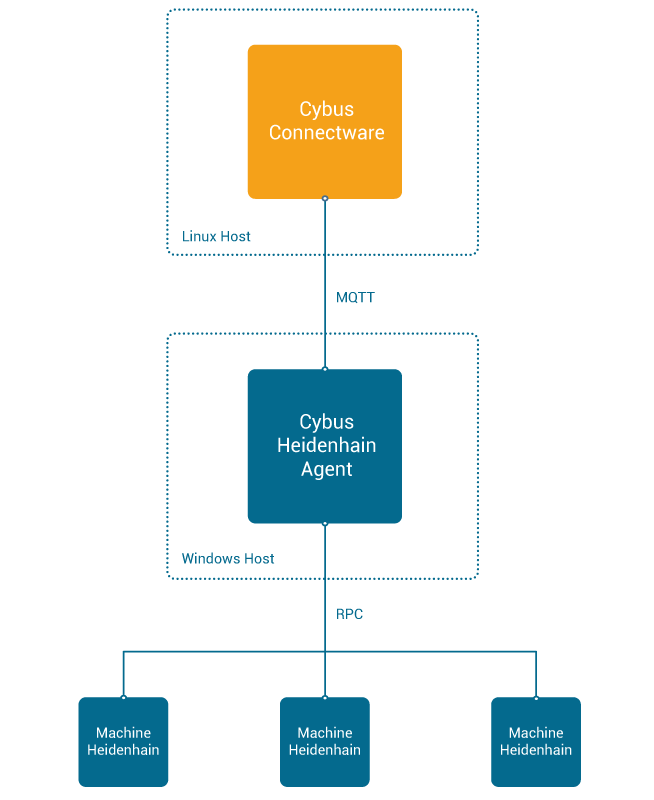

Cybus Heidenhain Agent

Utilizing Heidenhain DNC with Connectware requires the Cybus Heidenhain Agent running on a Windows machine or server on your network. This agent uses the Heidenhain RemoTools SDK to connect to one or more Heidenhain controllers and communicates with Connectware via MQTT. The Cybus Heidenhain Agent and its required dependencies are provided by our Support Team.

Prerequisites

The host of the Cybus Heidenhain Agent must meet the following requirements:

- Windows 10 or Windows Server 2019

- Installed Heidenhain SDK

- Windows host must be able to reach controllers (or emulators) via network
  - iTNC 530 / TNC 426:
    - Windows Host -> Heidenhain Controller: ICMP (Ping)
    - Windows Host -> Heidenhain Controller: 19000/TCP
  - TNC 640:
    - Windows Host -> Heidenhain Controller: ICMP (Ping)
    - Windows Host -> Heidenhain Controller: 19003/TCP
    - Heidenhain Controller -> Agent-Host: 19010-19015/TCP


- Windows host must be able to reach Connectware instance via network
  - Windows Host -> Connectware: 8883/TCP

Installation

- Start the Cybus Heidenhain Agent installer and follow the installation instructions

- Enter the Connectware host IP address

After successful installation, a Windows system service named “Cybus Heidenhain Agent” is up and running. It is already configured to start automatically when Windows restarts and to restart after a crash. You can inspect its status under Windows Services at any time, and its log messages in the Windows Event Viewer.

Post-Installation Steps

Go to the Connectware Admin User Interface (Admin UI) and log in.

Agent Role (once)

To communicate with Connectware, the agent needs to publish and subscribe to certain MQTT topics, so we need to grant it this permission. Permissions are bundled within Connectware roles (see User Management Basics). Create the following role:

- Name: heidenhain-agent

- Data permission: edge.cybus/# (Read and Write)
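The data permission edge.cybus/# uses the MQTT multi-level wildcard #. To illustrate which topics the role covers, the following Python sketch implements standard MQTT topic-filter matching. It assumes that Connectware evaluates data permissions with these standard semantics. The example topics are hypothetical and are not the agent's actual topics.

```python
def topic_matches(topic_filter: str, topic: str) -> bool:
    """Check whether an MQTT topic matches a topic filter (standard wildcard rules).

    '+' matches exactly one topic level; '#' must be the last level and matches
    the parent level plus any number of child levels. The special handling of
    topics starting with '$' is omitted for brevity.
    """
    filter_levels = topic_filter.split("/")
    topic_levels = topic.split("/")
    for i, level in enumerate(filter_levels):
        if level == "#":
            # '#' is only valid as the final level of a filter.
            return i == len(filter_levels) - 1
        if i >= len(topic_levels):
            return False
        if level != "+" and level != topic_levels[i]:
            return False
    return len(filter_levels) == len(topic_levels)


print(topic_matches("edge.cybus/#", "edge.cybus/example/topic"))  # True
print(topic_matches("edge.cybus/#", "services/heidenhaindncexample/getState"))  # False
```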

Agent Onboarding (per Agent)

- Use the Client Registry (User Management > Client Registry) to onboard the agent (for details see How To Connect an MQTT Client)

- Enable self registration

- Wait for agent to appear

- Check if agent is correct

- Grant access to agent and assign the heidenhain-agent role

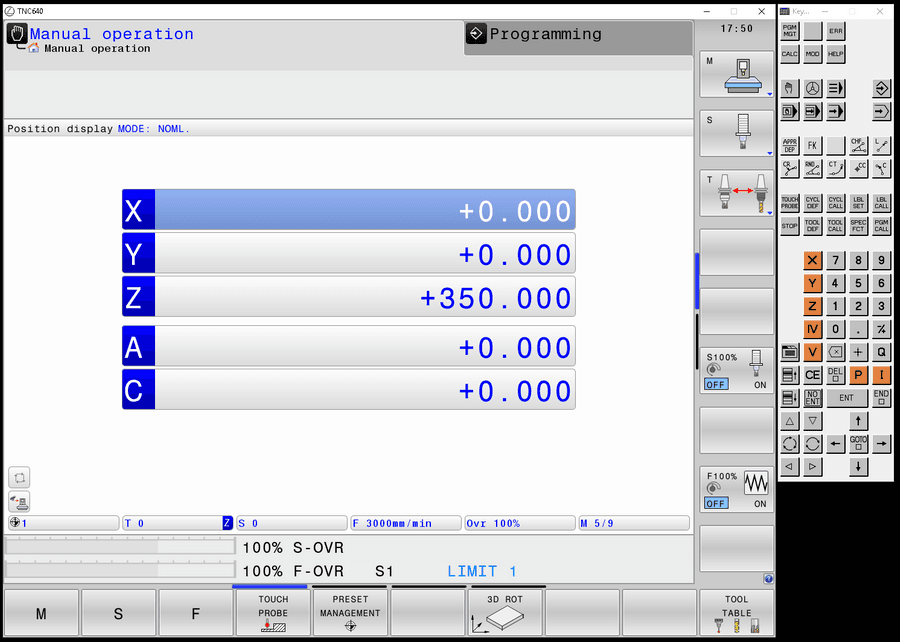

Installing and Starting the Heidenhain Emulator

For this lesson we do not have a machine with a TNC 640 controller available, so we will use the TNC 640 emulator running on a Windows machine.

Download the latest version of the TNC 640 Programming Station from heidenhain.com. Install it on the same machine as the agent, or on another Windows machine with a known IP address in the same network. After the installation, you can start the program with the desktop shortcut TNC 640. Once the program has started and you see the Power interrupted tab, press the CE button on the keypad to enter manual mode. The emulator should now also be available on your network.

Selecting the Methods

The most essential information we need to write the commissioning file for our Heidenhain DNC application is the set of controller methods we want to make available through Connectware. We could take the whole list from the Cybus Docs and make all of these functions available on Connectware. To keep this lesson focused, however, we will pick a small set of them for demonstration purposes.

We will integrate the following methods:

- getState

- getPlcData

- getToolTableRow

- transmitFile

- onToolTableChanged

Writing the Commissioning File

The Commissioning File is a set of parameters which describes the resources that are necessary to collect and provide all the data for our application. It contains information about all connections, data endpoints and mappings and is read by Connectware. To understand the file’s anatomy in detail, please consult the Cybus Docs.

To get started, open a text editor and create a new file, e.g. heidenhain-example-commissioning-file.yml. The commissioning file is in the YAML format, perfectly readable for humans and machines! We will now go through the process of defining the required sections for this example:

- Description

- Metadata

- Parameters

- Resources

Description and Metadata

These sections contain general information about the commissioning file. You can give a short description and add a set of metadata. Of the metadata, only the name is required; the rest is optional. For this lesson we will use the following information:

```yaml
description: >
  Heidenhain DNC Example Commissioning File
  Cybus Learn - How to connect a machine via Heidenhain DNC interface
  https://learn.cybus.io/lessons/how-to-connect-heidenhain-dnc/

metadata:
  name: Heidenhain DNC Example
  version: 1.0.0
  icon: https://www.cybus.io/wp-content/uploads/2019/03/Cybus-logo-Claim-lang.svg
  provider: Cybus GmbH
  homepage: https://www.cybus.io
```

Parameters

Parameters allow the user to prepare commissioning files for multiple use cases by referring to them from within the commissioning file. Every time a commissioning file is applied or a service reconfigured in Connectware, the user is asked to enter custom values for the parameters or to confirm the default values.

```yaml
parameters:
  agentId:
    type: string
    description: Agent Identification (Cybus Heidenhain Agent)
    default: <yourAgentId>

  machineIP:
    type: string
    description: IP Address of the machine
    default: <yourMachineAddress>

  cncType:
    type: string
    default: tnc640
    description: >-
      Type of the machine control (DNC Type). Allowed values: tnc640, itnc530,
      tnc426.
    allowedValues:
      - tnc640
      - itnc530
      - tnc426
```

These values vary from setup to setup, so it makes sense to make them configurable. The agentId is the name of the agent’s user in Connectware, which was defined during client registration. In our example, machineIP is the address of the Windows machine running the TNC 640 emulator. With a real machine tool, it would be the address of that machine. With the parameter cncType we define the type of controller we use. We also declare the currently supported controller types as allowedValues for this parameter.
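The following Python sketch makes the effect of default and allowedValues concrete by showing how parameter values could be resolved and checked. It is an illustration only and not Connectware's implementation. User input overrides the default, and a value outside allowedValues is rejected. The agent ID and IP address in the usage line are hypothetical.

```python
from typing import Any, Dict

# Parameter definitions mirroring the YAML above (type and description omitted).
PARAMETERS: Dict[str, Dict[str, Any]] = {
    "agentId": {"default": "<yourAgentId>"},
    "machineIP": {"default": "<yourMachineAddress>"},
    "cncType": {"default": "tnc640", "allowedValues": ["tnc640", "itnc530", "tnc426"]},
}


def resolve_parameters(definitions: Dict[str, Dict[str, Any]],
                       user_values: Dict[str, Any]) -> Dict[str, Any]:
    """Merge user input with defaults and reject values outside allowedValues."""
    resolved = {}
    for name, spec in definitions.items():
        value = user_values.get(name, spec.get("default"))
        allowed = spec.get("allowedValues")
        if allowed is not None and value not in allowed:
            raise ValueError(f"{name}={value!r} is not one of {allowed}")
        resolved[name] = value
    return resolved


# Hypothetical input: agent ID and IP address are made up for illustration.
print(resolve_parameters(PARAMETERS, {"agentId": "heidenhain-mypc", "machineIP": "192.168.0.10"}))
```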

Resources

In the resources section we declare every resource that is needed for our application. For details about the different resource types and available protocols, please consult the Cybus Docs.

Connection

The first resource we need is a connection to the Heidenhain controller. The connection is defined by its type and its type-specific properties. In the case of Cybus::Connection we declare which protocol and connection parameters we want to use. For the definition of our connection we reference the earlier declared parameters agentId, machineIP and cncType by using !ref.

```yaml
resources:
  heidenhainConnection:
    type: 'Cybus::Connection'
    properties:
      protocol: Heidenhain
      connection:
        agent: !ref agentId
        ipAddress: !ref machineIP
        cncType: !ref cncType
        plcPassword: <password>
        usrPassword: <password>
        tablePassword: <password>
        sysPassword: <password>
```

Access to your TNC 640 controller is restricted by four preconfigured passwords. If you need help finding the necessary passwords, feel free to contact our Support Team. For iTNC 530 and TNC 426, no password is required.

Endpoints

The next resources we need are the endpoints, which provide or accept data. All endpoints share some properties:

- the protocol, set to Heidenhain
- the connection, which references the previously defined connection resource using !ref
- the optional topic, which defines the MQTT topic on which the result will be published

By default, the full endpoint topic expands to services/<serviceId>/<topic>. For more information, please consult the Cybus Docs.

The endpoints make use of the methods we selected earlier. Since those methods differ from each other, let’s take a look at each endpoint definition in turn.

```yaml
  getStatePolling:
    type: 'Cybus::Endpoint'
    properties:
      protocol: Heidenhain
      connection: !ref heidenhainConnection
      topic: getState
      subscribe:
        method: getState
        type: poll
        pollInterval: 5000
        params: []
```

The first endpoint uses the method getState, which requests the current machine state, and publishes the result on the topic getState. It is defined with the property subscribe. For a Heidenhain connection, this means that the state is requested repeatedly at the defined pollInterval (here 5000 ms). This is known as polling, which is why the type is set to poll.

```yaml
  getState:
    type: 'Cybus::Endpoint'
    properties:
      protocol: Heidenhain
      connection: !ref heidenhainConnection
      topic: getState
      read:
        method: getState
```

Alternatively, we can use the method getState to request the state only once, whenever the endpoint is called. This endpoint differs from the previous one in using the property read instead of subscribe.

- To call the method, publish an MQTT message to the topic services/<serviceId>/<topic>/req.
- The result of the method is published on the topic services/<serviceId>/<topic>/res.

Replace <topic> with the topic we defined for this endpoint, namely getState. The serviceId is defined during the installation of the service and can be taken from the services list in the Connectware Admin UI.
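Because the full topics follow a fixed pattern, they are easy to derive. This Python sketch assumes the default scheme described above: services/<serviceId>/<topic>, plus /req and /res for read endpoints. As an example it uses heidenhaindncexample, the service ID that appears later in the Explorer.

```python
def endpoint_topics(service_id: str, topic: str) -> dict:
    """Expand an endpoint's topic into full MQTT topics (default scheme assumed)."""
    base = f"services/{service_id}/{topic}"
    return {
        "data": base,          # where subscribe endpoints publish their results
        "req": f"{base}/req",  # publish here to call a read endpoint
        "res": f"{base}/res",  # the result of a read call arrives here
    }


topics = endpoint_topics("heidenhaindncexample", "getState")
print(topics["req"])  # services/heidenhaindncexample/getState/req
```

The same helper yields, for example, services/heidenhaindncexample/notify/onToolTableChanged for the notification endpoint defined below.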

```yaml
  getToolTableRow:
    type: 'Cybus::Endpoint'
    properties:
      protocol: Heidenhain
      connection: !ref heidenhainConnection
      topic: getToolTableRow
      read:
        method: getToolTableRow
```

The previously used method getState does not expect any arguments, so we could call it by publishing an empty message on the req topic. The method getToolTableRow requests a specific row of the tool table. To specify which row should be requested, we need to supply the toolId. We will look at an example of this method call in the last section of the article.

```yaml
  getPlcData:
    type: 'Cybus::Endpoint'
    properties:
      protocol: Heidenhain
      connection: !ref heidenhainConnection
      topic: getPlcData
      read:
        method: getPlcData
```

The method getPlcData allows us to request data stored at a given memory address of the controller. The required arguments are memoryType and memoryAddress.

```yaml
  transmitFile:
    type: 'Cybus::Endpoint'
    properties:
      protocol: Heidenhain
      connection: !ref heidenhainConnection
      topic: transmitFile
      read:
        method: transmitFile
```

The method transmitFile allows us to transmit a file in the form of a base64-encoded buffer to a destination path on the Heidenhain controller. It expects two arguments: the string fileBuffer and another string destinationPath.
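Preparing the fileBuffer argument amounts to base64-encoding the file's bytes. The Python sketch below makes two assumptions. First, that params are passed in the order listed above (fileBuffer, then destinationPath). Second, that the request uses the id/params format described in the section on verifying the connection. The destination path placeholder in the usage example is hypothetical.

```python
import base64
import json


def transmit_file_params(data: bytes, destination_path: str) -> list:
    """Return the params array for a transmitFile request.

    Assumption: the endpoint expects [fileBuffer, destinationPath], where
    fileBuffer is the base64-encoded file content as an ASCII string.
    """
    file_buffer = base64.b64encode(data).decode("ascii")
    return [file_buffer, destination_path]


# Hypothetical usage: in practice the bytes would come from open(path, "rb").read().
example = json.dumps({"id": "3", "params": transmit_file_params(b"hello", "<destinationPath>")})
print(example)  # {"id": "3", "params": ["aGVsbG8=", "<destinationPath>"]}
```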

```yaml
  onToolTableChanged:
    type: 'Cybus::Endpoint'
    properties:
      protocol: Heidenhain
      connection: !ref heidenhainConnection
      topic: notify/onToolTableChanged
      subscribe:
        type: notify
        method: onToolTableChanged
```

The last endpoint we define calls the method onToolTableChanged. This is an event method that sends a notification whenever the tool table changes. For this we have to use the property subscribe along with the type notify. This means that we do not poll the method here. Instead, we subscribe to it and wait for a notification on the specified topic. You can trigger a notification by modifying the tool table in the TNC emulator.

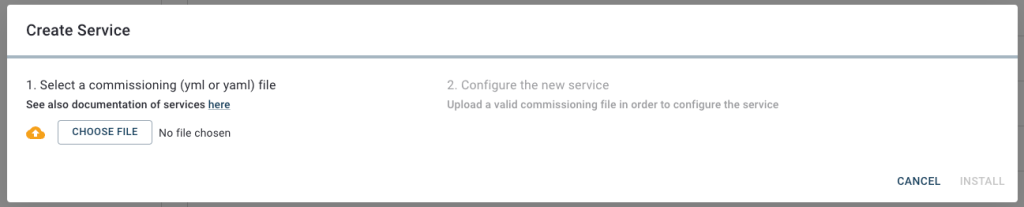

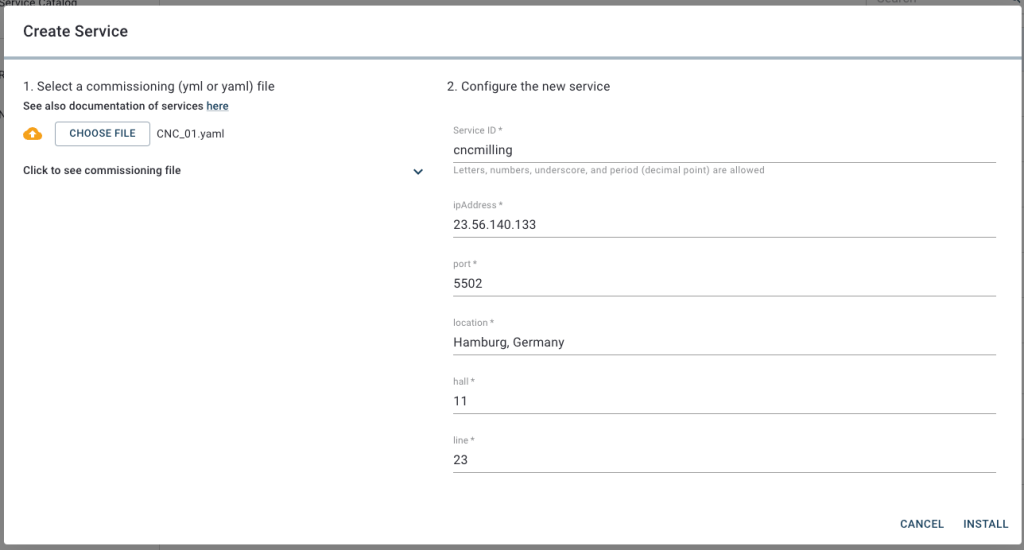

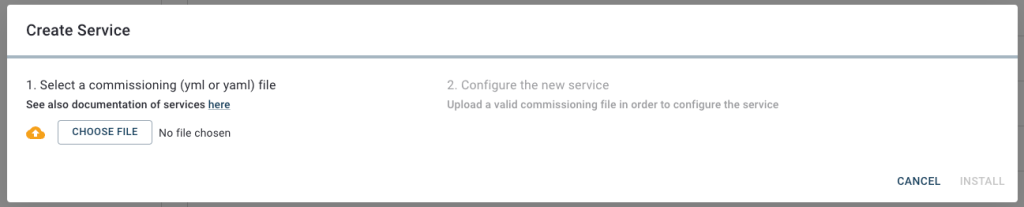

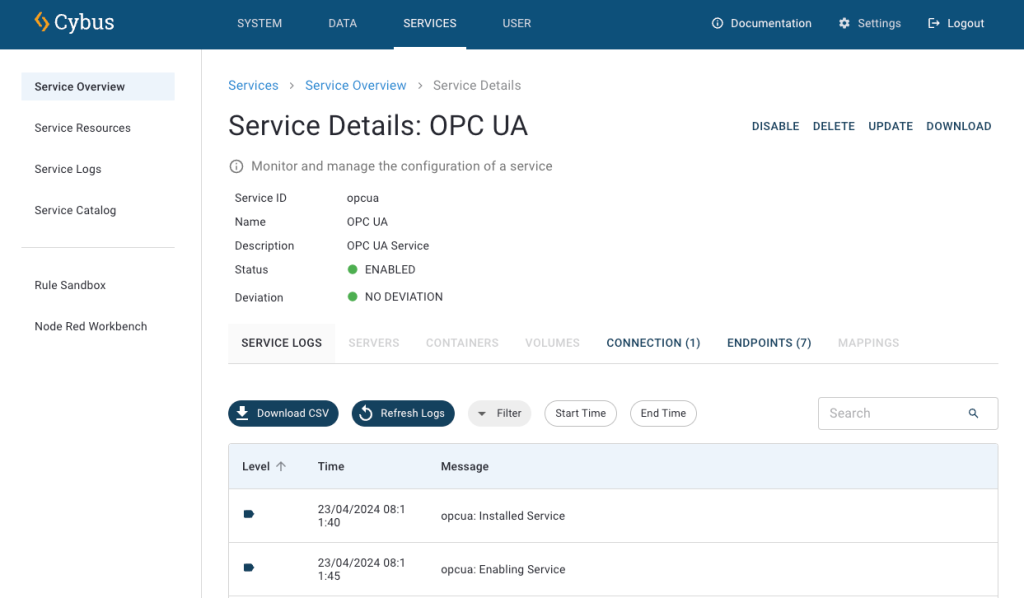

Installing the Commissioning File

You now have the commissioning file ready for installation. Head over to the Services tab in the Connectware Admin UI and hit the (+) button to select and upload the commissioning file. You will be asked to specify values for each member of the parameters section or to confirm the default values. With a properly written commissioning file, confirming this dialog installs a service that manages all the resources we just defined: the connection to the Heidenhain controller and the endpoints collecting data from the controller. After enabling this service, you can check whether everything works!

How do all the components we installed and defined work together?

As soon as it is started, the Heidenhain agent running on the Windows machine tries to connect to Connectware at the IP address defined during its installation. We saw these connection attempts when we opened the Connectware Client Registry and accepted the request of the Heidenhain agent named heidenhain-<windows-pc-hostname>. As a result, a user with this name was created in Connectware. We manually assigned this user the role heidenhain-agent and thereby granted it permission to access the MQTT topics used for data exchange.

After the service is installed, Connectware tries to establish the Heidenhain connection we declared in the resources section of the commissioning file. There we defined the name of the Heidenhain agent and the IP address of the Heidenhain controller to connect to. In our case, this is the emulator, which runs on the same machine as the agent. (With a real machine controller, this would of course not be the case.) As soon as the connection to the Heidenhain controller is established, the service enables the endpoints. These rely on method calls that the agent issues to the Heidenhain controller via RPC. To address multiple Heidenhain controllers, we could use the same agent, but we would need to specify a separate connection resource for each of them.

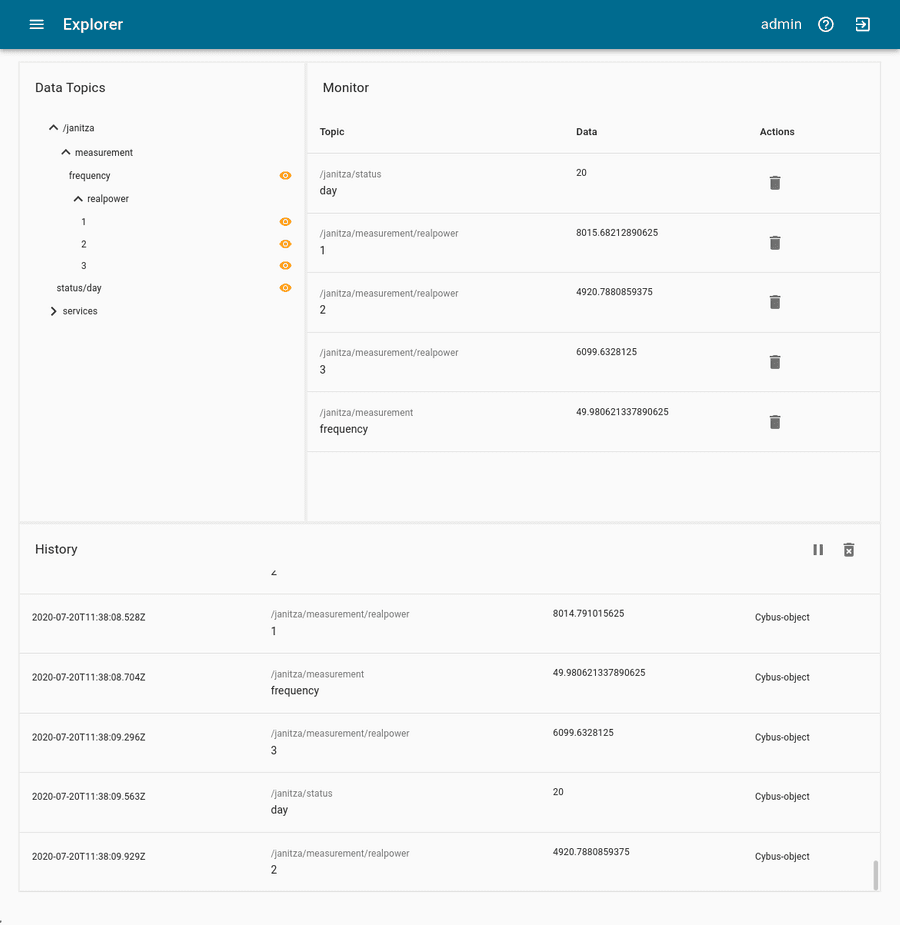

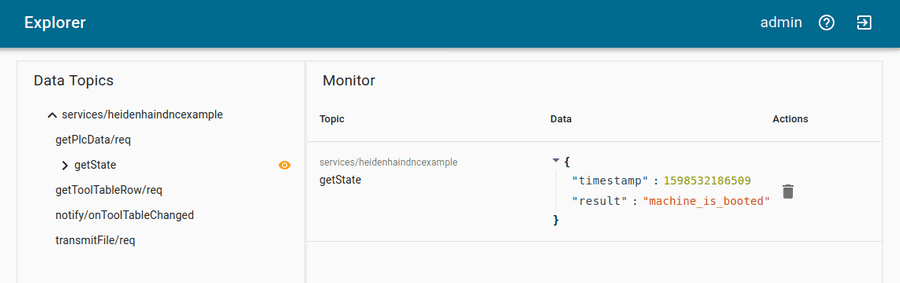

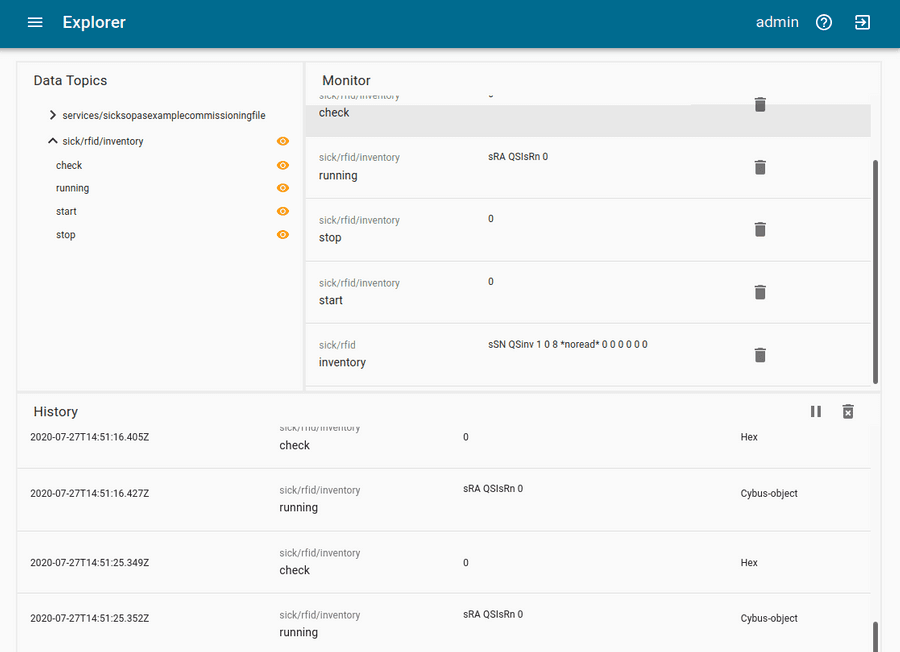

Verifying the Connection

Now that we have a connection established between the Heidenhain controller and Connectware, we can go to the Explorer tab of the Admin UI, where we see a tree structure of our newly created data points. Since we subscribed to the method getState, we should already see data being polled and published on this topic. Find the topic getState under services/heidenhaindncexample.

On MQTT topics, the data is provided in JSON format. To use a method that expects arguments, you issue a request by publishing a message on the corresponding req topic with the arguments as payload. For example, to use the endpoint getToolTableRow, you could publish the following message to request the tool information for tool table ID 42:

```json
{
  "id": "1",
  "params": [ "42" ]
}
```

The payload must be a valid JSON object and can contain two properties:

- id: (optional) User-defined correlation ID which can be used to identify the response. If this property was given, its value will be returned in the return message.

- params: Array of parameters required for the used method. If the method requires no parameters, this property is optional, too.
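The following Python sketch builds such a payload with the standard json module. It always includes params, using an empty list when a method takes no arguments, as the Node-RED flow in this lesson does for getState. It adds id only when one is given. build_request is a hypothetical helper name.

```python
import json
from typing import Optional, Sequence


def build_request(params: Sequence[str] = (), request_id: Optional[str] = None) -> str:
    """Serialize a request for a Heidenhain read endpoint's req topic."""
    message = {}
    if request_id is not None:
        # Optional correlation ID, echoed back in the response message.
        message["id"] = request_id
    message["params"] = list(params)
    return json.dumps(message)


print(build_request(["42"], request_id="1"))  # {"id": "1", "params": ["42"]}
```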

The arguments required for each call are listed along with the methods in the Cybus Docs.

The answer you would receive to this method call could look as follows:

```json
{
  "timestamp": 1598534586513,
  "result": {
    "CUR_TIME": "0",
    "DL": "+0",
    "DR": "+0",
    "DR2": "+0",
    "L": "+90",
    "NAME": "MILL_D24_FINISH",
    "PLC": "%00000000",
    "PTYP": "0",
    "R": "+12",
    "R2": "+0",
    "T": "32",
    "TIME1": "0",
    "TIME2": "0"
  },
  "id": "1"
}
```
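On the receiving side, a client can match the id against the request it sent and convert the string values it needs. In this Python sketch, the choice of fields is an illustrative assumption. T is read as the tool number, NAME as the tool name, and L and R as the tool length and radius, following the usual Heidenhain tool-table column names. Values such as "+90" arrive as strings and are converted with float().

```python
import json


def parse_tool_row_response(payload: str, expected_id: str) -> dict:
    """Check the correlation ID of a getToolTableRow response and pick out a few fields."""
    message = json.loads(payload)
    if message.get("id") != expected_id:
        raise ValueError(f"unexpected correlation id: {message.get('id')!r}")
    row = message["result"]
    return {
        "tool": int(row["T"]),      # tool number
        "name": row["NAME"],        # tool name
        "length": float(row["L"]),  # e.g. "+90" -> 90.0
        "radius": float(row["R"]),  # e.g. "+12" -> 12.0
    }
```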

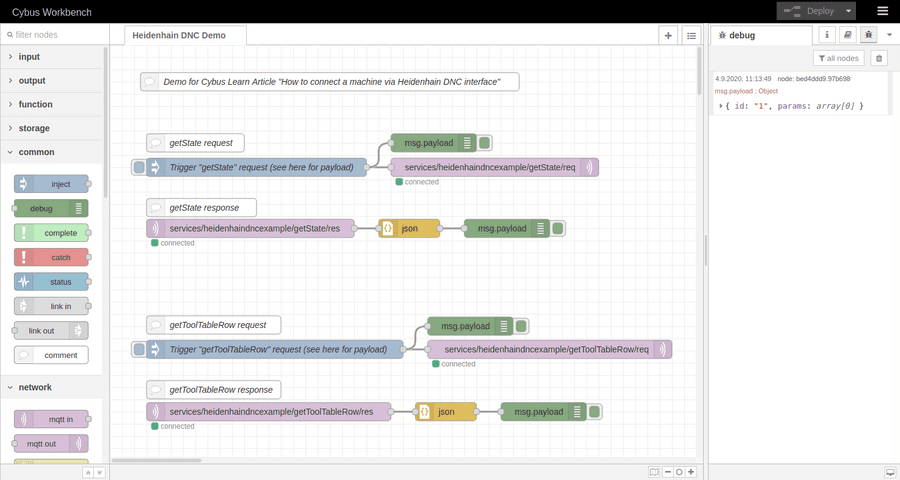

To learn how you can easily test and interact with MQTT topics like in this example, check out the article How to connect MQTT clients. In the Workbench, select Import from the menu in the upper right corner and import the nodered-flow.json from the Example Repository. This adds the test flow shown below. Add your MQTT credentials to the purple subscribe and publish nodes, then trigger the requests.

```json
[
{"id":"cba40915.308b38","type":"tab","label":"Heidenhain DNC Demo","disabled":false,"info":""},
{"id":"bc623e05.ae0f7","type":"inject","z":"cba40915.308b38","name":"Trigger \"getState\" request (see here for payload)","props":[{"p":"payload"},{"p":"topic","vt":"str"}],"repeat":"","crontab":"","once":false,"onceDelay":0.1,"topic":"","payload":"{\"id\":\"1\",\"params\":[]}","payloadType":"json","x":240,"y":200,"wires":[["aff3fdcf.d275d","9fd44184.a11d1"]]},
{"id":"f450d307.396ee","type":"comment","z":"cba40915.308b38","name":"Demo for Cybus Learn Article \"How to connect a machine via Heidenhain DNC interface\"","info":"","x":360,"y":60,"wires":[]},
{"id":"aff3fdcf.d275d","type":"mqtt out","z":"cba40915.308b38","name":"","topic":"services/heidenhaindncexample/getState/req","qos":"0","retain":"false","broker":"b7832f1d.e9d4e","x":630,"y":200,"wires":[]},
{"id":"ddec5b0c.c0c418","type":"mqtt in","z":"cba40915.308b38","name":"","topic":"services/heidenhaindncexample/getState/res","qos":"0","datatype":"auto","broker":"b7832f1d.e9d4e","x":230,"y":300,"wires":[["e1df9994.c3edc8"]]},
{"id":"21f886a8.ac3a2a","type":"debug","z":"cba40915.308b38","name":"","active":true,"tosidebar":true,"console":false,"tostatus":false,"complete":"false","statusVal":"","statusType":"auto","x":650,"y":300,"wires":[]},
{"id":"dc2c1840.954bb8","type":"comment","z":"cba40915.308b38","name":"getState request","info":"","x":140,"y":160,"wires":[]},
{"id":"668e0f37.4f5","type":"comment","z":"cba40915.308b38","name":"getState response","info":"","x":150,"y":266,"wires":[]},
{"id":"ec1150ad.7a23d","type":"inject","z":"cba40915.308b38","name":"Trigger \"getToolTableRow\" request (see here for payload)","props":[{"p":"payload"},{"p":"topic","vt":"str"}],"repeat":"","crontab":"","once":false,"onceDelay":0.1,"topic":"","payload":"{\"id\":\"2\",\"params\":[\"42\"]}","payloadType":"json","x":270,"y":498,"wires":[["d317feb3.3eb0b","4c3ab86e.f653b8"]]},
{"id":"d317feb3.3eb0b","type":"mqtt out","z":"cba40915.308b38","name":"","topic":"services/heidenhaindncexample/getToolTableRow/req","qos":"0","retain":"false","broker":"b7832f1d.e9d4e","x":720,"y":498,"wires":[]},
{"id":"5a2f9aca.f82724","type":"mqtt in","z":"cba40915.308b38","name":"","topic":"services/heidenhaindncexample/getToolTableRow/res","qos":"0","datatype":"auto","broker":"b7832f1d.e9d4e","x":260,"y":600,"wires":[["3ad07f7c.3bf85"]]},
{"id":"b888e063.a8f84","type":"debug","z":"cba40915.308b38","name":"","active":true,"tosidebar":true,"console":false,"tostatus":false,"complete":"false","statusVal":"","statusType":"auto","x":710,"y":600,"wires":[]},
{"id":"65db090f.922aa8","type":"comment","z":"cba40915.308b38","name":"getToolTableRow request","info":"","x":170,"y":458,"wires":[]},
{"id":"23d593fd.e852ac","type":"comment","z":"cba40915.308b38","name":"getToolTableRow response","info":"","x":170,"y":564,"wires":[]},
{"id":"3ad07f7c.3bf85","type":"json","z":"cba40915.308b38","name":"","property":"payload","action":"","pretty":false,"x":550,"y":600,"wires":[["b888e063.a8f84"]]},
{"id":"e1df9994.c3edc8","type":"json","z":"cba40915.308b38","name":"","property":"payload","action":"","pretty":false,"x":490,"y":300,"wires":[["21f886a8.ac3a2a"]]},
{"id":"9fd44184.a11d1","type":"debug","z":"cba40915.308b38","name":"","active":true,"tosidebar":true,"console":false,"tostatus":false,"complete":"false","statusVal":"","statusType":"auto","x":530,"y":160,"wires":[]},
{"id":"4c3ab86e.f653b8","type":"debug","z":"cba40915.308b38","name":"","active":true,"tosidebar":true,"console":false,"tostatus":false,"complete":"false","statusVal":"","statusType":"auto","x":590,"y":460,"wires":[]},
{"id":"b7832f1d.e9d4e","type":"mqtt-broker","z":"","name":"Connectware","broker":"connectware","port":"1883","clientid":"","usetls":false,"compatmode":false,"keepalive":"60","cleansession":true,"birthTopic":"","birthQos":"0","birthPayload":"","closeTopic":"","closeQos":"0","closePayload":"","willTopic":"","willQos":"0","willPayload":""}
]
```

Summary

In this article we went through the process of setting up a connection between a Heidenhain controller and the Cybus Connectware via the Heidenhain DNC interface. This required the Cybus Heidenhain agent to translate between Connectware and Heidenhain DNC by making use of the RemoTools SDK. We wrote the commissioning file that set up the Connectware service which connected to an emulated instance of a TNC 640 and requested and received information about the tool with ID 42. This or any other data from the Heidenhain DNC interface could now securely be vertically integrated along with data from any other interface through the unified API of Connectware.

Where to Go from Here

Connectware offers powerful features to build and deploy applications for gathering, filtering, forwarding, monitoring, displaying, buffering, and all kinds of data processing… why not build a dashboard, for instance? For guides, check out more of Cybus Learn.

Introduction

MQTT, an open network protocol, and OPC UA, an industry standard for data exchange, are the two most common players in the IIoT sphere. MQTT (Message Queuing Telemetry Transport) is often used to connect applications and systems, while OPC UA (Open Platform Communications Unified Architecture) is used to connect machines. However, there are also applications and systems that support OPC UA, just as there are machines and devices that support MQTT. Enabling communication between multiple machines/devices and applications that support different protocols therefore raises two questions. First, how do we bridge the gap between the two protocols? Second, how do we do so in an efficient, sustainable, secure and extensible way?

This article discusses the main aspects of MQTT and OPC UA and illustrates how these protocols can be combined in IIoT solutions. The information presented here is aimed primarily at IIoT architects.

MQTT and OPC UA: Origins and Characteristics

These two protocols are the most widely supported and most widely used in the IIoT. MQTT originated in the IT sphere and is supported by major IoT cloud providers, such as Azure, AWS and Google. It is also supported by players specialized in industrial use cases, e.g. Adamos, Mindsphere and Bosch IoT, to name a few. The idea behind MQTT was to create a very simple yet highly reliable protocol that can be used in various scenarios (for more information on MQTT, see MQTT Basics). OPC UA, in contrast, was created by an industry consortium to boost interoperability between machines of different manufacturers. Like MQTT, OPC UA covers core aspects of security (authentication, authorization and encryption of the data). In addition, it meets all essential industrial security standards.

The Nature of IIoT Use Cases

IIoT use cases are complex because they bring together two distinct environments: Information Technology (IT) and Operational Technology (OT). Traditionally, the IT and OT worlds were separated from each other, had different needs and thus developed very different practices. One such difference is their reliance on different communication protocols. The IT world is primarily shaped by higher-level applications, web technology and server infrastructure, so the adoption of MQTT as an alternative to HTTP is on the rise there. In the OT world, meanwhile, OPC UA is the preferred choice because it can provide a perfectly described interface to industrial equipment.

Today, however, the IT and OT worlds are gradually converging. Machine data generated on the shopfloor (OT) is needed for IIoT use cases such as predictive maintenance or optimization services, which run in specialized IT applications and often in the cloud. Companies can therefore benefit from combining elements from both fields. For communication protocols, for example, they can use MQTT and OPC UA alongside each other. A company can choose whichever protocol suits each endpoint of its use case and then bridge the protocols accordingly. If used properly, the combination of both protocols delivers the greatest performance and flexibility.

Bringing MQTT and OPC UA Together

As already mentioned above, applications usually rely on MQTT and machines on OPC UA. However, it is not always that straightforward. Equipment may also speak MQTT and MES systems may support OPC UA. Some equipment and systems may even support both protocols. On top of that, there are also numerous other protocols apart from MQTT and OPC UA. All this adds more dimensions to the challenge of using data in the factory.

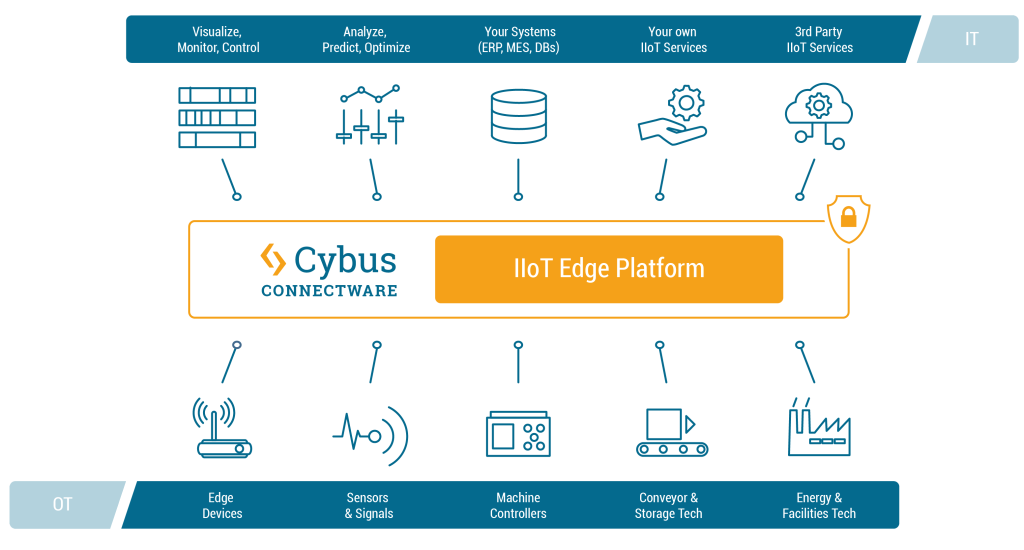

This IIoT challenge can, however, be solved with the help of middleware. Middleware closes the gap between the IT and OT levels; it enables and optimises their interaction. The Cybus Connectware is such a middleware.

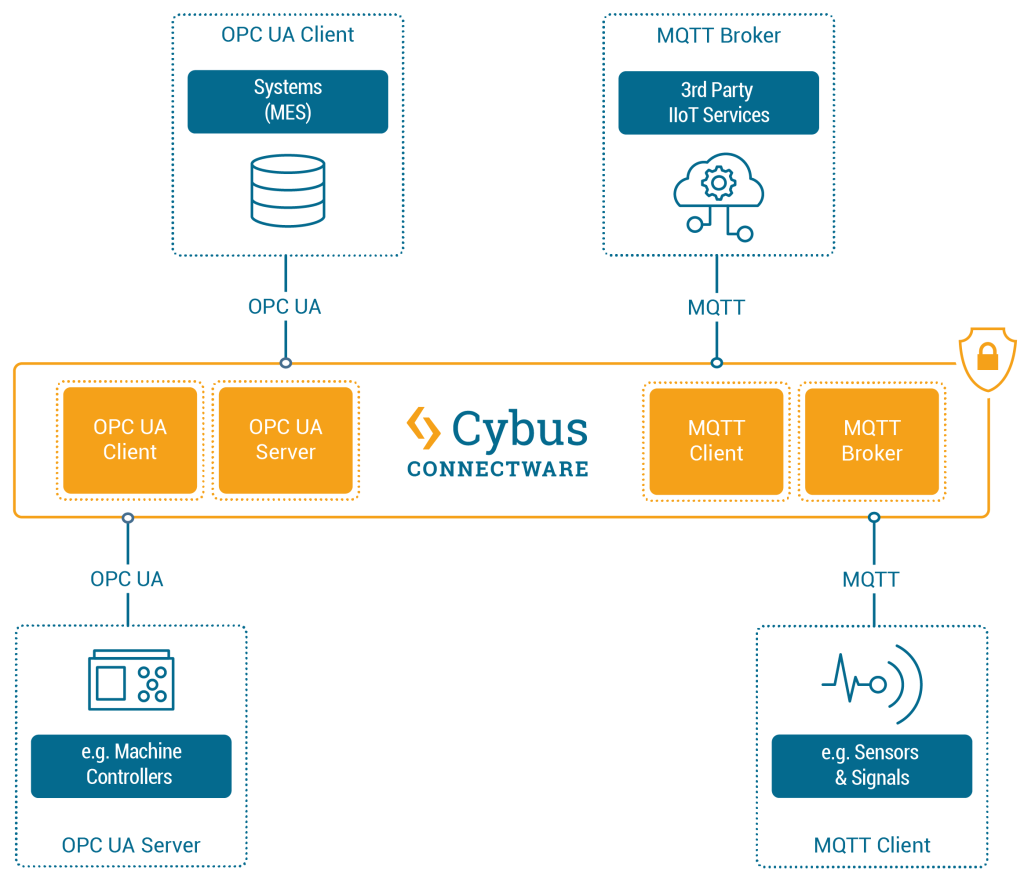

The Cybus Connectware supports a broad variety of protocols, including MQTT and OPC UA. It thus makes it possible to connect nearly any sort of IT application with nearly any sort of OT equipment. In the case of OPC UA and MQTT, bridging the two protocols is achieved by connecting four parties: OPC UA Client, OPC UA Server, MQTT Client and MQTT Broker. The graphic below illustrates how the Cybus Connectware incorporates these four parties.

On the machines layer, different equipment can be connected to Connectware. For example, to connect a device that uses OPC UA, such as a CNC controller (e.g. Siemens SINUMERIK), Connectware serves as the OPC UA Client and the controller as the OPC UA Server. When connecting a device that supports MQTT (e.g. a retrofit sensor), Connectware acts as the MQTT broker, and the sensor is the MQTT client.

Likewise, various applications can be connected to Connectware on the applications layer. When connecting services that support MQTT (e.g. Azure IoT Hub or AWS IoT / Greengrass), Connectware acts as the MQTT client, while those services act as MQTT brokers. When connecting systems that support OPC UA (e.g. MES), Connectware plays the role of the OPC UA Server, while the systems act as OPC UA clients.
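The role assignments in the two paragraphs above can be summarized as a lookup table. This Python sketch simply restates the article's examples. The key and value strings are illustrative and are not Connectware configuration values.

```python
# (peer protocol, peer role) -> role Connectware plays, per the article's examples
CONNECTWARE_ROLE = {
    ("OPC UA", "server"): "OPC UA client",  # e.g. a SINUMERIK CNC controller
    ("MQTT", "client"): "MQTT broker",      # e.g. a retrofit sensor
    ("MQTT", "broker"): "MQTT client",      # e.g. Azure IoT Hub, AWS IoT / Greengrass
    ("OPC UA", "client"): "OPC UA server",  # e.g. an MES
}


def connectware_role(protocol: str, peer_role: str) -> str:
    """Return the counterpart role Connectware takes for a given peer."""
    return CONNECTWARE_ROLE[(protocol, peer_role)]


print(connectware_role("MQTT", "client"))  # MQTT broker
```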

The question may arise why applications or systems that support a specific protocol should not be connected directly to devices that support the same protocol. Examples would be a SINUMERIK machine controller connected to an MES (which both “speak” OPC UA), or a retrofit sensor connected to the Azure IoT Hub (which can both communicate via MQTT). Although this is theoretically possible, in practice it comes with fundamental disadvantages that can quickly become costly problems. Such a tightly coupled system requires far more effort as well as in-depth protocol and programming skills. It is also cumbersome to administer and does not scale. Most importantly, it lacks agility when introducing changes such as adding new data sources, services or applications. A “pragmatic” 1:1 connectivity approach therefore slows down business enablement by those responsible for IIoT, exactly where they need to accelerate.

At this point, it is worth moving from the very detailed example of MQTT and OPC UA to a broader picture, because IIoT is a topic full of diversity and dynamics.

In contrast to the 1:1 connectivity approach, the Connectware IIoT Edge Platform enables (m)any-to-(m)any connectivity between pretty much any OT and IT data endpoints. From a strategic point of view, Connectware acts as a “technology-neutral layer”. It provides limitless compatibility in the IIoT ecosystem while maintaining convenient independence from powerful providers and platforms. It offers a unified, standardised and systematic environment made to fit expert users’ preferences. On this basis, those responsible for IIoT can leverage key tactical benefits such as data governance, workflow automation and advanced security. You can read more about these aspects and dive into further operational capabilities in related articles.

Related Links

- How to connect to an OPC UA server

- How to set up the integrated Connectware OPC UA server

- How to connect an MQTT client to publish and subscribe data

- Connectware & Azure IoT Hub Integration

- Connectware & AWS IoT (Greengrass) Integration

Prerequisites

This lesson assumes that you want to integrate a SICK sensor device via the SOPAS protocol with the Cybus Connectware. To understand the basic concepts of the Connectware, please check out the Technical Overview lesson.

To follow along with the example, you will also need a running instance of the Connectware. If you don’t have that, learn How to install the Connectware.

Although we focus on SOPAS here, we will ultimately access all data via MQTT, so you should also be familiar with it. If in doubt, head over to our MQTT Basics lesson. For the Rule Engine section at the end, basic knowledge of JSON and JSONata is useful.

Introduction

This article teaches you how to integrate a SICK sensor device. In more detail, the following topics are covered:

- Identifying SOPAS commands

- Creating the Commissioning File

- Specifying MQTT mappings

- Establishing a connection via the Connectware Admin UI

- Verifying data in the Connectware Explorer

- Utilizing the Rule Engine

The Commissioning Files used in this lesson are made available in the Example Files Repository on GitHub.

Example Setup for This Lesson

Our setup for this lesson consists of a SICK RFU620 RFID reading device. RFID stands for “Radio Frequency Identification” and enables reading from (and writing to) small RFID tags using radio frequency. Our SICK RFU620 is connected to an Ethernet network on a static IP address, referred to in this lesson as “myIPaddress”. We also have the Connectware running in the same network.

About the SOPAS Protocol

The protocol used to communicate with SICK sensors is the SOPAS command language which utilizes command strings (telegrams) and comes in two protocol formats: CoLa A (Command Language A) with ASCII telegram format, and CoLa B with binary telegram format, not covered here. Often, the terms SOPAS and CoLa are used interchangeably, although strictly speaking we will send the SOPAS commands over the CoLa A protocol format. In this lesson we use the CoLa A format only, as this is supported by our example sensor RFU620. (Some SICK sensors only support CoLa A, others only CoLa B, and yet others support both.)

The SICK configuration software SOPAS ET also utilizes the SOPAS protocol to change settings of a device and retrieve data. The telegrams used for the communication can be monitored with the SOPAS ET’s integrated terminal emulator. Additional documentation with telegram listing and description of your device can be obtained from SICK either on their website or on request.

Identifying SOPAS Commands

For the integration we will need three pieces of information about the SOPAS commands we want to utilize:

1) Interface type: This part can be a bit tricky because the terminology used in device documentation may vary. The telegram listing of your device will probably group telegrams into events, methods and variables, but sometimes you won't find the term "variable", only the descriptions "Read"/"Write".

2) Command name: Every event, method or variable is addressed using a unique string.

3) Parameters: Writing a variable or calling a method may require some parameters.

The three interface types mainly have the following purposes:

- Events can be subscribed to and will provide asynchronous messages

- Methods can be called and will be executed by the device

- Variables can be read or written, for example to adjust the configuration of the device

The telegram listing from your device's documentation is the most important source of this information. Still, to get an idea of how telegrams are structured, let's take a short look at one.

For example, a command string for the RFU620 that we monitored with SOPAS ET's integrated terminal emulator could look like this:

```
sMN TAreadTagData +0 0 0 0 0 1
```

The first token in this string is the command type. For requests, the following values are relevant to us:

| Value | Command type | Interface type |
|---|---|---|
| sRN | Read | variable |
| sWN | Write | variable |
| sMN | Method call | method |
| sEN | Event subscription | event |

The command type is sMN (where M stands for "method call" and N for the naming scheme "by name", as opposed to "by index"). The command name TAreadTagData enables us to read data from an RFID tag. Following the command name there are several space-separated parameters for the method call, for example the ID of the tag to read from. From this command string we could extract the name TAreadTagData and the type method for our Commissioning File, but we don't yet know the meaning of each parameter, so we still have to consult the device's telegram listing.
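The split described above can be sketched in Python: the first token is the command type, the second the command name, and the remaining tokens are parameters whose meaning has to come from the telegram listing. This is a naive whitespace split written for this lesson (it would break on parameters that themselves contain spaces), and the lookup table covers only the four request types from the table above.

```python
from typing import NamedTuple

# Request command types from the table above -> (command type, interface type)
REQUEST_TYPES = {
    "sRN": ("Read", "variable"),
    "sWN": ("Write", "variable"),
    "sMN": ("Method call", "method"),
    "sEN": ("Event subscription", "event"),
}


class Telegram(NamedTuple):
    command_type: str
    name: str
    parameters: list[str]


def parse_telegram(text: str) -> Telegram:
    """Split a CoLa A command string on whitespace into type, name and parameters."""
    tokens = text.split()
    if len(tokens) < 2:
        raise ValueError(f"expected at least a command type and a name: {text!r}")
    return Telegram(tokens[0], tokens[1], tokens[2:])


t = parse_telegram("sMN TAreadTagData +0 0 0 0 0 1")
print(t.command_type, REQUEST_TYPES[t.command_type])  # sMN ('Method call', 'method')
print(t.name, t.parameters)  # TAreadTagData ['+0', '0', '0', '0', '0', '1']
```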

For this lesson we have identified the following commands of our RFID sensor:

| Name | Type | Parameters | Description |
|---|---|---|---|
| QSinv | event | - | Inventory |
| MIStartIn | method | - | Start inventory |
| MIStopIn | method | - | Stop inventory |
| QSIsRn | variable | - | Inventory running |
| HMISetFbLight | method | color, mode | Switch feedback light |

Writing the Commissioning File

The Commissioning File contains all connection and mapping details and is read by the Connectware. To understand the file’s anatomy in detail, please consult the Reference docs. To get started, open a text editor and create a new file, e.g. sopas-example-commissioning-file.yml. The Commissioning File is in the YAML format, perfectly readable for human and machine! We will now go through the process of defining the required sections for this example:

- Description

- Metadata

- Parameters

- Resources

Description and Metadata

These sections contain general information about the Commissioning File. You can give a short description and add a set of metadata. Of the metadata, only the name is required; everything else is optional. We will use the following information for this lesson:

```yaml
description: >
  SICK SOPAS Example Commissioning File for RFU620
  Cybus Learn - How to connect and use a SICK RFID sensor via SOPAS
  https://learn.cybus.io/lessons/how-to-connect-and-use-a-sick-rfid-sensor/

metadata:
  name: SICK SOPAS Example
  version: 1.0.0
  icon: https://www.cybus.io/wp-content/uploads/2019/03/Cybus-logo-Claim-lang.svg
  provider: cybus
  homepage: https://www.cybus.io
```

Parameters

Parameters allow the user to customize Commissioning Files for multiple use cases by referring to them from within the Commissioning File. Each time a Commissioning File is applied or reconfigured in the Connectware, the user is asked to enter custom values for the parameters or to confirm the default values.

```yaml
parameters:
  IP_Address:
    description: IP address of the SICK device
    type: string
    default: mySICKdevice

  Port_Number:
    description: Port on the SICK device. Usually 2111 or 2112.
    type: number
    default: 2112
```

We define the host address and port of the SICK RFU620 as parameters, so their default values are used unless we customize them, for example to connect to a different device.

Resources

In the resources section we declare every resource that is needed for our application. The first resource we need is a connection to the SICK RFID sensor.

Cybus::Connection

```yaml
resources:
  sopasConnection:
    type: Cybus::Connection
    properties:
      protocol: Sopas
      connection:
        host: !ref IP_Address
        port: !ref Port_Number
```

After giving our resource a name (for the connection it is sopasConnection), we define the type of the resource and its type-specific properties. For Cybus::Connection, we declare which protocol and connection parameters we want to use. For details about the different resource types and available protocols, please consult the Reference docs. In the connection definition we refer to the previously declared parameters IP_Address and Port_Number using !ref.

Cybus::Endpoint

The next resources we need are the endpoints that we supply data to or request data from. They are identified by the command names we selected earlier. Let's add one endpoint per SOPAS command to our list of resources.

```yaml
  inventory:
    type: Cybus::Endpoint
    properties:
      protocol: Sopas
      connection: !ref sopasConnection
      subscribe:
        name: QSinv
        type: event

  inventoryStart:
    type: Cybus::Endpoint
    properties:
      protocol: Sopas
      connection: !ref sopasConnection
      write:
        name: MIStartIn
        type: method

  inventoryStop:
    type: Cybus::Endpoint
    properties:
      protocol: Sopas
      connection: !ref sopasConnection
      write:
        name: MIStopIn
        type: method

  inventoryCheck:
    type: Cybus::Endpoint
    properties:
      protocol: Sopas
      connection: !ref sopasConnection
      read:
        name: QSIsRn
        type: variable

  inventoryRunning:
    type: Cybus::Endpoint
    properties:
      protocol: Sopas
      connection: !ref sopasConnection
      subscribe:
        name: QSIsRn
        type: variable

  feedbackLight:
    type: Cybus::Endpoint
    properties:
      protocol: Sopas
      connection: !ref sopasConnection
      write:
        name: HMISetFbLight
        type: method
```

Each resource of type Cybus::Endpoint needs to define the protocol it uses and the connection it belongs to. You can easily refer to the previously declared connection using !ref and its name. To define a SOPAS command, we specify the desired operation (read, write or subscribe) as a property and, under it, the command name and its interface type. The available operations depend on the interface type:

| Type | Operation | Result |
|---|---|---|
| event | read | n/a |
| | write | n/a |
| | subscribe | Subscribes to asynchronous messages |
| method | read | n/a |
| | write | Calls a method |
| | subscribe | Subscribes to the method's answers |
| variable | read | Requests the current value of the variable |
| | write | Writes a value to the variable |
| | subscribe | Subscribes to the results of read requests |

This means our endpoints are now defined as follows:

- inventory subscribes to asynchronous messages of QSinv

- inventoryStart calls the method MIStartIn

- inventoryStop calls the method MIStopIn

- inventoryCheck triggers the request of the variable QSIsRn

- inventoryRunning receives the data from QSIsRn requested by inventoryCheck

- feedbackLight calls the method HMISetFbLight
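Putting the two tables together, the following Python sketch models which SOPAS request each of our endpoints would send to the device, and which endpoints only listen for answers. It is a hypothetical model for understanding the configuration, not Connectware's implementation; in particular, the trailing 1 on the event subscription (subscribe, as opposed to 0 for unsubscribe) is an assumption you should verify in your device's telegram listing.

```python
from typing import Optional

# (interface type, operation) -> command type of the request sent to the device.
# None means the endpoint only listens for answers and sends nothing itself.
# Combinations missing here are "n/a" in the operations table.
OPERATIONS = {
    ("event", "subscribe"): "sEN",
    ("method", "write"): "sMN",
    ("method", "subscribe"): None,
    ("variable", "read"): "sRN",
    ("variable", "write"): "sWN",
    ("variable", "subscribe"): None,
}


def request_telegram(interface: str, operation: str, name: str,
                     params: str = "") -> Optional[str]:
    """Return the SOPAS request an endpoint would send, or None if it only listens."""
    if (interface, operation) not in OPERATIONS:
        raise ValueError(f"{operation!r} is not available for interface type {interface!r}")
    command_type = OPERATIONS[(interface, operation)]
    if command_type is None:
        return None
    if command_type == "sEN":
        params = "1"  # assumption: 1 = subscribe, 0 = unsubscribe; verify in the telegram listing
    return " ".join(part for part in (command_type, name, params) if part)


# Endpoints from our Commissioning File: name -> (interface type, operation, command name)
ENDPOINTS = {
    "inventory": ("event", "subscribe", "QSinv"),
    "inventoryStart": ("method", "write", "MIStartIn"),
    "inventoryStop": ("method", "write", "MIStopIn"),
    "inventoryCheck": ("variable", "read", "QSIsRn"),
    "inventoryRunning": ("variable", "subscribe", "QSIsRn"),
    "feedbackLight": ("method", "write", "HMISetFbLight"),
}

for endpoint, (interface, operation, name) in ENDPOINTS.items():
    print(f"{endpoint}: {request_telegram(interface, operation, name)}")
```

The None entries make the split between inventoryCheck and inventoryRunning visible: one sends the read request, the other only receives its answer.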

The accessMode property is optional for SOPAS endpoints and defaults to 0. If you want write access to specific SOPAS variables that require a higher access mode than the default 0 (zero), look up the suitable accessMode and its password in the SICK device documentation. Connectware supports the following accessMode values:

| Value | Name |
|---|---|
| 0 | Always (Run) |
| 1 | Operator |
| 2 | Maintenance |
| 3 | Authorized Client |
| 4 | Service |
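If you generate Commissioning Files from a script, a small check like the following Python sketch can catch out-of-range values before installation. The enum member names and the function are hypothetical; only the numeric values and the default of 0 come from the text above.

```python
from enum import IntEnum


class AccessMode(IntEnum):
    """accessMode values supported by Connectware for SOPAS endpoints."""
    ALWAYS_RUN = 0
    OPERATOR = 1
    MAINTENANCE = 2
    AUTHORIZED_CLIENT = 3
    SERVICE = 4


def check_access_mode(value: int = 0) -> AccessMode:
    """Validate an accessMode value; an omitted value defaults to 0 (Always/Run)."""
    try:
        return AccessMode(value)
    except ValueError:
        raise ValueError(f"unsupported accessMode {value}; expected 0-4") from None


print(check_access_mode().name)   # ALWAYS_RUN
print(check_access_mode(3).name)  # AUTHORIZED_CLIENT
```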

Cybus::Mapping

At this point we would already be able to read values from the SICK RFID sensor and monitor them in the Connectware Explorer or on the default MQTT topics of our service. To achieve a data flow that satisfies the requirements of our integration, we may need to add a mapping resource that publishes the data on topics matching our MQTT topic structure.

```yaml
  mapping:
    type: Cybus::Mapping
    properties:
      mappings:
        - subscribe:
            endpoint: !ref inventory
          publish:
            topic: 'sick/rfid/inventory'
        - subscribe:
            topic: 'sick/rfid/inventory/start'
          publish:
            endpoint: !ref inventoryStart
        - subscribe:
            topic: 'sick/rfid/inventory/stop'
          publish:
            endpoint: !ref inventoryStop
        - subscribe:
            topic: 'sick/rfid/inventory/check'
          publish:
            endpoint: !ref inventoryCheck
        - subscribe:
            endpoint: !ref inventoryRunning
          publish:
            topic: 'sick/rfid/inventory/running'
        - subscribe:
            topic: 'sick/rfid/light'
          publish:
            endpoint: !ref feedbackLight
```

The mapping defines which endpoint's values are published on which MQTT topic and, in the other direction, which MQTT topic forwards commands to which endpoint. In this example we can publish an empty message on topic sick/rfid/inventory/start to start RFID reading and an empty message on sick/rfid/inventory/stop to stop the reading process. While the reading (also referred to as inventory) is running, we continuously receive messages on topic sick/rfid/inventory containing the results of the inventory. Similarly, when we publish an empty message on sick/rfid/inventory/check while subscribed to sick/rfid/inventory/running, we receive a message indicating whether the inventory is running.

Parameters for variable writes or method calls are sent as a space-separated string. For instance, to invoke the method that controls the device's integrated feedback light, publish the message "1 2" (a color and a mode parameter) on topic sick/rfid/light.
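To summarize how the mapping is used from the client side, the following Python sketch returns the MQTT message an application would publish for each action, with parameters joined into a space-separated string. It does not open an MQTT connection; the action names and the helper are hypothetical, while the topics are those from our mapping.

```python
# Topics from the Cybus::Mapping above that forward commands to endpoints
COMMAND_TOPICS = {
    "start": "sick/rfid/inventory/start",  # -> inventoryStart (MIStartIn)
    "stop": "sick/rfid/inventory/stop",    # -> inventoryStop (MIStopIn)
    "check": "sick/rfid/inventory/check",  # -> inventoryCheck (read QSIsRn)
    "light": "sick/rfid/light",            # -> feedbackLight (HMISetFbLight)
}


def command_message(action: str, *params: object) -> tuple[str, str]:
    """Return (topic, payload); parameters become a space-separated string."""
    topic = COMMAND_TOPICS[action]
    payload = " ".join(str(p) for p in params)  # empty payload if there are no parameters
    return topic, payload


print(command_message("start"))        # ('sick/rfid/inventory/start', '')
print(command_message("light", 1, 2))  # ('sick/rfid/light', '1 2')
```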

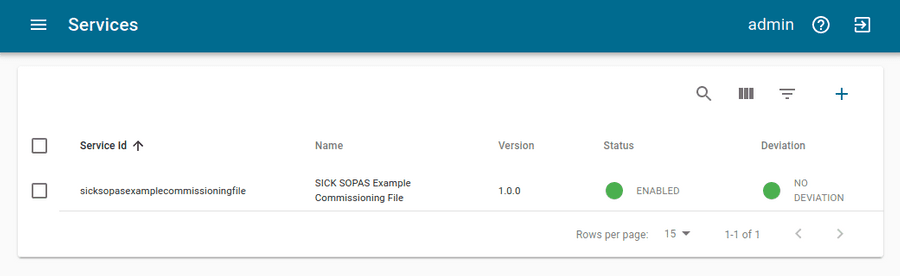

Installing the Commissioning File

You now have the Commissioning File ready for installation. Head over to the Services tab in the Connectware Admin UI and click the (+) button to select and upload the Commissioning File. You will be asked to set values for each member of the parameters section or to confirm the default values. With a properly written Commissioning File, confirming this dialog installs a Service that manages all the resources we just defined: the SOPAS connection, the endpoints collecting data from the device and the mapping controlling where we can access this data. After enabling this Service, you can go on and check whether everything works!

Verifying the Data


Although it is not represented by the Explorer view, on MQTT topics the data is provided in JSON format and applications consuming the data must take care of JSON parsing to pick the desired property. The messages published on MQTT topics contain the keys "timestamp" and "value" like the following:

```json
{
  "timestamp": 1581949802832,
  "value": "sSN QSinv 1 0 8 *noread* 0 0 0 0 0 AC"
}
```

This particular example is an inventory message published on topic sick/rfid/inventory. As you can see, its "value" is a string in SOPAS format containing some values and parameters. This is the original message received from the SICK device, which means you still have to parse it according to the SOPAS specifications. Connectware offers a feature that can help you with this task, and we will take a look at it in the next step.
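On the consumer side this means two parsing steps: decode the JSON payload, then split the SOPAS string in "value". A minimal Python sketch using only the standard library, assuming the payload arrives as bytes from whatever MQTT client you use and that "timestamp" is in milliseconds since the Unix epoch, as the example values suggest. Interpreting the individual inventory fields is left out, because it depends on the device's telegram listing.

```python
import json
from datetime import datetime, timezone


def decode_message(payload: bytes) -> tuple[datetime, list[str]]:
    """Decode a Connectware JSON message and split its SOPAS 'value' into tokens."""
    message = json.loads(payload)
    # Assumption: "timestamp" is given in milliseconds since the Unix epoch
    ts = datetime.fromtimestamp(message["timestamp"] / 1000, tz=timezone.utc)
    return ts, message["value"].split()


payload = b'{"timestamp": 1581949802832, "value": "sSN QSinv 1 0 8 *noread* 0 0 0 0 0 AC"}'
ts, tokens = decode_message(payload)
print(ts.isoformat())  # 2020-02-17T14:30:02.832000+00:00
print(tokens[:2])      # ['sSN', 'QSinv']
```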

Utilizing the Rule Engine

For this lesson we will demonstrate the concept of the Rule Engine with a simpler example than the one above. We will look at the answer to a variable read request, which is more intuitive to read since such messages usually contain only the variable's value. For instance, this is the answer to inventoryCheck on endpoint inventoryRunning:

```json
{
  "timestamp": 1581950874186,
  "value": "sRA QSIsRn 1"
}
```

The SOPAS string contains just the command type (sRA = read answer), the variable name (QSIsRn) and the value 1, indicating that the inventory is running. Essentially we only care about the value: the command type is of no further interest to us, and the variable name and its meaning are implied by the topic (sick/rfid/inventory/running). If this message is so easy for us to interpret, it can't be too complicated for Connectware; we just need the right tools! And that is where the Rule Engine comes into play.

A rule is used to perform powerful transformations of data messages right inside Connectware's data processing. It lets us parse, transform or filter messages based on rules that are simple to define and that we can append to endpoint or mapping resource definitions. We will therefore extend the inventoryRunning resource in our Commissioning File as follows:

```yaml
  inventoryRunning:
    type: Cybus::Endpoint
    properties:
      protocol: Sopas
      connection: !ref sopasConnection
      subscribe:
        name: QSIsRn
        type: variable
      rules:
        - transform:
            expression: |
              {
                "timestamp": timestamp,
                "value": $number($substringAfter(value, "sRA QSIsRn "))
              }
```

We add the property rules and define a rule of type transform by giving the expression, which is a string in the JSONata language. Rules of type transform are direct transformations that generate exactly one output message for every input message. The principle of the expression is that you construct an output JSON object in which you can refer to keys of the input object (the JSON message of this endpoint) and apply a set of functions to modify the content. Please note that the pipe | is not part of the expression but a YAML indicator for multiline strings, which keeps newlines as newlines instead of replacing them with spaces.

We want to keep the form of the input JSON object, so we again define the key "timestamp" and set its value to the timestamp of the input object. We also define the key "value", but this time we do some magic using JSONata functions:

- $number(arg) casts the arg parameter to a number, if possible

- $substringAfter(str, chars) returns the substring after the first occurrence of the character sequence chars in str

The second function reduces the SOPAS string we refer to with value by returning only the characters after the string "sRA QSIsRn " (including the trailing space), in our case a single digit, which the first function then casts to a number. We can apply these functions this way because we know that the string before our value always looks the same. For more information about JSONata and details about the functions, see https://docs.jsonata.org/overview.html.
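To see what the expression does step by step, here is the same transformation written in plain Python. This is only a mental model for checking the logic outside Connectware, not how the Rule Engine executes JSONata; like $substringAfter, the helper returns the whole string when the prefix is not found, and the number conversion is a rough stand-in for $number.

```python
from typing import Union


def substring_after(text: str, chars: str) -> str:
    """Like JSONata $substringAfter: the text after the first occurrence of chars, else text."""
    index = text.find(chars)
    return text if index == -1 else text[index + len(chars):]


def to_number(text: str) -> Union[int, float]:
    """Rough stand-in for JSONata $number on numeric strings (raises ValueError otherwise)."""
    try:
        return int(text)
    except ValueError:
        return float(text)


def transform(message: dict) -> dict:
    """Python equivalent of the transform rule on the inventoryRunning endpoint."""
    return {
        "timestamp": message["timestamp"],
        "value": to_number(substring_after(message["value"], "sRA QSIsRn ")),
    }


print(transform({"timestamp": 1581950874186, "value": "sRA QSIsRn 1"}))
# {'timestamp': 1581950874186, 'value': 1}
```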

After installing the new Service with the modified Commissioning File, we now receive the following answer to an inventoryCheck request (while the inventory is running):

```json
{
  "timestamp": 1581956395025,
  "value": 1
}
```

This value is now ready to use, and applications working with this data do not have to care about any SOPAS parsing!

Connectware supports several more types of rules. To give you an idea of what is possible, here is a quick overview:

- parse: parse any non-JSON data to JSON

- transform: transform payloads into a new structure

- filter: break the message flow if the expression evaluates to a false value

- setContextVars: modify context variables

- cov: Change-on-Value filter that only forwards data when it has changed

- burst: burst mode that allows aggregation of many messages

- stash: stash intermediate states of messages for later reference
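As an illustration of one of these rule types, the following Python sketch implements the idea behind a change-on-value filter: a message is forwarded only if its value differs from the last forwarded one. The class name and the choice to compare only the "value" key are our assumptions; the cov rule's actual options are described in the Connectware Docs.

```python
class ChangeOnValue:
    """Forward a message only when its 'value' differs from the last forwarded one."""

    _UNSET = object()  # sentinel so that the very first message is always forwarded

    def __init__(self) -> None:
        self._last = self._UNSET

    def __call__(self, message: dict):
        value = message["value"]
        if self._last is not self._UNSET and value == self._last:
            return None  # unchanged: drop the message
        self._last = value
        return message


cov = ChangeOnValue()
stream = [{"timestamp": t, "value": v} for t, v in [(1, 1), (2, 1), (3, 0), (4, 0), (5, 1)]]
forwarded = [m for m in stream if cov(m) is not None]
print([m["timestamp"] for m in forwarded])  # [1, 3, 5]
```

Applied to inventoryRunning, such a filter would turn a stream of repeated check answers into messages that arrive only when the inventory starts or stops.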

For more information about rules and the Rule Engine check out the Connectware Docs.

Summary

We started this lesson with a few SOPAS basics and learned which information about the SOPAS interface is required to define the Commissioning File for the integration of our SICK RFU620. Then we created the Commissioning File and specified the SOPAS connection, the endpoints and the MQTT mapping. Utilizing the Commissioning File we installed a Service managing those resources in the Connectware and monitored the data of our device in the Explorer of the Admin UI. And in the end we had a quick look at possibilities of preprocessing data using the Rule Engine to get ready-to-use values for our application.

Where to Go from Here

Connectware offers powerful features to build and deploy applications for gathering, filtering, forwarding, monitoring, displaying, buffering and otherwise processing data. Why not build a dashboard, for instance? For guides, check out more of Cybus Learn.

Prerequisites

This lesson explains how to connect and use an MQTT client with Cybus Connectware. To understand the basic concepts of Connectware, check out the Technical Overview lesson. To follow along with the example, you will also need a running instance of Connectware. If you don't have that, learn Installing Connectware. Finally, this article is all about communication with MQTT clients, so in the unlikely case that you have never heard of MQTT, head over to our MQTT Basics lesson.

Introduction

This article teaches you how to integrate MQTT clients. In more detail, the following topics are covered:

- Creating client credentials and permissions

- Using the client registration feature

- Establishing connection with a client

- Publishing data

- Subscribing data

Selecting the Tools

In this lesson we will utilize a couple of MQTT clients, each fulfilling a different purpose and therefore all having their right to exist.

Mosquitto

Mosquitto is an open source message broker which also comes with some handy client utilities. Once installed on your system it provides the mosquitto_pub and mosquitto_sub command line MQTT clients which you can use to perform testing or troubleshooting, carried out manually or scripted.

MQTT Explorer

MQTT Explorer is an open-source MQTT client that provides a graphical user interface to communicate with MQTT brokers. It offers convenient ways of configuring and establishing connections, creating subscriptions or publishing on topics. The program presents all data clearly in well-organized sections and also lets the user select which data should appear on the monitor, be hidden or be dumped to a file. Additionally, it provides tools to decode message payloads, execute scripts and track the broker status.

Workbench

The Workbench is part of Cybus Connectware. It is a flow-based, visual programming tool running a Node-RED instance to create data flows and data pre-processing at the Connectware level. One way to access data on Connectware from the Workbench is via MQTT. The Workbench provides publish and subscribe nodes which serve as data sources or sinks in the modeled data flow and allow users to build applications for prototyping or debugging. It also offers many more useful functions and nodes, for instance for creating dashboards.

Configuring Connectware

To connect users to Connectware, they need credentials for authorization. There are two ways to create credentials:

- Via the Edit User dialog.

- Via the client registration feature.

Creating Client Credentials and Permissions

- On the navigation panel, click User.

- Click Add User.

- In the Create User dialog, enter the Username and Password. For this example, we create the following users. The user for our Mosquitto client will be created in the following section using the client registration feature.

- Before a user can access an MQTT topic, the user must be granted read, write, or readWrite permissions. These are equivalent to subscribe, publish, or subscribe and publish. In the Users and Roles list, click the user for which you want to add permissions.

- In the Edit User dialog, click Advanced Mode.

- Click the + icon to open the Add Permissions panel.

- Click MQTT.

- In the Topic field, enter clients/#. This gives the selected user access to the clients topic. The wildcard # gives access to any topic that is hierarchically below clients, for example clients/status/active.

- Click Add.

- Click Update.

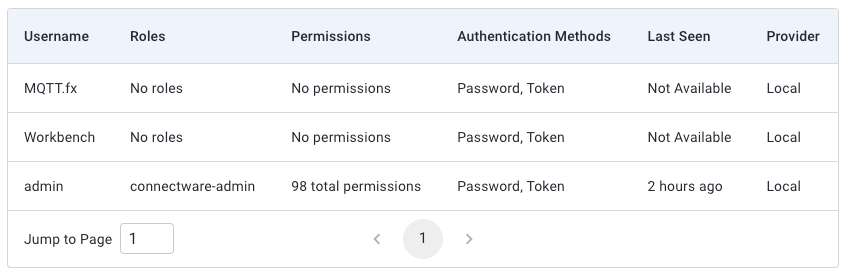
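The clients/# permission above relies on MQTT topic wildcards, which work the same way in subscriptions: + matches exactly one topic level, and # matches the current level and everything below it, including the parent topic itself. Before granting a broad permission, it can help to check a filter against a few topics. A minimal Python sketch of this matching (the function name is ours; this is not Connectware's own permission check):

```python
def topic_matches(topic_filter: str, topic: str) -> bool:
    """Return True if an MQTT topic matches a filter that may contain + and # wildcards."""
    filter_levels = topic_filter.split("/")
    topic_levels = topic.split("/")
    # Filters starting with a wildcard do not match topics starting with '$' (e.g. $SYS/...)
    if topic.startswith("$") and filter_levels[0] in ("+", "#"):
        return False
    for i, level in enumerate(filter_levels):
        if level == "#":
            # '#' is only valid as the last level; it matches the parent level and all below
            return i == len(filter_levels) - 1
        if i >= len(topic_levels):
            return False  # filter is longer than the topic
        if level != "+" and level != topic_levels[i]:
            return False  # '+' matches any single level; anything else must match exactly
    return len(filter_levels) == len(topic_levels)


for t in ["clients", "clients/workbench", "clients/status/active", "sick/rfid/inventory"]:
    print(t, topic_matches("clients/#", t))
```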

Using the Client Registration Feature

The second way of creating credentials for a user is the client registry process (also referred to as self registration). There are two variants of this process: The implicit form works with any MQTT client while the explicit form is for clients that use the REST API. In this lesson we will only look at the implicit variant. If you want to learn more on self registration and both of its forms, please consult the Reference docs.

We will now step through the process of implicit client registration. At first we need to activate the self registration: In the Connectware Admin UI navigate to User > Client Registry and click Unlock. This will temporarily unlock the self registration for clients. The next step is that the client tries to connect to Connectware with a username that does not yet exist.

We use the mosquitto_sub client for this, which is especially easy because a single command is enough. Assuming we are running Connectware on our local machine and want to register the user Mosquitto with the password 123456 (you should of course choose a secure password), the command is:

mosquitto_sub -h localhost -p 1883 -u Mosquitto -P 123456 -t clients

The topic set with option -t is not relevant here; the option is just required to issue the command. We will explain this command in detail in the section about subscribing data.

The command exits with the message "Connection error: Connection Refused: not authorized.". Shortly afterwards, we can look at the Client Registry in the Admin UI and see the connection attempt of our Mosquitto client.

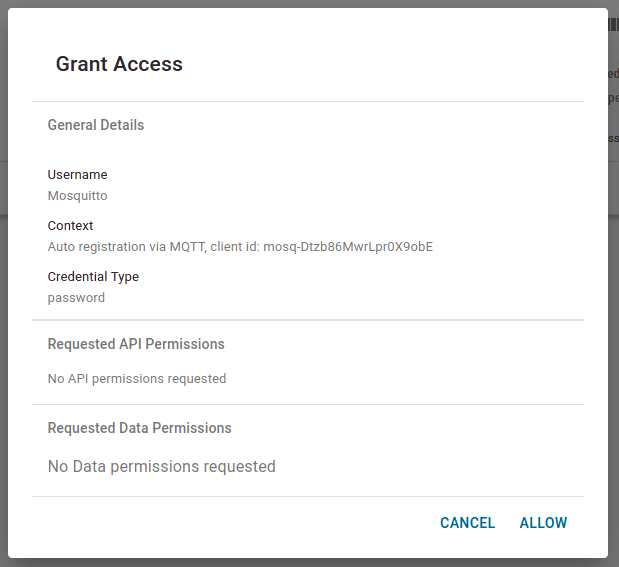

Clicking an entry in the list opens the Grant Access dialog. This summarizes the details of the request and the permissions requested. You can only request permissions using the explicit method. To grant access to this client and create a user, click Allow.

On the navigation bar, click User to see the newly created user „Mosquitto“. Since we are using the implicit method we now need to manually add permissions to the accessible topics as we did before.

Establishing Connection with a Client

We are now ready to connect our clients to Connectware via MQTT.

Mosquitto

We already made use of Mosquitto's client functions in our self-registration example. That example also showed that we do not need to establish a connection explicitly before subscribing or publishing: this step is included in the mosquitto_sub and mosquitto_pub commands, and we supply the credentials when issuing them.

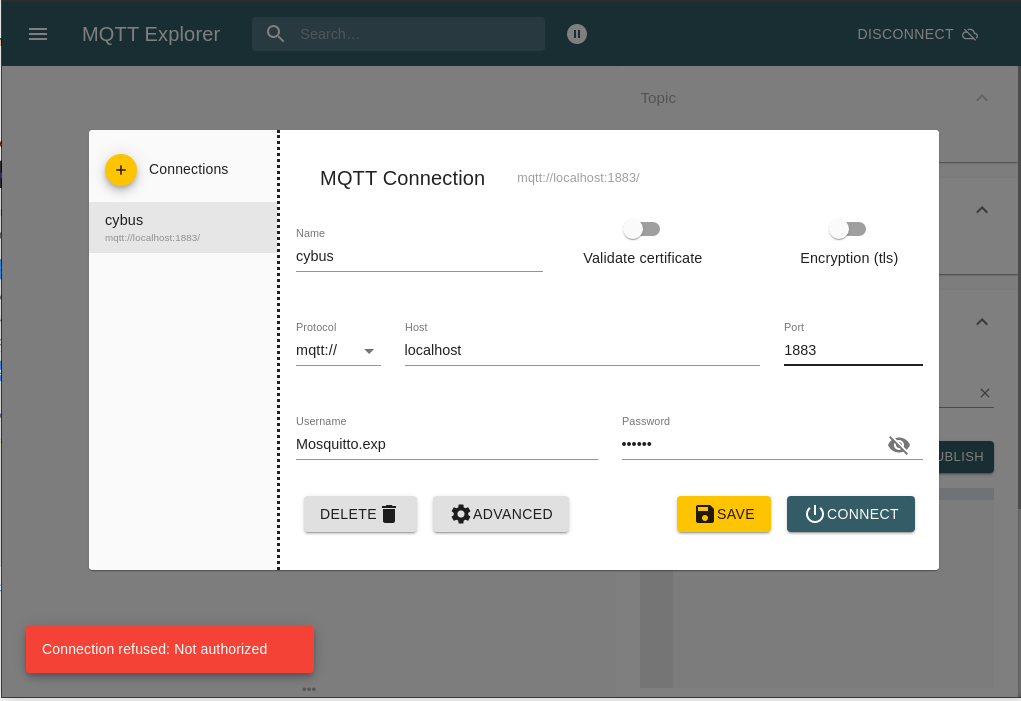

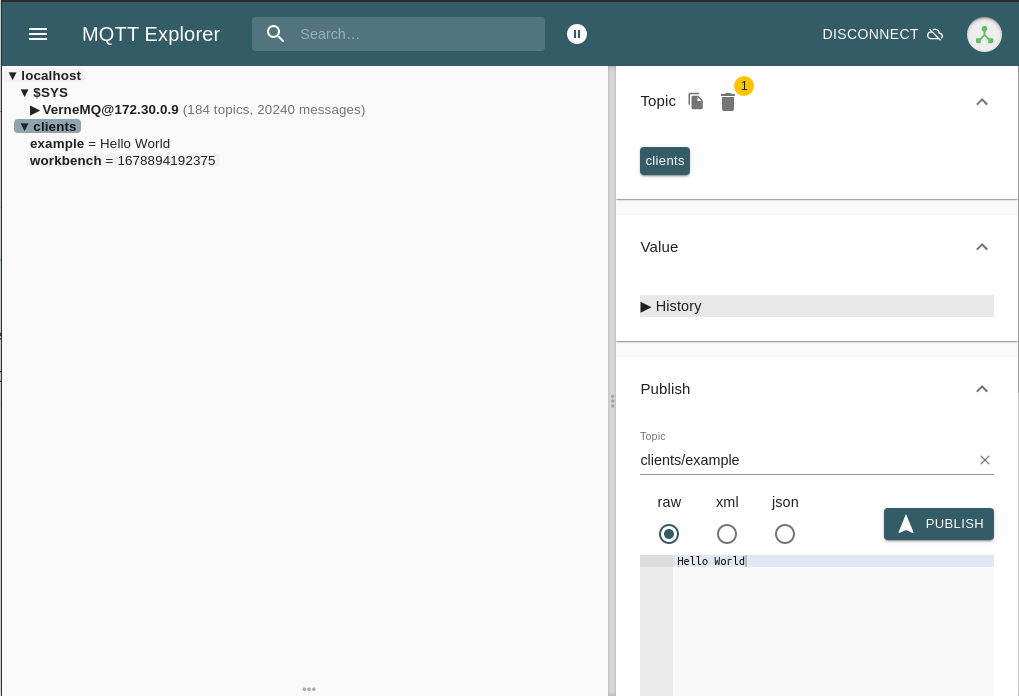

MQTT Explorer

Using MQTT Explorer, we need to configure the connection.

First we create a new connection by clicking the plus (+) in the top left corner. We name the new profile "cybus" and define the host as "localhost" (replace this with the address you are running your Connectware on). Then fill out the user credentials. All other settings can keep their defaults for this example. Since MQTT Explorer is another client, we also need to register it via the client registry; otherwise we get an error, as shown in the bottom left corner.

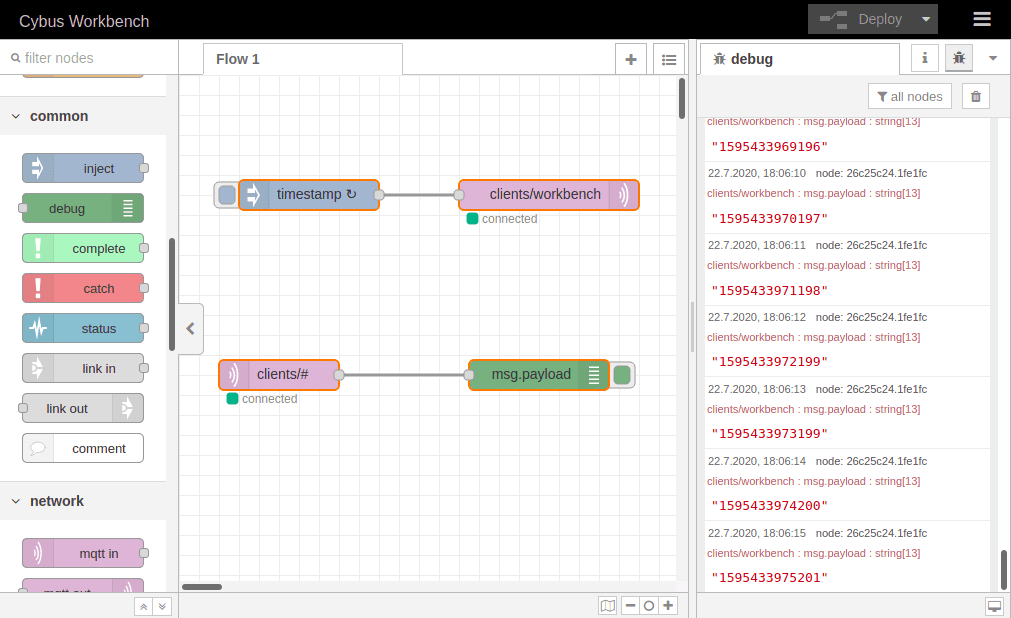

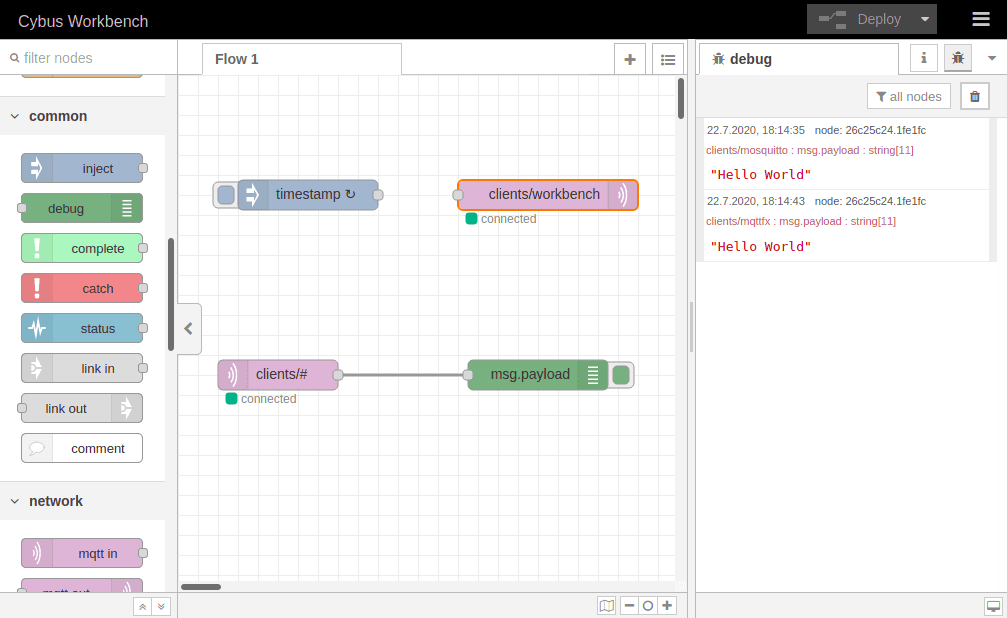

Workbench

The Workbench can be accessed through the Admin UI by clicking Workbench in the navigation bar. A new tab opens, showing the most recently edited flow of our Workbench. If it is empty, you can use it; otherwise click the (+) button to the upper right of the currently shown flow. We won't go into the details of the Workbench's functions and concepts in this lesson, but we will demonstrate the simplest way of monitoring data with it.

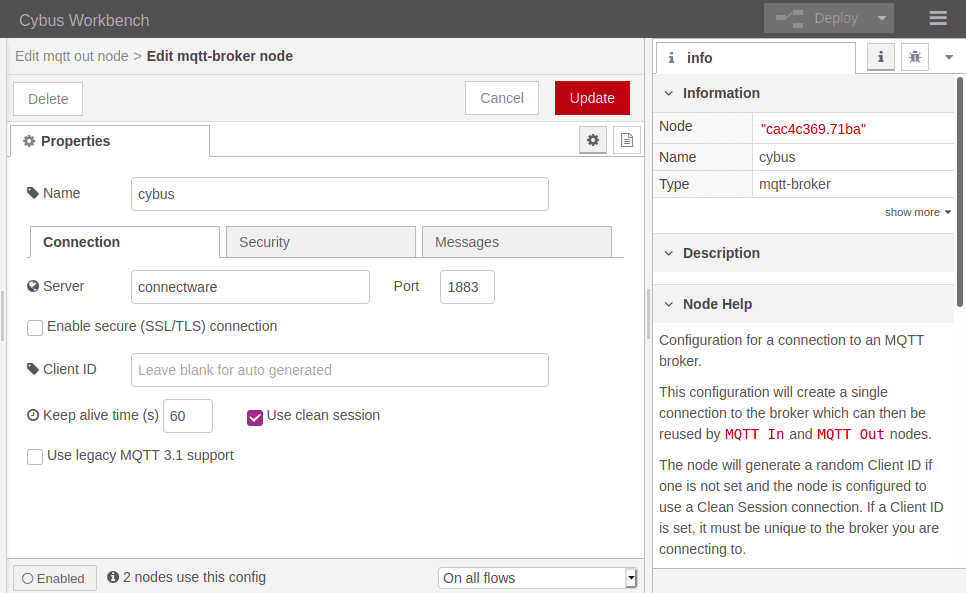

Take a look at the left bar: there you see the inventory of available nodes. Scrolling down, you will find the network section containing, among others, two MQTT nodes. These so-called mqtt in and mqtt out nodes represent the subscribe and publish operations. Drag the mqtt out node and drop it on your flow; double-clicking it shows its properties. In the drop-down menu of the Server property, you can add a new MQTT broker by clicking the pencil symbol next to it.

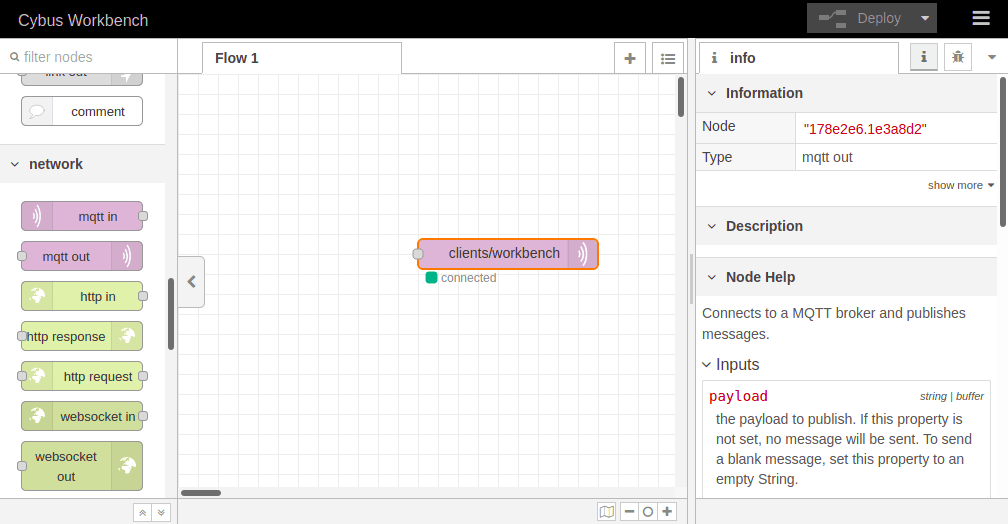

We name this new connection "cybus" and define the server address as connectware. This is how the Workbench addresses the locally running Connectware; you won't be able to reach it using localhost! Now switch to the Security tab and fill in username and password. Save your changes by clicking Add in the upper right corner. Make sure the configuration you just created is selected for the Server property. For the property Topic we choose the topic we selected for our clients: clients/workbench. The other properties keep their default settings. Click Done in the upper right corner.

To apply the changes we just made click the button Deploy in the upper right corner. This will reset our flow and start it with the latest settings. You will now see that the MQTT node is displayed as „connected“, assuming everything was configured correctly. We successfully established a connection between the Workbench and our Connectware.

Publishing Data

Publishing data makes it available. It does not necessarily mean that someone actually receives it, but anyone with permission to subscribe to the topic in question could.

Mosquitto

With Mosquitto, publishing data takes a single command line using mosquitto_pub. For this example we connect to Connectware using the following options:

- Host to connect to: -h localhost

- Port to connect to: -p 1883

- Username: -u Mosquitto

- Password: -P 123456

- Topic to publish on: -t clients/mosquitto

- Message: -m "Hello World"

mosquitto_pub -h localhost -p 1883 -u Mosquitto -P 123456 -t clients/mosquitto -m "Hello World"

If successful, the command completes without any feedback message. We cannot yet confirm whether our message arrived where we expected it, but we will validate this later in the section about subscribing data.
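Because the Mosquitto utilities are also meant for scripted use, the same call can be driven from a program. A minimal Python sketch using only the standard library, assuming mosquitto_pub is installed and on the PATH; the function names are ours. Passing the arguments as a list avoids shell quoting, so "Hello World" reaches mosquitto_pub as a single message argument.

```python
import subprocess


def mosquitto_pub_args(host: str, port: int, user: str, password: str,
                       topic: str, message: str) -> list[str]:
    """Build the mosquitto_pub command line from the options listed above."""
    return ["mosquitto_pub",
            "-h", host, "-p", str(port),
            "-u", user, "-P", password,
            "-t", topic, "-m", message]


def publish(host: str, port: int, user: str, password: str,
            topic: str, message: str) -> None:
    """Run mosquitto_pub; check=True raises CalledProcessError on a non-zero exit code."""
    subprocess.run(mosquitto_pub_args(host, port, user, password, topic, message),
                   check=True)


# Example (requires mosquitto_pub on the PATH and a reachable Connectware):
# publish("localhost", 1883, "Mosquitto", "123456", "clients/mosquitto", "Hello World")
```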

MQTT Explorer

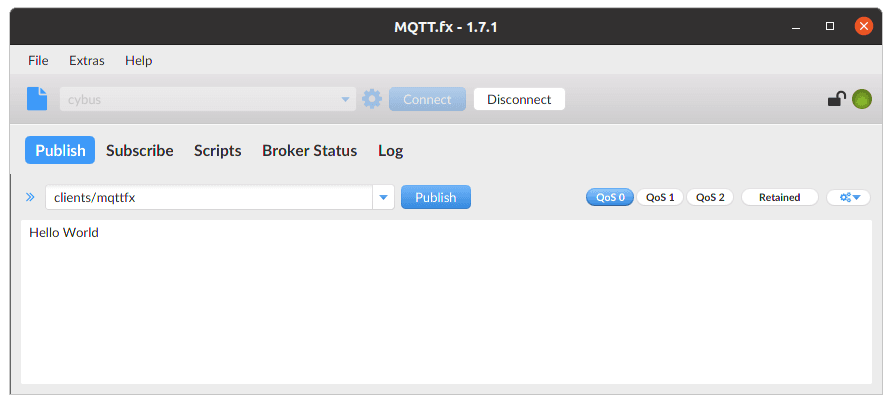

Publishing with MQTT Explorer is even simpler once the connection is configured and established. As soon as we are connected, we are ready to shoot some messages.

Switch to the Publish section. In the input line next to the Publish-button you define the topic you want to publish to. We will go for the topic clients/mqttfx. Then you click the big, blank box below and type a message, e.g. „Hello World“. To publish this message click Publish.

Again we have no feedback if our message arrived but we will take care of this in the Subscribing data section.

Configure the Workbench as Data Source

We already added a publishing node to our flow when we established a connection to Connectware and configured it to publish on clients/workbench. With this in place, the Workbench is ready to publish, but there are no messages yet.

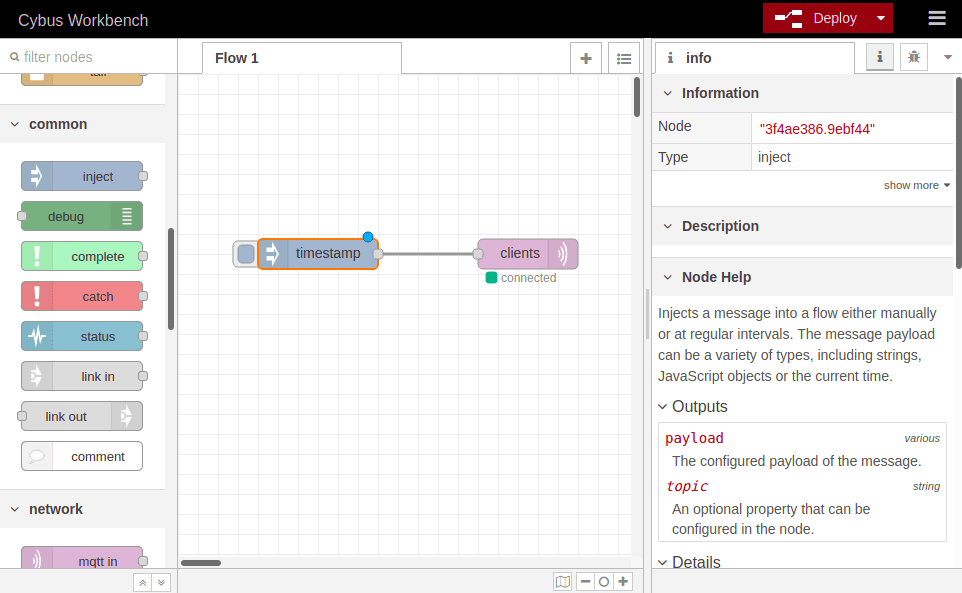

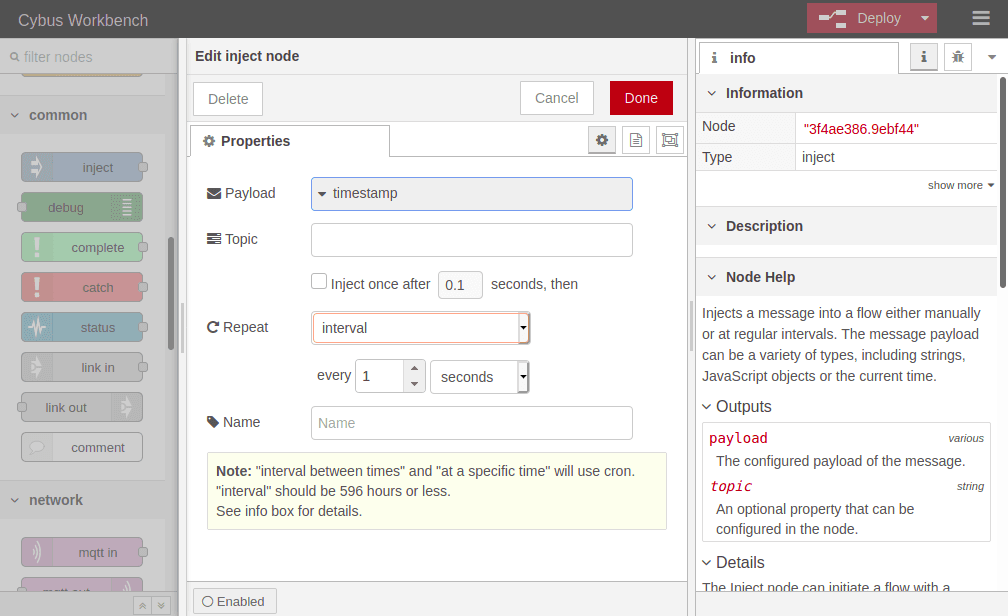

At this point we want to create a data source in our flow that periodically publishes data through the mqtt out node. We simply drag an inject node from the nodes bar into our flow and drop it to the left of the mqtt out node. Now we can draw a connection from the small gray socket of one node to the other.

Double-clicking the inject node, which is now labeled "timestamp", shows its properties. We can define the payload, which we keep as "timestamp"; a topic to publish to, which we leave blank because we defined the topic in our MQTT node; and whether and how often the message should be injected into our flow repeatedly. If we do not define repetition, a message is injected only when we click the button on the left of the inject node in our flow.

We set repeating to an „interval“ of every second and confirm the settings by clicking Done in the upper right corner. Now we have to Deploy again to apply our changes. We still have no confirmation that everything works out but we are confident enough to go on and finally subscribe to the data we are generating.
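In other words, the inject node now acts as a periodic data source: once per second it emits the current time as milliseconds since the Unix epoch, which is what its "timestamp" payload contains. A rough Python equivalent with a hypothetical function name; the clock and sleep functions are parameters only so that the loop can be tested with a fake clock instead of waiting.

```python
import time
from typing import Callable, Iterator


def timestamp_source(interval_s: float = 1.0,
                     clock: Callable[[], float] = time.time,
                     sleep: Callable[[float], None] = time.sleep) -> Iterator[int]:
    """Yield the current time in ms since the Unix epoch, then wait interval_s seconds."""
    while True:
        yield int(clock() * 1000)
        sleep(interval_s)
```

For example, `for ts in itertools.islice(timestamp_source(), 3): print(ts)` (after `import itertools`) prints three timestamps about one second apart. Because it sleeps a fixed time after each value, the loop drifts slightly over long runs, which is acceptable for a test data source.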

Subscribing Data

Subscribing data means that the broker provides the client with new data as soon as it is available. We still have no direct connection to the publisher; the broker manages the data flow.

Mosquitto

We already used mosquitto_sub to self register our client as a user. We could have also used mosquitto_pub for this but now we want to make use of the original purpose of mosquitto_sub: Subscribing to an MQTT broker. Not any broker but the broker of our Connectware.

We are using the following options:

- Host to connect to: -h localhost

- Port to connect to: -p 1883

- Username: -u Mosquitto

- Password: -P 123456

- Topic: -t clients/workbench

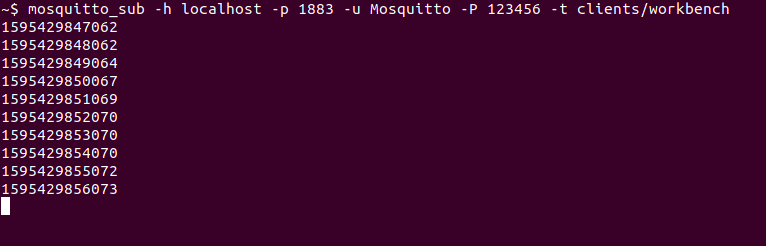

mosquitto_sub -h localhost -p 1883 -u Mosquitto -P 123456 -t clients/workbench

Again make sure that you have specified the address of your Connectware as host which does not necessarily have to be your local machine! We will subscribe to the topic clients/workbench where we are publishing the messages from our Workbench.

And Tada! The mosquitto client now shows us every new message published on this topic – in this case the timestamps generated by our Workbench.

MQTT Explorer

Having already established the connection to our Connectware, subscribing to a topic is a piece of cake. On the left side under localhost, we can see the messages coming in.

There you see all the data flying in, neatly presented. You can also add more topics to be monitored simultaneously and manage which ones are shown to you or dumped to a file.

Workbench

Eventually we will utilize the Workbench to monitor our data. But why just monitor it? The Workbench has a lot more to offer and exploring it will quickly give you a hint which possibilities lie ahead. But we will focus on that in another lesson.

First we add an mqtt in node to the flow where we already created the data generator. Double-clicking it, we choose the previously configured server "cybus" and the topic clients/#. The wildcard # means that we subscribe to every topic under clients. Be careful with wildcards: you could accidentally subscribe to a lot of topics, which could cause heavy traffic and an output that jumbles messages from all those topics together. Confirm the node properties by clicking Done and add a debug node to the flow. Draw a connection from the mqtt in node to the debug node and Deploy the flow.

Click the bug symbol in the upper right corner of the information bar on the right. We see there are already messages coming in: they are the timestamp messages created in the very same flow, taking a detour via the MQTT broker before plopping into our debug window. They are messing up the show, so let us just cut the connection between the "timestamp" node and the publish node by selecting the connection line and pressing Del, then Deploy the flow one more time.

Now that we have restored the quiet, let us finally validate whether publishing with mosquitto_pub and MQTT Explorer actually works. Issue the mosquitto_pub command again, then open MQTT Explorer and publish a message as we did in the previous section.

Et voilà! We can see two "Hello World" messages in our debug window. The topics they were published on are shown in small red text right above each message text. From these topics we learn that one message came from Mosquitto and the other from MQTT.fx.

Summary

This was quite a journey! We started in the realms of Connectware, created credentials and permissions, and learned about the magic of self registration and the trinity of MQTT clients. We went on to establish connections between clients and brokers and sent messages to unobserved topics, unsure whether they would ever reach their destinations. But we saw the light when we discovered the art of subscribing and realized that our troubles had not been in vain. In the end, we received the relieving and liberating call of programmers: "Hello World"!

Where to Go from Here

Connectware offers powerful features to build and deploy applications for gathering, filtering, forwarding, monitoring, displaying, buffering, and all kinds of data processing. Why not build a dashboard, for instance? For guides, check out more of Cybus Learn.

Prerequisites

We assume that you are familiar with the following topics:

- Service Basics Lesson

- Siemens SIMATIC S7 PLCs

- YAML file format

- MQTT

Introduction

This lesson goes through the steps required to connect to and use your Siemens SIMATIC S7 device with Cybus Connectware. By following this tutorial, you will be able to connect your own SIMATIC S7 device to Connectware with ease!

The SIMATIC S7 is a product line of PLCs by Siemens that is widely used in industrial automation. The S7 can connect several sensors and actuators through digital or analog I/Os, which can be extended modularly.

Data on the PLC can be read and written through the S7 communication services, which are based on ISO-on-TCP (RFC 1006). In this setup, the PLC acts as a server. Communication partners can then access PLC data without having to configure the incoming connections during PLC programming. We will use this feature to access the S7 from Connectware.
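ISO-on-TCP as defined in RFC 1006 wraps every message in a 4-byte TPKT header. The header consists of a version byte fixed at 3, a reserved byte, and a 16-bit big-endian length that includes the header itself. The following Python sketch only illustrates that framing. The helper names are hypothetical and the payload bytes are arbitrary. The ISO transport and S7 layers carried inside the packet are out of scope, and Connectware handles all of this for you.

```python
import struct

TPKT_VERSION = 3        # RFC 1006: the version field is always 3
ISO_ON_TCP_PORT = 102   # well-known TCP port for ISO-on-TCP (the S7 port)
TPKT_HEADER = struct.Struct(">BBH")  # version, reserved, total length (big-endian)


def wrap_tpkt(payload: bytes) -> bytes:
    """Prefix a payload with a TPKT header (length includes the 4 header bytes)."""
    total_length = TPKT_HEADER.size + len(payload)
    if total_length > 0xFFFF:
        raise ValueError("payload too large for a single TPKT packet")
    return TPKT_HEADER.pack(TPKT_VERSION, 0, total_length) + payload


def unwrap_tpkt(frame: bytes) -> bytes:
    """Validate a complete TPKT frame and return the payload it carries."""
    if len(frame) < TPKT_HEADER.size:
        raise ValueError("frame shorter than a TPKT header")
    version, _reserved, total_length = TPKT_HEADER.unpack_from(frame)
    if version != TPKT_VERSION:
        raise ValueError(f"unexpected TPKT version {version}")
    if total_length != len(frame):
        raise ValueError("length field does not match frame size")
    return frame[TPKT_HEADER.size:]


if __name__ == "__main__":
    frame = wrap_tpkt(b"\x02\xf0\x80")  # arbitrary example payload
    print(frame.hex())  # 0300000702f080
    print(unwrap_tpkt(frame) == b"\x02\xf0\x80")  # True
```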

Setup

To follow this lesson, you need a computer, a running Cybus Connectware instance, and one of the following:

A Siemens S7 PLC and access to STEP7 (TIA Portal)

- The S7 PLC needs to be configured using STEP7 in order to work correctly. The following configuration settings on your S7 device are needed:

- To activate the S7 Communication Services, you need to enable PUT/GET access in the PLC settings. Keep in mind that this also opens up access to the controller for other applications.

- To access data from data blocks you need to disable “Optimized Block Access” in data block attributes.

Conpot PLC Emulator

- Conpot can be used to emulate a Siemens S7 PLC.

Service Commissioning File Example

You can download the service commissioning file that we use in this example from the Cybus GitHub repository.

Writing the Commissioning File

The YAML-based commissioning file tells Cybus Connectware which type of device to connect, how to configure the connection, and which endpoints should be accessed. Details on commissioning files can be found in the Reference docs. For now, let's focus on the three main resources in the file:

- Cybus::Connection

- Cybus::Endpoint

- Cybus::Mapping

In the following sections, we will go through the three resources and create an example commissioning file in which we connect to an S7 device and enable read/write access to a data endpoint.

Cybus::Connection

Inside the resources section of the commissioning file, we define a connection to the device we want to use. All the information Connectware needs to talk to the device is specified here, for example the protocol to be used and the IP address. Our connection resource could look like this:

```yaml
# ----------------------------------------------------------------------------#
# Connection Resource - S7 Protocol
# ----------------------------------------------------------------------------#
s7Connection:
  type: Cybus::Connection
  properties:
    protocol: S7
    connection:
      host: 192.168.10.60
      port: 102
      rack: 0
      slot: 1
      pollInterval: 1000
```

We use the Cybus::Connection resource type, which tells Connectware that we want to create a new device connection. To define the kind of connection, we specify the S7 protocol. To establish the connection, we also need to specify the connection settings. Here, we connect to our S7 device at the given host IP address, port, rack, and slot number.

Furthermore, we set pollInterval to 1000 milliseconds, so the data is read once per second.
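Before commissioning, it can help to verify that the PLC is reachable at all. The following Python sketch uses a hypothetical helper, not a Connectware feature. It only checks whether a TCP connection to the host and port from the connection resource can be opened. It does not check rack, slot, or the PUT/GET setting.

```python
import socket


def is_port_reachable(host: str, port: int = 102, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, DNS errors and unreachable hosts.
        return False


if __name__ == "__main__":
    # Host and port taken from the example connection resource.
    print(is_port_reachable("192.168.10.60", 102))
```

If this returns False, check the network path and the PLC's IP settings before looking at the commissioning file.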

Cybus::Endpoint

We want to access certain data elements on the PLC to either read data from or write data to the device. Similar to the Connection section of the commissioning file, we define an Endpoint section:

```yaml
# ----------------------------------------------------------------------------#
# Endpoint Resource - S7 Protocol
# ----------------------------------------------------------------------------#
s7EndpointDB1000:
  type: Cybus::Endpoint
  properties:
    protocol: S7
    connection: !ref s7Connection
    subscribe:
      address: DB10,X0.0
```

We define an endpoint, which represents a single data element on the device, using the Cybus::Endpoint resource. As in the connection section, we have to define the protocol this endpoint relies on, namely S7. Every endpoint needs a connection it belongs to. We therefore add a reference to the connection created earlier, using !ref followed by the name of the connection resource. Finally, we define which access operation we want to perform on the data element and the absolute memory address at which it is stored. Here we use subscribe, which reads the data from the device at the interval defined in the referenced connection resource.

The data element accessed here is located in data block 10 (DB10). It is a single boolean (X) at byte 0, bit 0 (0.0). To learn more about how addressing works for the S7 protocol, please refer to our documentation.
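To make the address notation concrete, here is a Python sketch. It splits an address such as DB10,X0.0 into its parts and reads the addressed bit from a raw copy of the data block. The helper names and the accepted pattern are assumptions for illustration only. The Connectware documentation defines which data types and notations are actually supported.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Assumed pattern: DB<block>,<type letters><byte offset>[.<bit offset 0-7>]
_ADDRESS_RE = re.compile(r"^DB(\d+),([A-Z]+)(\d+)(?:\.([0-7]))?$")


@dataclass(frozen=True)
class S7Address:
    db: int             # data block number, e.g. 10 for DB10
    data_type: str      # e.g. "X" for a single boolean
    byte: int           # byte offset inside the data block
    bit: Optional[int]  # bit offset (0-7), only used for booleans


def parse_s7_address(address: str) -> S7Address:
    """Split an address like 'DB10,X0.0' into its components (hypothetical helper)."""
    match = _ADDRESS_RE.match(address.strip())
    if match is None:
        raise ValueError(f"unsupported address: {address!r}")
    db, data_type, byte, bit = match.groups()
    if (data_type == "X") != (bit is not None):
        raise ValueError("a bit offset is required for X and only allowed for X")
    return S7Address(int(db), data_type, int(byte), None if bit is None else int(bit))


def read_bool(block: bytes, address: S7Address) -> bool:
    """Read a boolean from a raw data block buffer (bit 0 = least significant bit)."""
    if address.data_type != "X" or address.bit is None:
        raise ValueError("not a boolean (X) address")
    return bool((block[address.byte] >> address.bit) & 1)


if __name__ == "__main__":
    addr = parse_s7_address("DB10,X0.0")
    print(addr)  # S7Address(db=10, data_type='X', byte=0, bit=0)
    print(read_bool(bytes([0b0000_0001]), addr))  # True
```

In S7 bit numbering, bit 0 is the least significant bit of the byte. X0.0 is therefore true whenever byte 0 holds an odd value.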

Cybus::Mapping

Now that we can access our data points on the S7 device, we want to map them to a meaningful MQTT topic. For this, we use the mapping resource. Here is an example:

```yaml
# ----------------------------------------------------------------------------#
# Mapping Resource - S7 Protocol
# ----------------------------------------------------------------------------#
mapping:
  type: Cybus::Mapping
  properties:
    mappings:
      - subscribe:
          endpoint: !ref s7EndpointDB1000
        publish:
          topic: !sub '${Cybus::MqttRoot}/DB1000'
```

Our mapping example transfers the data from the endpoint to a specified MQTT topic. It is important to define both the source of the data and its target. The source is defined with subscribe, where endpoint references the endpoint defined above. The target is defined with publish, where topic is set to the MQTT topic on which we want the data to be published. Just as we used !ref in our endpoint, here we use !sub, which substitutes the variable within ${} with its corresponding string value.

Interim Summary

Putting the three previous sections together, a full commissioning file looks like this:

```yaml
description: >
  S7 Example

metadata:
  name: S7 Device

resources:
  # ----------------------------------------------------------------------------#
  # Connection Resource - S7 Protocol
  # ----------------------------------------------------------------------------#
  s7Connection:
    type: Cybus::Connection
    properties:
      protocol: S7
      connection:
        host: 192.168.10.60
        port: 102
        rack: 0
        slot: 1
        pollInterval: 1000

  # ----------------------------------------------------------------------------#
  # Endpoint Resource - S7 Protocol
  # ----------------------------------------------------------------------------#
  s7EndpointDB1000:
    type: Cybus::Endpoint
    properties:
      protocol: S7
      connection: !ref s7Connection
      subscribe:
        address: DB10,X0.0

  # ----------------------------------------------------------------------------#
  # Mapping Resource - S7 Protocol
  # ----------------------------------------------------------------------------#
  mapping:
    type: Cybus::Mapping
    properties:
      mappings:
        - subscribe:
            endpoint: !ref s7EndpointDB1000
          publish:
            topic: !sub '${Cybus::MqttRoot}/DB1000'
```

Writing Data

Usually we also want to write data to the device. This can easily be accomplished by defining another endpoint where we use write instead of subscribe.

```yaml
s7EndpointDB1000Write:
  type: Cybus::Endpoint
  properties:
    protocol: S7
    connection: !ref s7Connection
    write:
      address: DB10,X0.0
```

We also extend our mappings so that any data published on a specific topic is transferred to the endpoint we just defined.

```yaml
mapping:
  type: Cybus::Mapping
  properties:
    mappings:
      - subscribe:
          endpoint: !ref s7EndpointDB1000
        publish:
          topic: !sub '${Cybus::MqttRoot}/DB1000'
      - subscribe:
          topic: !sub '${Cybus::MqttRoot}/DB1000/set'
        publish:
          endpoint: !ref s7EndpointDB1000Write
```

To actually write a value, we just have to publish it on the given topic. In our case the topic would be services/s7device/DB1000/set and the message has to look like this:

{

"value": true

}

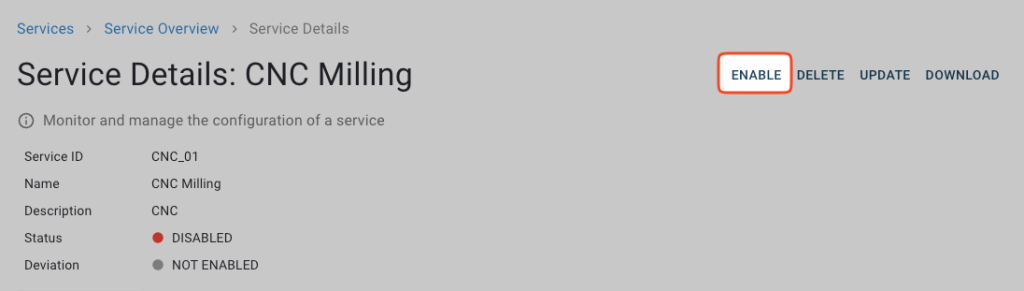
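The payload can also be produced programmatically. This Python sketch uses a hypothetical helper. It serializes the message with json.dumps, which turns Python's True into JSON's true. It then assembles a mosquitto_pub command line with the standard -h, -t, -m, -u and -P options. The host localhost and the optional credentials are placeholders for your Connectware address and MQTT user.

```python
import json
import shlex
from typing import List, Optional


def build_write_command(topic: str, value: bool, host: str = "localhost",
                        username: Optional[str] = None,
                        password: Optional[str] = None) -> List[str]:
    """Build a mosquitto_pub command that publishes {"value": ...} to topic."""
    payload = json.dumps({"value": value})  # True -> {"value": true}
    command = ["mosquitto_pub", "-h", host, "-t", topic, "-m", payload]
    if username is not None:
        command += ["-u", username]
    if password is not None:
        command += ["-P", password]
    return command


if __name__ == "__main__":
    cmd = build_write_command("services/s7device/DB1000/set", True)
    # shlex.join quotes the JSON payload so it can be pasted into a shell.
    print(shlex.join(cmd))
    # mosquitto_pub -h localhost -t services/s7device/DB1000/set -m '{"value": true}'
```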

Commission the Device on Connectware

We are finally ready to connect to our Siemens S7 PLC and use it.

- On the navigation panel, click Services.

- Click Upload Service.

- In the Create Service dialog, click Choose File and select the commissioning file that we just created.

- Click Install to install the service. The status section indicates the health of the service and the resources it defines.

- Once the service is installed, you must enable it. To enable a service, select it in the list and click Enable.

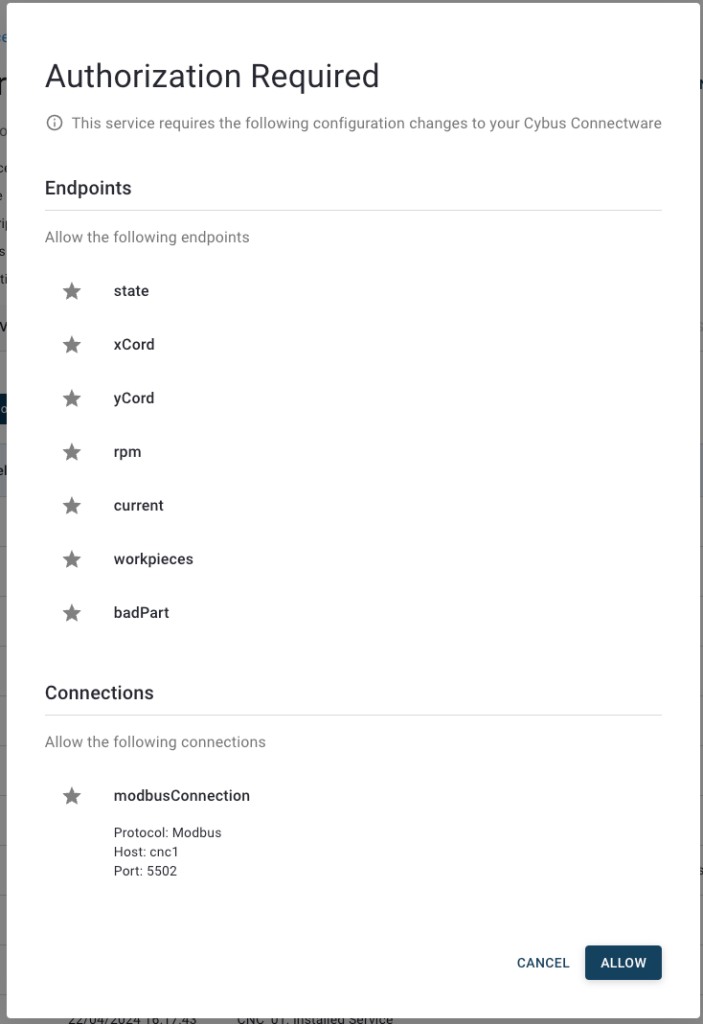

- You must authorize all permissions the service needs to operate. Make sure that all permissions are as intended and click Allow to enable the service.

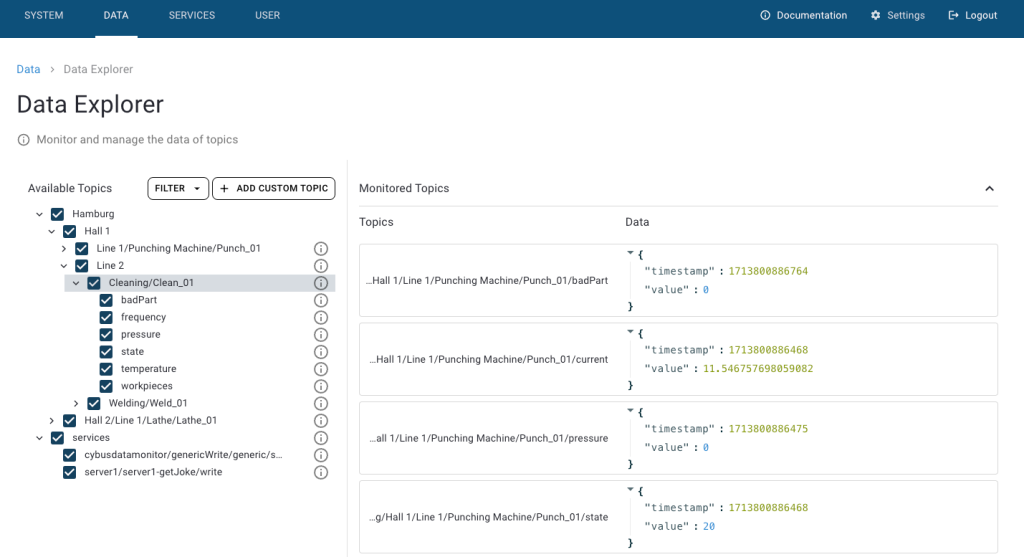

- To see the incoming data, click Data on the navigation panel. In the Data Explorer list, you can see the MQTT topic we specified in the commissioning file. To see data from a specific topic/endpoint, navigate to the corresponding topic in the Available Topics list. This makes Connectware subscribe to the topic and display its values under Monitored Topics. In the History section at the bottom, you can monitor all data received since you subscribed to the topics.

- To see a value change, you can use any MQTT client (e.g. Mosquitto) to publish a new value to the corresponding topic where data is to be written, as described in the Writing Data section.

Summary

In this Cybus Learn article, we learned how to connect and use an S7 device on Connectware. See the Example Project Repo for the complete commissioning file. If you want to keep going and connect your own S7 device with custom addressing, please visit the Reference docs to get to know all the Connectware S7 protocol features.

Going Further

A good point to go further from here is the Service Basics Lesson, which covers how to use data from your S7 device.

Disclaimer:

Step7, TIA Portal, S7, S7-1200, Sinamics are trademarks of Siemens AG

Prerequisites

This guide helps you connect to an OPC UA server using Connectware. It assumes that you understand the fundamental Connectware concepts; for an introduction, check out the Technical Overview. To follow the example, you need a running Connectware instance. If you don't have one, see Installing Cybus Connectware. Although we focus on OPC UA here, you should also be familiar with MQTT. If in doubt, head over to MQTT Basics.

Introduction

You will learn to integrate OPC UA servers into your Connectware use case. Everything you need to get started will be covered in the following topics:

- Browsing the OPC UA address space

- Identifying OPC UA datapoints

- Mapping OPC UA data to MQTT

- Verifying data in the Connectware Explorer

Service YAMLs used in this lesson are available on GitHub.

Selecting the Tools

OPC UA Server

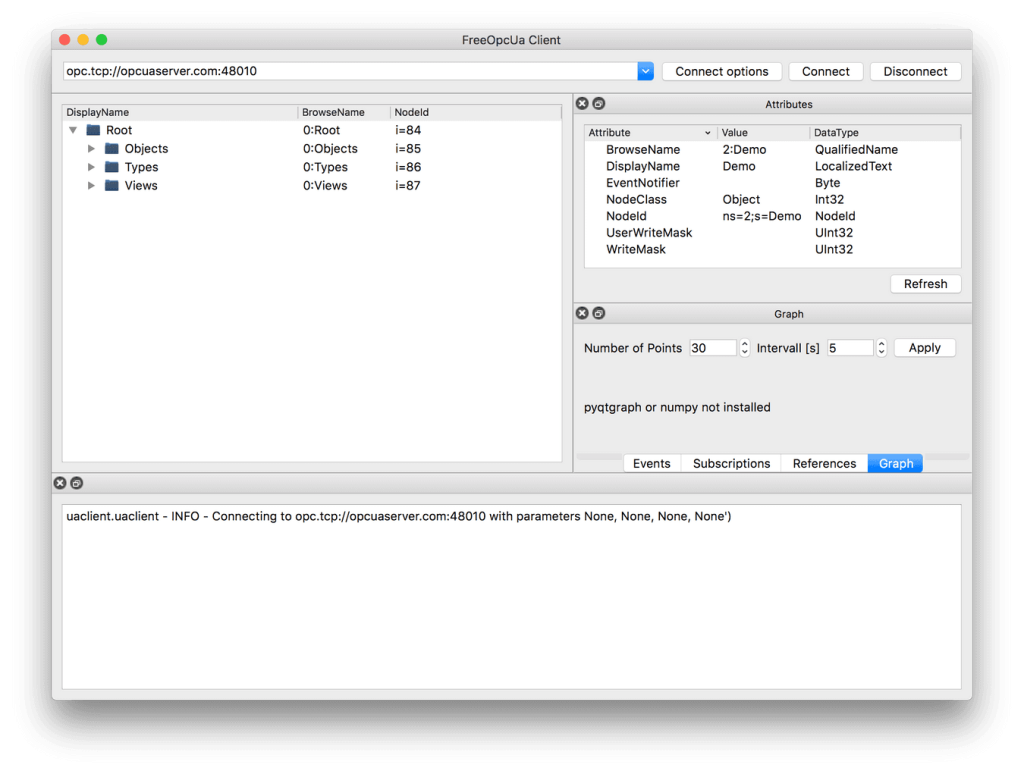

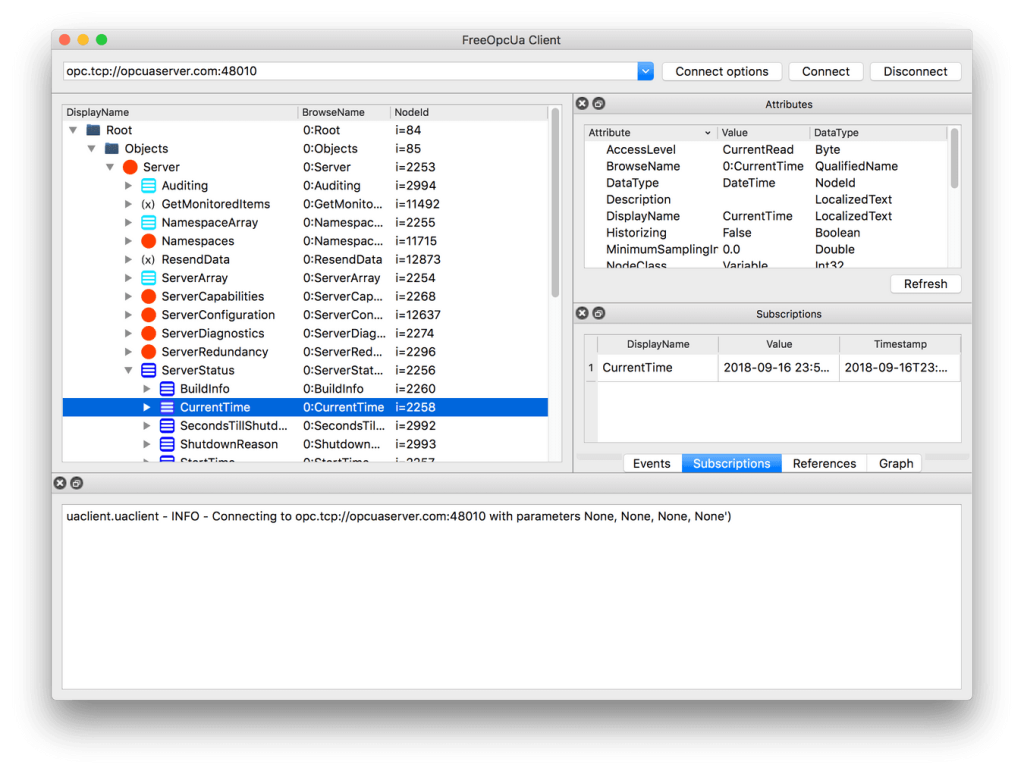

Our example utilizes the OPC UA server at opcuaserver.com, because it is publicly available. You can of course bring your own device instead.

OPC UA Browser