Prerequisites

- Connectware is already installed and configured.

- Connectware license key.

- Helm V3 installed (Helm | Installing Helm).

- kubectl installed (Install Tools).

- kubectl configured with the current context pointing to your target cluster (Configure Access to Multiple Clusters).

- A Kubernetes namespace that will be used.
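Before continuing, it can help to confirm that the required command-line tools are on your PATH. The following is a minimal sketch; the helper name require_tool is hypothetical and not part of Helm or kubectl.

```shell
#!/bin/sh
# Hypothetical helper: succeeds if the given command is available on PATH.
require_tool() {
  if command -v "$1" >/dev/null 2>&1; then
    echo "found: $1"
  else
    echo "missing: $1" >&2
    return 1
  fi
}

# Usage (uncomment on the machine you deploy from):
# require_tool helm && require_tool kubectl && kubectl config current-context
```

The commented usage line also prints the current kubectl context, so you can check that it points to your target cluster.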

Adding the Helm Chart Repository

Add the Cybus connectware-helm repository to your local Helm installation to use the connectware-agent Helm chart to install Connectware agents in Kubernetes:

helm repo add cybus https://repository.cybus.io/repository/connectware-helm

To verify that the Helm chart is available you can execute a Helm search:

helm search repo connectware-agent

| NAME | CHART VERSION | APP VERSION | DESCRIPTION |
|---|---|---|---|
| cybus/connectware-agent | 1.0.0 | 1.1.5 | Cybus Connectware standalone agents |

Creating Your values.yaml File

As with all Helm charts, the connectware-agent chart is configured using a YAML file. This file can have any name, however we will refer to it as the values.yaml file.

Create this file to start configuring your agent installation by using your preferred editor:

vi values.yaml

Installing a Single Agent

To quickly install a single agent you only need to add your Connectware license key to your values.yaml file as the Helm value licenseKey:

licenseKey: <your-connectware-license-key>

You can now use this file to deploy your Connectware agent in a Kubernetes namespace of your choice:

helm upgrade -i connectware-agent cybus/connectware-agent -f values.yaml -n <namespace>

Output

Release "connectware-agent" does not exist. Installing it now.

NAME: connectware-agent

LAST DEPLOYED: Mon Mar 13 14:31:39 2023

NAMESPACE: connectware

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

Thank you for using the Cybus Connectware agent Helm chart!

For additional information visit https://cybus.io/

Number of agents: 1

--------------------

- agent

If any of these agents are new, please remember to visit Connectware's client registry to set up the connection to Connectware.

Hint: If you have agents stuck in a status other than "Running", you need to delete the stuck pods before a pod with your new configuration will be created.

This will start a single Connectware agent named “agent”, which will connect to a Connectware installation deployed in the same namespace. Unlock the Client Registry in your Connectware admin UI to connect this agent. Refer to the Client Registry page of the Connectware documentation to learn how to use the Client Registry to connect agents.

You can repeat the same command to apply any changes to your values.yaml file configuration in the future.

Changing the Target Connectware

If you are not deploying the agent in the same Kubernetes namespace, or even inside the same Kubernetes cluster, you need to specify the hostname under which Connectware is reachable for this agent.

In the default configuration, the following network ports on Connectware must be reachable for the agent:

- TCP/443

- TCP/1883

Specify the hostname of the Connectware installation to which the agent connects by setting the Helm value connectwareHost inside the protocolMapperAgentDefaults context of your values.yaml file. For Connectware deployments in a different Kubernetes namespace this is “connectware.<namespace>”.

Example

licenseKey: <your-connectware-license-key>
protocolMapperAgentDefaults:
  connectwareHost: connectware.cybus # adjust to actual hostname of Connectware
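As a minimal sketch, the values file from the example can be generated from the namespace name. The helper names connectware_host and render_agent_values are hypothetical; only the Helm values licenseKey and protocolMapperAgentDefaults.connectwareHost come from this guide.

```shell
#!/bin/sh
# Hypothetical helper: builds the in-cluster hostname of a Connectware
# installation that runs in another namespace ("connectware.<namespace>").
connectware_host() {
  printf 'connectware.%s\n' "$1"
}

# Hypothetical helper: prints a values file that points agents at that host.
# $1: license key, $2: namespace of the Connectware installation
render_agent_values() {
  cat <<EOF
licenseKey: $1
protocolMapperAgentDefaults:
  connectwareHost: $(connectware_host "$2")
EOF
}

# Usage:
# render_agent_values "<your-connectware-license-key>" cybus > values.yaml
```

For a Connectware installation outside the cluster, you would write the externally reachable hostname into connectwareHost instead of using connectware_host.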

Connectware with Separate control-plane-broker

To connect to a Connectware that uses the separate control-plane-broker, you need to set the Helm value controlPlaneBrokerEnabled to true inside the protocolMapperAgentDefaults section of your values.yaml file.

Example

licenseKey: <your-connectware-license-key>
protocolMapperAgentDefaults:
  connectwareHost: connectware.cybus # adjust to actual hostname of Connectware
  controlPlaneBrokerEnabled: true

Note: This adds TCP/1884 to the required network ports.

Installing Multiple Agents with a Single Helm Installation

You can use the agent chart to install multiple Connectware agents. Every agent you configure needs to be named using the Helm value name in a collection entry inside the context protocolMapperAgents. This way, the default name “agent” will be replaced by the name you give the agent.

Example

licenseKey: <your-connectware-license-key>
protocolMapperAgentDefaults:
  connectwareHost: connectware.cybus # adjust to actual hostname of Connectware
protocolMapperAgents:
  - name: bender-robots
  - name: welder-robots

This will deploy two Connectware agents, named “bender-robots” and “welder-robots”. Both will contact the Client Registry of Connectware inside the Kubernetes namespace “cybus”, as described in the Client Registry page of the Connectware documentation.
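If the list of agents is maintained elsewhere, the protocolMapperAgents entries can be generated rather than typed by hand. This is only a sketch; render_multi_agent_values is a hypothetical helper, and it emits nothing beyond the Helm values used in the example above.

```shell
#!/bin/sh
# Hypothetical helper: prints a values file with one protocolMapperAgents
# entry per agent name.
# $1: license key, $2: Connectware hostname, remaining args: agent names
render_multi_agent_values() {
  license=$1
  host=$2
  shift 2
  printf 'licenseKey: %s\n' "$license"
  printf 'protocolMapperAgentDefaults:\n'
  printf '  connectwareHost: %s\n' "$host"
  printf 'protocolMapperAgents:\n'
  for name in "$@"; do
    printf '  - name: %s\n' "$name"
  done
}

# Usage:
# render_multi_agent_values "<your-connectware-license-key>" connectware.cybus \
#   bender-robots welder-robots > values.yaml
```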

Prerequisites

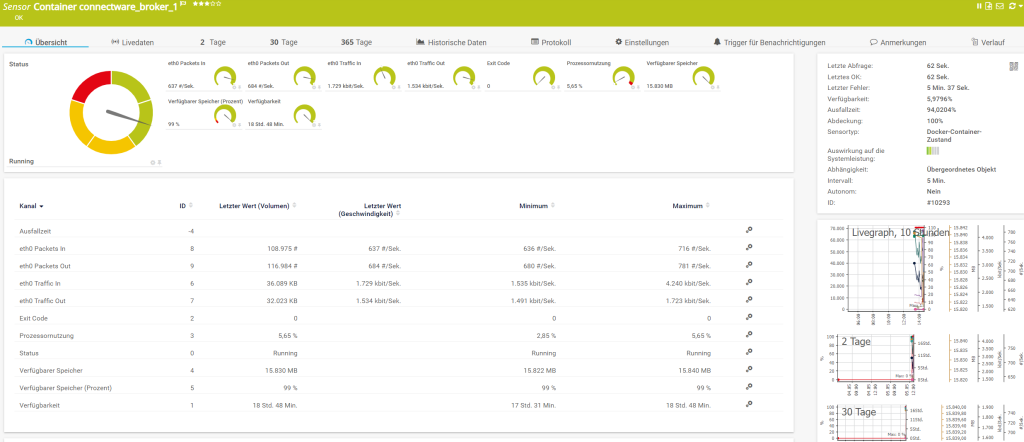

In this lesson we will walk you through all the steps necessary for PRTG to connect to a remote Docker socket.

As a prerequisite, it is necessary to have Docker installed on your system as well as an instance of PRTG with access to that host.

We assume you have at least a basic understanding of Docker and Linux. If you want to refresh your knowledge, we recommend looking at the lesson Docker Basics.

Explaining Linux would be far out of scope for this lesson, but it’s likely an answer to any Linux related question is out there on the internet. Anyway, if you read carefully, the listed commands should work with only minor adjustments.

Introduction

Monitoring your IT infrastructure has a lot of benefits, discovering bottlenecks and gaining insights for predictive measures being only the tip of the iceberg.

PRTG is a solid monitoring solution already present and actively used in a lot of IT departments. Because there are a lot of different monitoring solutions out there, this article is targeted to be compatible with the way PRTG handles Docker Container Monitoring.

PRTG requires the Docker socket to be exposed to the network, which is not the case in a default setup. The port is not exposed by default for security reasons.

An exposed and unsecured port could lead to a major security issue! Whoever is able to connect to the Docker socket could easily gain full control of the system, meaning root access.

Therefore it is really important to handle these configurations with care. The measure we are going to take is to secure the remote access by using TLS certificates. You can read more about this in the Docker docs.

A guide on the PRTG Docker Container Sensor can be found here.

Certificate Generation

First of all we need to create a bunch of certificates. There are basically two options for doing this.

- The first being to use your private company certificate authority.

- The second is to create the certificates locally.

We are going to use the second option, which means all certificates are going to be self-signed, but that’s totally fine for the purpose of this lesson.

All instructions for the creation of the certificates can be found in the docker docs. To simplify this a little bit, we created a small script that executes all the commands for you.

All the steps below assume you are going to use the script. The script is non-interactive, meaning you do not have to enter anything during execution. The generated certificates won’t be password protected and are valid for 50 years.

Create a directory called .docker in your home directory. This directory is the default directory where the Docker CLI stores all its information.

$ mkdir -p ~/.docker

Clone the script into the previously created directory.

$ git clone https://gist.github.com/6f6b9a85e136b37cd52983cb88596158.git ~/.docker/

Change into the directory.

$ cd ~/.docker/

Make the script executable.

$ chmod +x genCerts.sh

Then we need to adjust a few things within the script.

$ nano genCerts.sh

Adjust HOST to match your hostname and the last IP of the HOSTS string to match your host's IP address.

This is how it looks for my setup.

HOST="cybus.io"

HOSTS="DNS:$HOST,IP:127.0.0.1,IP:172.16.0.131"
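To illustrate how the HOSTS string is composed, here is a small sketch that assembles it from a hostname and an IP address. The helper name build_hosts is hypothetical and not part of genCerts.sh; the output format simply mirrors the two lines above.

```shell
#!/bin/sh
# Hypothetical helper: builds the HOSTS value in the format shown above.
# $1: hostname, $2: IP address of the Docker host
build_hosts() {
  printf 'DNS:%s,IP:127.0.0.1,IP:%s\n' "$1" "$2"
}

build_hosts cybus.io 172.16.0.131
# prints: DNS:cybus.io,IP:127.0.0.1,IP:172.16.0.131
```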

Now we are ready to execute the script.

$ sh genCerts.sh

The output should be somewhat like this.

# Start

# Generate CA private and public keys

Generating RSA private key, 4096 bit long modulus (2 primes)

.................................................................................................................................................................................................................................................................................................................................................................................................................................................................................................++++

...............++++

e is 65537 (0x010001)

Create a server key

Generating RSA private key, 4096 bit long modulus (2 primes)

.++++

..........................................................................++++

e is 65537 (0x010001)

Create certificate signing request

Sign the public key with CA

Signature ok

subject=CN = cybus.io

Getting CA Private Key

Create a client key and certificate signing request

Generating RSA private key, 4096 bit long modulus (2 primes)

.................................................................................................................................++++

...............................................................................................................................................................................................................................................................................................................++++

e is 65537 (0x010001)

Make the key suitable for client authentication

Generate the signed certificate

Signature ok

subject=CN = client

Getting CA Private Key

Remove the two certificate signing requests and extensions config

removed 'client.csr'

removed 'server.csr'

removed 'extfile.cnf'

removed 'extfile-client.cnf'

To verify all certificates have been generated successfully we inspect the content of the directory.

$ ls

These files should be present. If there are more files than this, that’s no issue.

ca-key.pem ca.pem ca.srl cert.pem genCerts.sh key.pem server-cert.pem server-key.pem
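Instead of comparing the listing by eye, the check can be scripted. The sketch below assumes the file names from the listing above; missing_cert_files is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: prints the name of every expected file that is
# missing from the given directory; prints nothing if all are present.
missing_cert_files() {
  for f in ca-key.pem ca.pem cert.pem key.pem server-cert.pem server-key.pem; do
    [ -f "$1/$f" ] || echo "$f"
  done
}

# Usage:
# missing_cert_files ~/.docker
# Optionally check that both certificates were signed by the generated CA:
# openssl verify -CAfile ~/.docker/ca.pem ~/.docker/server-cert.pem ~/.docker/cert.pem
```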

The last step is to locate the full path to where the certificates live.

$ pwd

This is the output in my case. Yours will look a little bit different.

/home/jan/.docker

Docker Service Configuration

With all the necessary certificates in place, we have to assign them to the docker daemon. We can find the location of the configuration file by checking the status of the docker service.

$ sudo systemctl status docker.service

As stated in the output, the configuration file is located at /lib/systemd/system/docker.service

● docker.service - Docker Application Container Engine

Loaded: loaded (/lib/systemd/system/docker.service; enabled; vendor preset: enabled)

Active: active (running) since Mon 2022-05-02 10:26:56 EDT; 33s ago

TriggeredBy: ● docker.socket

Docs: https://docs.docker.com

Main PID: 468 (dockerd)

Tasks: 9

Memory: 109.2M

CPU: 307ms

CGroup: /system.slice/docker.service

└─468 /usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock

To adjust the configuration to our needs, we are going to open the configuration using sudo privileges.

$ sudo nano /lib/systemd/system/docker.service

Find the line starting with ExecStart=/usr/bin/dockerd -H fd:// and add the following content to it. Be sure to use the correct path for your setup.

-H tcp://0.0.0.0:2376 --tlsverify=true --tlscacert=/home/jan/.docker/ca.pem --tlscert=/home/jan/.docker/server-cert.pem --tlskey=/home/jan/.docker/server-key.pem

For me the complete line looks like this.

ExecStart=/usr/bin/dockerd -H fd:// -H tcp://0.0.0.0:2376 --tlsverify=true --tlscacert=/home/jan/.docker/ca.pem --tlscert=/home/jan/.docker/server-cert.pem --tlskey=/home/jan/.docker/server-key.pem --containerd=/run/containerd/containerd.sock
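Because the certificate directory differs per setup, it may be convenient to generate the additional options from that path. This is a sketch only; dockerd_tls_flags is a hypothetical helper that reproduces the options shown above for a given directory.

```shell
#!/bin/sh
# Hypothetical helper: prints the extra dockerd options for a given
# certificate directory, in the same form as the line above.
dockerd_tls_flags() {
  printf '%s\n' "-H tcp://0.0.0.0:2376 --tlsverify=true --tlscacert=$1/ca.pem --tlscert=$1/server-cert.pem --tlskey=$1/server-key.pem"
}

dockerd_tls_flags /home/jan/.docker
```

Pass the directory reported by pwd in the previous section as the argument.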

Reload the systemd configuration and restart the Docker service.

$ sudo systemctl daemon-reload

$ sudo systemctl restart docker

Now we can verify that our changes took effect.

$ sudo systemctl status docker.service

● docker.service - Docker Application Container Engine

Loaded: loaded (/lib/systemd/system/docker.service; enabled; vendor preset: enabled)

Active: active (running) since Tue 2022-05-03 04:56:12 EDT; 2min 32s ago

TriggeredBy: ● docker.socket

Docs: https://docs.docker.com

Main PID: 678 (dockerd)

Tasks: 9

Memory: 40.8M

CPU: 236ms

CGroup: /system.slice/docker.service

└─678 /usr/bin/dockerd -H fd:// -H tcp://0.0.0.0:2376 --tlsverify=true --tlscacert=/home/jan/.docker/ca.pem --tlscert=/home/jan/.docker/server-cert.pem --tlskey=/home/jan/.docker/server-key.pem --containerd=/run/containerd/containerd.sock

Now we can use the Docker CLI to connect to the Docker daemon using the specified port. The important part is to use --tlsverify=true, as this tells the Docker CLI to use the generated certificates located in our home directory (~/.docker).

Remember to replace the IP address in the second line with your own.

$ docker -H 127.0.0.1:2376 --tlsverify=true version

$ docker -H 172.16.0.131:2376 --tlsverify=true version

This is the output of both commands on my system.

Client: Docker Engine - Community

Version: 20.10.14

API version: 1.41

Go version: go1.16.15

Git commit: a224086

Built: Thu Mar 24 01:48:21 2022

OS/Arch: linux/amd64

Context: default

Experimental: true

Server: Docker Engine - Community

Engine:

Version: 20.10.14

API version: 1.41 (minimum version 1.12)

Go version: go1.16.15

Git commit: 87a90dc

Built: Thu Mar 24 01:46:14 2022

OS/Arch: linux/amd64

Experimental: false

containerd:

Version: 1.5.11

GitCommit: 3df54a852345ae127d1fa3092b95168e4a88e2f8

runc:

Version: 1.0.3

GitCommit: v1.0.3-0-gf46b6ba

docker-init:

Version: 0.19.0

GitCommit: de40ad0
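As an alternative to passing -H and --tlsverify on every call, the Docker CLI also reads the environment variables DOCKER_HOST, DOCKER_TLS_VERIFY and DOCKER_CERT_PATH. The sketch below prints matching export statements; docker_tls_env is a hypothetical helper, and port 2376 is the one configured above.

```shell
#!/bin/sh
# Hypothetical helper: prints export statements for the Docker CLI's
# DOCKER_HOST, DOCKER_TLS_VERIFY and DOCKER_CERT_PATH variables.
# $1: IP or hostname of the Docker host
# $2: directory containing ca.pem, cert.pem and key.pem
docker_tls_env() {
  printf 'export DOCKER_HOST=tcp://%s:2376\n' "$1"
  printf 'export DOCKER_TLS_VERIFY=1\n'
  printf 'export DOCKER_CERT_PATH=%s\n' "$2"
}

# Usage:
# eval "$(docker_tls_env 172.16.0.131 "$HOME/.docker")"
# docker version
```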

PRTG

The last and final step is to install the docker sensor inside of PRTG. This should be fairly easy to accomplish by following the provided instructions from https://www.paessler.com/manuals/prtg/docker_container_status_sensor.

Prerequisites

In this lesson we will set up a local Cybus Connectware Instance using Ansible.

As a prerequisite, it is necessary to have Ansible, Docker and Docker Compose installed on your system as well as a valid Connectware License on hand.

Docker shouldn’t be installed using snapcraft!

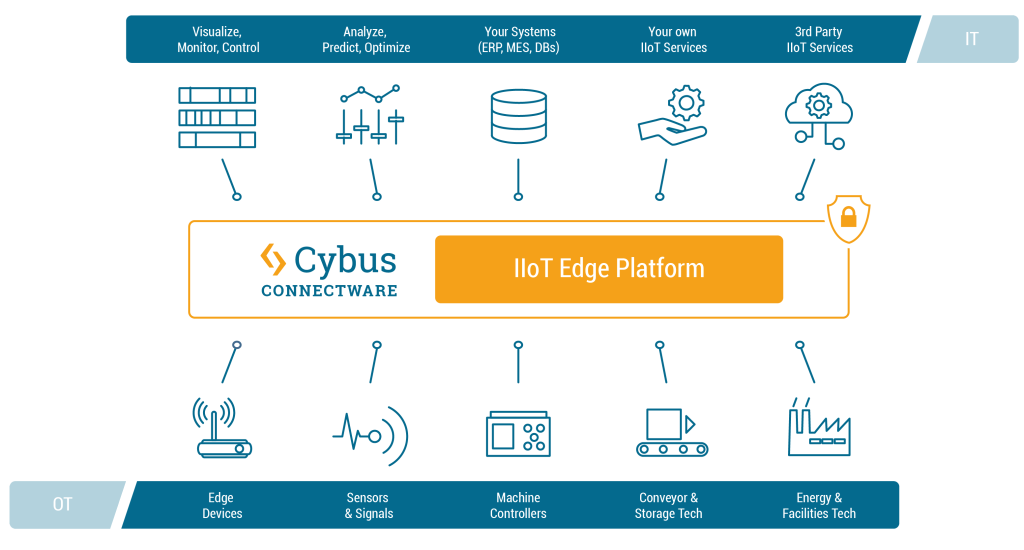

We assume you are already familiar with Cybus Connectware and its service concept. If not, we recommend reading the articles Connectware Technical Overview and Service Basics for a quick introduction. Furthermore this lesson requires basic understanding of Docker and Ansible. If you want to refresh your knowledge, we recommend looking at the lesson Docker Basics and this Ansible Getting started guide.

Introduction

Ansible is an open-source provisioning tool enabling infrastructure as code. Cybus provides a set of custom modules (Collection) exclusively developed to manage every part of a Connectware Deployment, seamlessly integrated into the Ansible workflow. With only a few lines of code you can describe and roll out your whole infrastructure, including services, users and many more.

The collection provides the following modules:

- instance | Manages Connectware Instances

- service | Manages Connectware Services

- role | Manages Connectware Roles

- user | Manages Connectware Users

- agent | Manages Agent Instances

Ansible Collection Installation

First of all we have to make sure that the Connectware Ansible Collection is present on our system. The Collection is available for Download on Ansible Galaxy.

Installing the Collection is fairly easy by using Ansible Tools:

$ ansible-galaxy collection install cybus.connectware

To get a list of all installed collections you can use the following command:

$ ansible-galaxy collection list

If you have already installed the collection you can force an update like this:

$ ansible-galaxy collection install cybus.connectware --force

Writing Our First Playbook

To provide Ansible with all the information that is required to perform the Connectware installation, we need to write a short playbook. Create an empty folder and a file called playbook.yaml with the following content:

- name: Connectware Deployment
  hosts: localhost
  tasks:
    - name: Deploy Connectware
      cybus.connectware.instance:
        license: ***
        install_path: ./

Taking the file apart, we define one play named Connectware Deployment, which will be executed on the given hosts. The only host in our case is localhost.

Then we define all the tasks to be executed. We only have one task called Deploy Connectware. The task takes a module to be executed along with some configuration parameters. For now we only specify the parameters license and install_path. Make sure to replace the *** with your actual license key. The install_path will be the folder you created your playbook in.

There are a lot more parameters to use with this module, but the only required one is license. To see a full list use this command:

$ ansible-doc cybus.connectware.instance

To run the playbook, open a shell in the newly created folder and execute this command (execution may take a few minutes):

$ ansible-playbook playbook.yaml

The output should look similar to this.

Notice that the state of the Deploy Connectware task is marked as changed. This indicates that Connectware is now running and is reachable at https://localhost.

Besides the log output, you should now find two new files next to playbook.yaml. One is the actual Docker Compose file managing the Connectware containers and the other one holds some additional configuration.

[WARNING]: No inventory was parsed, only implicit localhost is available

[WARNING]: provided hosts list is empty, only localhost is available. Note that the implicit localhost does not match 'all'

PLAY [Connectware Deployment] ***************************************************************************

TASK [Gathering Facts] ***************************************************************************

ok: [localhost]

TASK [Deploy Connectware] ***************************************************************************

changed: [localhost]

PLAY RECAP ***************************************************************************

localhost: ok=2 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

If you like, you can rerun the exact same command, which will result in no operation, since Connectware already has the desired state. As you can see, this time there is no changed state.

$ ansible-playbook playbook.yaml

[WARNING]: No inventory was parsed, only implicit localhost is available

[WARNING]: provided hosts list is empty, only localhost is available. Note that the implicit localhost does not match 'all'

PLAY [Connectware Deployment] ***************************************************************************

TASK [Gathering Facts] ***************************************************************************

ok: [localhost]

TASK [Deploy Connectware] ***************************************************************************

ok: [localhost]

PLAY RECAP ***************************************************************************

localhost : ok=2 changed=0 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
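If you want to check this idempotency from a script, the changed counter can be extracted from the PLAY RECAP line. This is a minimal sketch that assumes the recap format shown above and a host named localhost; changed_count is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: reads ansible-playbook output on stdin and prints
# the "changed" counter from the PLAY RECAP line of localhost.
changed_count() {
  sed -n 's/^localhost.*changed=\([0-9][0-9]*\).*/\1/p'
}

printf 'localhost : ok=2 changed=0 unreachable=0 failed=0\n' | changed_count
# prints: 0

# Usage against a real run:
# ansible-playbook playbook.yaml | changed_count
```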

Installing a Service

Now that we have a running Cybus Connectware instance, we go a step further and install a Service. First thing we have to do is to create the Service Commissioning File. We will use a very simple Service for demonstration purposes. Create a file called example-service.yml and paste in the following content:

---
description: Example Service
metadata:
  name: example_service
resources:
  mqttConnection:
    type: Cybus::Connection
    properties:
      protocol: Mqtt
      connection:
        host: !ref Cybus::MqttHost
        port: !ref Cybus::MqttPort
        scheme: mqtt
        username: !ref Cybus::MqttUser
        password: !ref Cybus::MqttPassword

There is not much going on here other than creating a connection to the internal Connectware broker.

Next we have to enrich our playbook by appending another task like this:

    - name: Install Service
      cybus.connectware.service:
        id: example_service
        commissioning_file: ./example-service.yml

The task uses another module of the collection for managing services. The required parameters are id and commissioning_file.
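Since indentation matters in YAML, the following sketch writes the complete playbook with both tasks in one step. The helper name write_playbook is hypothetical, and the quoted "***" is a placeholder for your license key.

```shell
#!/bin/sh
# Hypothetical helper: writes the playbook with both tasks to the given file.
write_playbook() {
  cat > "$1" <<'EOF'
- name: Connectware Deployment
  hosts: localhost
  tasks:
    - name: Deploy Connectware
      cybus.connectware.instance:
        license: "***"  # placeholder: replace with your license key
        install_path: ./
    - name: Install Service
      cybus.connectware.service:
        id: example_service
        commissioning_file: ./example-service.yml
EOF
}

# Usage:
# write_playbook playbook.yaml
```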

You can learn more about the module by using this command:

$ ansible-doc cybus.connectware.service

When executing the playbook again, you should see similar output to this:

$ ansible-playbook playbook.yaml

[WARNING]: No inventory was parsed, only implicit localhost is available

[WARNING]: provided hosts list is empty, only localhost is available. Note that the implicit localhost does not match 'all'

PLAY [Connectware Deployment] ***************************************************************************

TASK [Gathering Facts] ***************************************************************************

ok: [localhost]

TASK [Deploy Connectware] ***************************************************************************

ok: [localhost]

TASK [Install Service] ***************************************************************************

changed: [localhost]

PLAY RECAP ***************************************************************************

localhost: ok=3 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

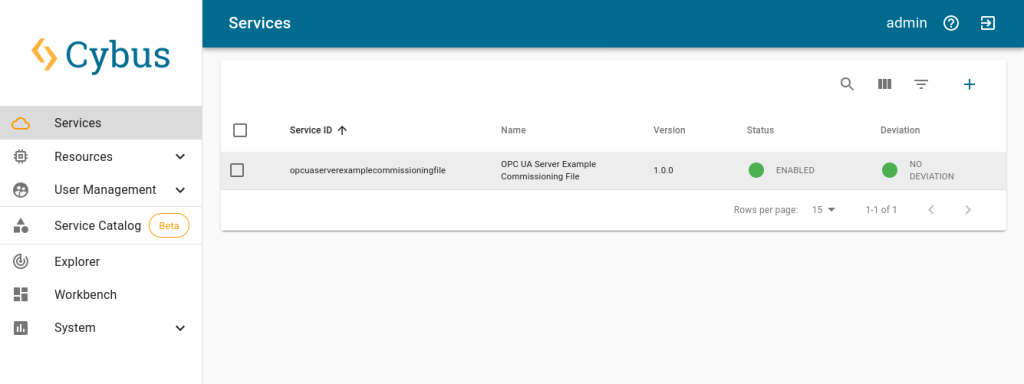

When visiting the Connectware UI Services page you should now see the service being installed and enabled (https://localhost/admin/#/services).

Stopping Connectware

Every module supports different states. The default state of the instance module for example is started. To stop the Connectware we define another task, use the instance module and define the state to be stopped.

    - name: Stopping Connectware
      cybus.connectware.instance:
        state: stopped
        license: ***
        install_path: ./

After executing the playbook once more, Cybus Connectware should no longer be running.

Next Steps for Using Ansible

All the steps described above are really basic and there is so much more to learn and discover.

The recommended next steps are:

- Ansible Connectware Collection inside Docker

- Automated Connectware Deployment using GitLab CI/CD and Ansible

Introduction

This quick start guide describes the steps to install the Cybus Connectware onto a Kubernetes cluster.

Please consult the article Installing Cybus Connectware for the basic requirements to run the software, like having access to the Cybus Portal to acquire a license key.

The following topics are covered by this article:

- Prerequisites

- Quick Installation Guide

- The Cybus Connectware Helm Chart

- System requirements, resource limits and permissions

- Specialties

- Deploying Protocol Mapper Agents

Prerequisites

We assume that you are already familiar with the Cybus Portal and that you have obtained a license key or license file. Also see the prerequisites in the article Installing Cybus Connectware.

This guide does not introduce Kubernetes, Docker, containerization or the related tooling. We expect the system admin to know their Kubernetes environment, which, besides well-known standards, brings its own specific complexity, e.g. the choice of load balancers, the management environment, storage classes and the like. These choices are up to the customer’s operations team and should not affect the reliability of Cybus Connectware deployed there, provided the requirements are met.

Besides a Kubernetes cluster the following tools and resources are required:

- kubectl CLI

- Helm V3 CLI

- Ability to reach your Kubernetes cluster

- Ability to download Docker images from registry.cybus.io directly or through a mirror/proxy to your Kubernetes cluster nodes, if the internet connection is restricted.

Quick Installation Guide

To be able to start with Cybus Connectware on a Kubernetes cluster, use the prepared helm chart and the following steps:

- Add the public Helm V3 repository to the local configuration using the Helm CLI:

helm repo add cybus https://repository.cybus.io/repository/connectware-helm

- Update repository and verify available Connectware Helm Chart releases (e.g. 1.1.0)

helm repo update

helm search repo connectware [-l]

- Create a file called values.yaml. This file will be used to configure your installation of Connectware. Initially fill this file with this YAML content:

global:
  licensekey: <YOUR-CONNECTWARE-LICENSE-KEY>
  setImmutableLabels: true
  broker:
    clusterSecret: <SOME-RANDOM-SECRET-STRING>

- If your Kubernetes cluster does not specify a default StorageClass that provides ReadWriteOnce and ReadWriteMany access modes, please also set the value global.storage.storageClassName to a StorageClass that does provide them:

  storage:
    storageClassName: "san-storage" # example value

- Have a look at the default values by extracting them into a file called default-values.yaml and checking if you want to make further adjustments. It is, for example, possible that you need to adjust the resource request/limit values for smaller test clusters. In this case copy and adjust the global.podResources section from default-values.yaml to values.yaml.

helm show values cybus/connectware > default-values.yaml

- Optionally perform a dry-run with debug options to validate all settings. This example shows user supplied values, along with computed values and the rendered template:

helm install <YOURDEPLOYMENTNAME> cybus/connectware -f ./values.yaml --dry-run --debug -n<YOURNAMESPACE> --create-namespace

Example

helm install connectware cybus/connectware -f ./values.yaml --dry-run --debug -ncybus --create-namespace

- Start the installation through Helm:

helm install <YOURDEPLOYMENTNAME> cybus/connectware -f ./values.yaml -n<YOURNAMESPACE> --create-namespace

- Subsequent updates can be executed by:

helm upgrade <YOURDEPLOYMENTNAME> cybus/connectware -f ./values.yaml -n<YOURNAMESPACE>
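The initial values file from the steps above can also be generated by a script, including a random cluster secret. This is a sketch under assumptions: the helper names random_secret and render_connectware_values are hypothetical, and the nesting of broker under global follows the value path global.broker.clusterSecret used elsewhere in this article.

```shell
#!/bin/sh
# Hypothetical helper: prints 32 random hexadecimal characters.
random_secret() {
  head -c 16 /dev/urandom | od -An -tx1 | tr -d ' \n'
}

# Hypothetical helper: prints an initial values file for the connectware chart.
# $1: license key, $2: cluster secret
render_connectware_values() {
  cat <<EOF
global:
  licensekey: $1
  setImmutableLabels: true
  broker:
    clusterSecret: $2
EOF
}

# Usage (assumes helm is installed and the cybus repository was added):
# render_connectware_values "<YOUR-CONNECTWARE-LICENSE-KEY>" "$(random_secret)" > values.yaml
# helm install connectware cybus/connectware -f ./values.yaml -n cybus --create-namespace
```

Generate the secret once and keep the resulting values.yaml, because later upgrades need the same clusterSecret.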

Important values.yaml Parameters

When taking a look at the default-values.yaml file you should check out these important values within the global section:

- The licensekey value handles the license key of the Connectware installation. This needs to be a production license key. This parameter is mandatory unless you set licenseFile.

- The licenseFile value is used to activate Connectware in offline mode. The content of a license file downloaded from the Cybus Portal has to be set (this is a single line of a base64-encoded JSON object).

- The current version of the Helm chart always installs the most recent version of Connectware. You can override the Connectware image source and version using the image section.

- The broker section specifies MQTT broker related settings:

  - broker.clusterSecret: the authentication secret for the MQTT broker cluster. Note: The cluster secret for the broker is not a security feature. It is rather a cluster ID so that nodes do not connect to different clusters that might be running on the same network. Make sure that the controlPlaneBroker.clusterSecret is different from the broker.clusterSecret.

  - broker.replicaCount: the number of broker instances

- The controlPlaneBroker section specifies MQTT broker related settings:

  - controlPlaneBroker.clusterSecret: the authentication secret for the MQTT broker cluster. Note: The cluster secret for the broker is not a security feature. It is rather a cluster ID so that nodes do not connect to different clusters that might be running on the same network. Make sure that the controlPlaneBroker.clusterSecret is different from the broker.clusterSecret.

  - controlPlaneBroker.replicaCount: the number of broker instances

  - The controlPlaneBroker is optional. To activate it, set controlPlaneBroker.enabled: true. This creates a second broker cluster that handles only internal communications within Connectware.

- The loadBalancer section allows pre-configuration for a specific load balancer.

- The podResources set of values allows you to configure the amount of CPU and memory resources per pod. By default some starting point values are set, but depending on the particular use case they need to be tuned in relation to the expected load in the system, or reduced for test setups.

- The protocolMapperAgents section allows you to configure additional protocol-mapper instances in Agent mode. See the documentation below for more details.

Important Notes on Setting Values When Upgrading to Newer Versions of the Chart

Helm allows setting values by both specifying a values file (using -f or --values) and the --set flag. When upgrading this chart to newer versions you should use the same arguments for the Helm upgrade command to avoid conflicting values being set for the Chart; this is especially important for the value of global.broker.clusterSecret, which would cause the nodes not to form the cluster correctly, if not set to the same value used during install or upgrade.

For more information about value merging, see the respective Helm documentation.

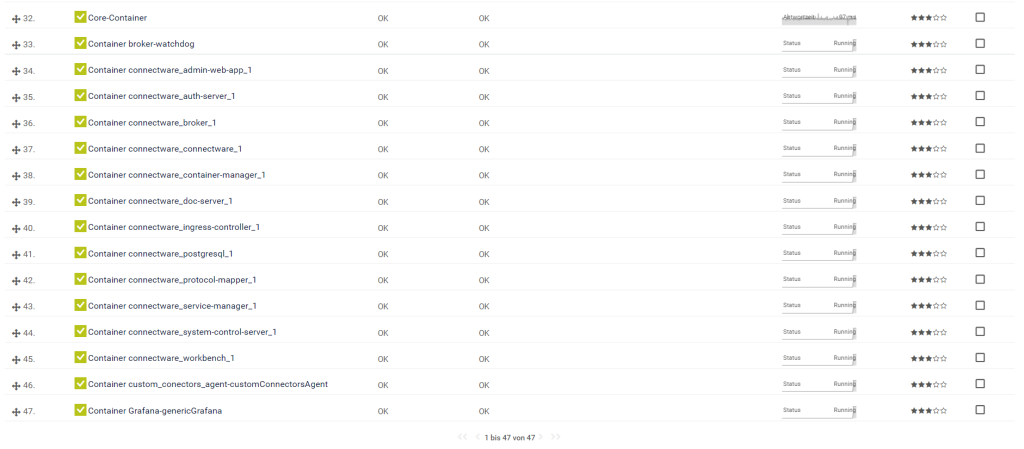

Using Connectware

After following all the steps above Cybus Connectware is now installed. You can access the Admin UI by opening your browser and entering the Kubernetes application URL https://<external-ip> with the initial login credentials:

Username: admin

Password: admin

To determine the external IP, the following kubectl command can be used:

kubectl get svc connectware --namespace=<YOURNAMESPACE> -o jsonpath={.status.loadBalancer.ingress}

Should this value be empty, your Kubernetes cluster load balancer might need further configuration, which is beyond the scope of this document. You can still take a first look at Connectware by port-forwarding to your local machine:

kubectl --namespace=<YOURNAMESPACE> port-forward svc/connectware 10443:443 1883:1883 8883:8883

You can now access the admin UI at: https://localhost:10443/

If you would like to learn more about how to use Connectware, check out our docs at https://docs.cybus.io/ or see more guides here.
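The choice between the external address and the port-forward fallback can be sketched as follows. The helper admin_ui_url is hypothetical, and the jsonpath in the usage comment is an assumption that selects only the first ingress IP.

```shell
#!/bin/sh
# Hypothetical helper: prints the Admin UI URL for a given external address,
# falling back to the port-forward address when the argument is empty.
admin_ui_url() {
  if [ -n "$1" ]; then
    printf 'https://%s\n' "$1"
  else
    printf 'https://localhost:10443/\n'
  fi
}

# Usage (assumes kubectl access to the cluster; the result may be empty,
# for example if the load balancer reports a hostname instead of an IP):
# ip=$(kubectl get svc connectware --namespace=<YOURNAMESPACE> -o jsonpath='{.status.loadBalancer.ingress[0].ip}')
# admin_ui_url "$ip"
```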

The Cybus Connectware Helm Chart

The Kubernetes version of Cybus Connectware comes with a Helm Umbrella chart, describing the instrumentation of the Connectware images for deployment in a Kubernetes cluster.

It is publicly available in the Cybus Repository for download or direct use with Helm.

System Requirements

Cybus Connectware expects a regular Kubernetes cluster and was tested for Kubernetes 1.22 or higher.

This cluster needs to be able to provide load-balancer ingress functionality and persistent volumes in ReadWriteOnce and ReadWriteMany access modes provided by a default StorageClass unless you specify another StorageClass using the global.storage.storageClassName Helm value.
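If the default StorageClass of the cluster does not offer both access modes, the Helm value mentioned above can point Connectware at a different one. A minimal sketch, in which the class name is a placeholder that you would replace with a StorageClass that actually exists in your cluster:

```yaml
# values.yaml fragment (sketch)
global:
  storage:
    # Placeholder: a StorageClass that can provide ReadWriteOnce
    # and ReadWriteMany volumes.
    storageClassName: <your-storage-class>
```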

For Kubernetes 1.25 and above Connectware needs a privileged namespace or a namespace with PodSecurityAdmission labels for warn mode. In case of specific boundary conditions and requirements in customer clusters, a system specification should be shared to evaluate them for secure and stable Cybus Connectware operations.
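Kubernetes Pod Security Admission is configured through namespace labels of the form pod-security.kubernetes.io/<mode>. The manifest below is only a sketch of a warn-mode namespace: the namespace name connectware and the chosen level baseline are assumptions, so check them against your cluster policy before use.

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: connectware            # assumed namespace name
  labels:
    # Warn-only mode: violations of the chosen level are reported
    # to the client but do not block pod creation.
    pod-security.kubernetes.io/warn: baseline
```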

Resource Limits

Connectware specifies default limits for CPU and memory in its Helm values that need to be at least fulfilled by the Kubernetes cluster for production use. Variations need to be discussed with Cybus, depending on the specific demands and requirements in the customer environment, e.g., the size of the broker cluster for the expected workload with respect to the available CPU cores and memory.

Smaller resource values are often enough for test or POC environments and can be adjusted using the global.podResources section of the Helm values.

Permissions

In order to run Cybus Connectware in Kubernetes clusters, two new RBAC roles are deployed through the Helm chart and will provide Connectware with the following namespace permissions:

Permissions for the Broker

| resource(/subresource)/action | permission |
|---|---|
| pods/list | list all containers, get status of all containers |
| pods/get, pods/watch | inspect containers |
| statefulsets/list | list all StatefulSets, get status of all StatefulSets |
| statefulsets/get, statefulsets/watch | inspect StatefulSets |

Permissions for the Container/Pod-Manager

| resource(/subresource)/action | permission |
|---|---|
| pods/list | list all containers, get status of all containers |
| pods/get, pods/watch | inspect containers |
| pods/log/get, pods/log/watch | inspect containers, get a stream of container logs |
| deployments/create | create Deployments |
| deployments/delete | delete Deployments |
| deployments/update, deployments/patch | restart containers (since we rescale Deployments) |
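To show how such a permission table translates into Kubernetes RBAC, the following Role is a hand-written sketch of the Container/Pod-Manager permissions. It is not the manifest shipped in the Helm chart; the role name and the namespace placeholder are hypothetical.

```yaml
# Illustrative only: not the manifest deployed by the chart.
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: container-manager-example   # hypothetical name
  namespace: <namespace>            # placeholder
rules:
  # List and inspect pods
  - apiGroups: [""]
    resources: ["pods"]
    verbs: ["list", "get", "watch"]
  # Read and stream container logs
  - apiGroups: [""]
    resources: ["pods/log"]
    verbs: ["get", "watch"]
  # Create, delete and rescale Deployments
  - apiGroups: ["apps"]
    resources: ["deployments"]
    verbs: ["create", "delete", "update", "patch"]
```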

Specialties

The system administrator needs to be aware of certain characteristics of the Connectware deployment:

- The Helm chart requires a license key to download images from the Cybus registry, which requires a proxy or direct connection to the registry.cybus.io host.

- To download a license file for the key, a connection to portal.cybus.io is required.

  - This can be skipped for an offline setup by defining the licenseFile parameter in the Helm chart values (see licenseFile above).

- Dynamic scaling is only possible for the broker and control-plane-broker StatefulSets.

- Multiple ProtocolMapper instances are possible by explicitly defining additional protocolMapperAgents in the Helm chart values (see below).

- When using MetalLB, the values.yaml can define an addressPoolName to assign an external IP address range managed by MetalLB, either through the Helm value global.loadBalancer.addressPoolName or by setting the metallb.universe.tf/address-pool annotation using the global.ingress.service.annotations Helm value.
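The two MetalLB options can be sketched as values.yaml fragments. The key paths are the ones named in the list above; the pool name is a placeholder for an address pool that already exists in your MetalLB configuration, and only one of the two variants should be used.

```yaml
# Option 1: let the chart assign the address pool.
global:
  loadBalancer:
    addressPoolName: <your-address-pool>

# Option 2 (use instead of option 1): set the MetalLB annotation yourself.
# global:
#   ingress:
#     service:
#       annotations:
#         metallb.universe.tf/address-pool: <your-address-pool>
```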

Deploying Protocol-Mapper Agents

The default-values.yaml file contains a protocolMapperAgents section representing a list of Connectware agents to deploy. The general configuration for these agents is the same as described in the Connectware documentation.

You can copy this section to your local values.yaml file to easily add agents to your Connectware installation.

The only required property of the list items is name; if only this property is specified the chart assumes some defaults:

- CYBUS_AGENT_NAME is set to the same value as name.

- CYBUS_MQTT_HOST is set to connectware, which is the DNS name of Connectware. Note: This DNS name must be reachable by each of the agents being deployed. Depending on the deployment distribution strategy of the target Kubernetes cluster, this name has to be configured accordingly.

- storageSize is set to 40 MB by default. The agents use some local storage, which needs to be configured for each use case. If a larger number of services is going to be deployed, this value should be set explicitly to a larger value.
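A protocolMapperAgents list using these defaults might look like the sketch below. The agent names are hypothetical, and the storageSize value is left as a placeholder because the expected value format should be taken from the comments in default-values.yaml rather than from this example.

```yaml
# values.yaml fragment (sketch)
protocolMapperAgents:
  # Only the name is given, so the defaults described above apply.
  - name: agent-a               # hypothetical agent name
  # An agent expected to host many services gets more local storage.
  - name: agent-b               # hypothetical agent name
    storageSize: <larger-size>  # placeholder; see default-values.yaml for the format
```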

You can check out the comments of that section in default-values.yaml to see further configuration options.

You can find further information in the general Connectware Agent documentation.

Prerequisites

In this lesson, we will send data from Cybus Connectware to an Elasticsearch Cluster.

As a prerequisite, it is necessary to set up the Connectware instance and the Elasticsearch instance to be connected. In case of joining a more advanced search infrastructure, a Logstash instance between Connectware and the Elasticsearch cluster may be useful.

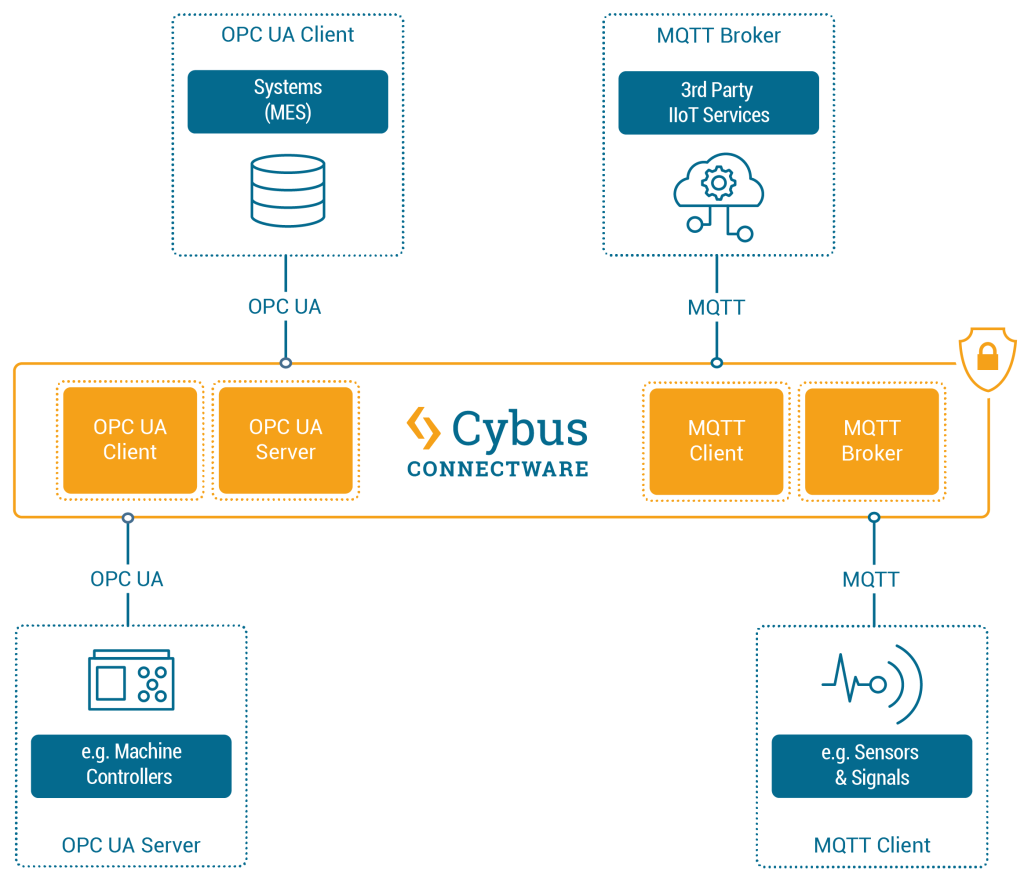

We assume you are already familiar with Cybus Connectware and its service concept. If not, we recommend reading the articles Connectware Technical Overview and Service Basics for a quick introduction. Furthermore, this lesson requires basic understanding of MQTT and how to publish data on an MQTT topic. If you want to refresh your MQTT knowledge, we recommend looking at the lessons MQTT Basics and How to connect an MQTT client to publish and subscribe data.

Introduction on Elasticsearch

This article provides general information about Elasticsearch and its role in the Industrial IoT context along with a hands-on section about the Cybus Connectware integration with Elasticsearch.

If you are already familiar with Elasticsearch and its ecosystem, jump directly to the hands-on section. See: Using Filebeat Docker containers with Cybus Connectware.

The article concludes by describing some aspects of working with relevant use cases for prototyping, design decisions and reviewing the integration scenario.

What is Elasticsearch?

Elasticsearch is an open-source enterprise-grade distributed search and analytics engine built on Apache Lucene. Lucene is a high-performance, full-featured text search engine programming library written in Java. Since its first release in 2010, Elasticsearch has become widely used for full-text search, log analytics, business analytics and other use cases.

Elasticsearch has several advantages over classic databases:

- It can be used to search for all kinds of documents using modern search engine capabilities.

- Elasticsearch supports a JSON-based domain-specific language (DSL), a RESTful API, TLS for encrypted communication, multitenancy and role-based access control for cluster APIs and indices.

- It is highly scalable and supports near real-time search.

- It’s distributed, which means that indices can be divided into shards with zero or more replicas while routing and rebalancing operations are performed automatically.

- It supports real-time GET requests, which makes it suitable as a NoSQL datastore.

Mastering these features is a known challenge, since working with Elasticsearch and search indices in general can become quite complex depending on the use case. The operational effort is also higher, but this can be mitigated by using managed Elasticsearch clusters offered by different cloud providers.

Elasticsearch inherently comes with a log aggregator engine called Logstash, a visualization and analytics platform called Kibana and a collection of data shippers called Beats. These four products are part of the integrated solution called the “Elastic Stack”. Please follow the links above to learn more about it.

Industrial IoT with the Elastic Stack

When it comes to Industrial IoT, we speak about collecting, enriching, normalizing and analyzing huge amounts of data ingested at a high rate even in smaller companies. This data is used to gain insights into production processes and optimize them, to build better products, to perform monitoring in real time, and last but not least, to establish predictive maintenance. To benefit from this data, it needs to be stored and analyzed efficiently, so that queries on that data can be made in near real time.

Here a couple of challenges may arise. One of them could be the mismatch between modern data strategies and legacy devices that need to be integrated into an analytics platform. Another challenge might be the need to obtain a complete picture of the production site, so that many different devices and technologies can be covered by an appropriate data aggregation solution.

Some typical IIoT use cases with the Elastic Stack include:

- Predictive analytics leading to predictive maintenance (which allows better maintenance plans, cost savings and fewer outages in the production process)

- Reducing reject rate in the production process

- Ensuring shop floor security

- Real-time machine status monitoring

The Elastic Stack has become one of several solutions for realizing such use cases in a time- and cost-efficient manner, and Elasticsearch can be easily integrated into the shop floor with Cybus Connectware.

Cybus Connectware is an ideal choice for this because its protocol abstraction layer is not only southbound protocol agnostic, supporting complex shop floor environments with many different machines, but also agnostic to the northbound systems. This means that customers can remain flexible and realize various use cases according to their specific requirements. For example, migrating a Grafana-based local monitoring system to Kibana, an Elastic Stack-based real-time monitoring dashboard, is a matter of just a few changes.

Connectware & Elasticsearch Integration

The learning curve for mastering Elasticsearch is steep for users who try it for the first time. Maintaining search indices and log shippers can also be complex for some use cases. In those cases, it might be easier and more efficient to integrate machines into an IIoT edge solution, such as Connectware.

Here are some benefits of using Cybus Connectware at the edge to stream data to Elasticsearch:

- Complex machine data is made available in a standardized format for use in Elasticsearch

- As an OT/IT gateway, Connectware allows full control over all data transmitted from machines to the northbound systems. This way it covers important aspects of data governance

- Changes in specified data formats can easily be covered by using a low-code approach for mappings and transformation rules in Connectware service commissioning files

- In case of connectivity issues to endpoints like an Elasticsearch cluster, machine data is cached and transmitted without loss after the connection is restored

- Different targets for machine data can be added at any time to existing services, so that smooth data migration to different and multiple northbound endpoint targets is possible

Nevertheless, when ingesting data the Filebeat and Logstash data processing features may also be very useful for normalizing data for all data sources, not just IIoT data from OT networks.

Before proceeding further, you should first obtain access credentials for an existing Elasticsearch cluster, or set up a new one. For this, follow the instructions below:

- Getting Started with Elasticsearch

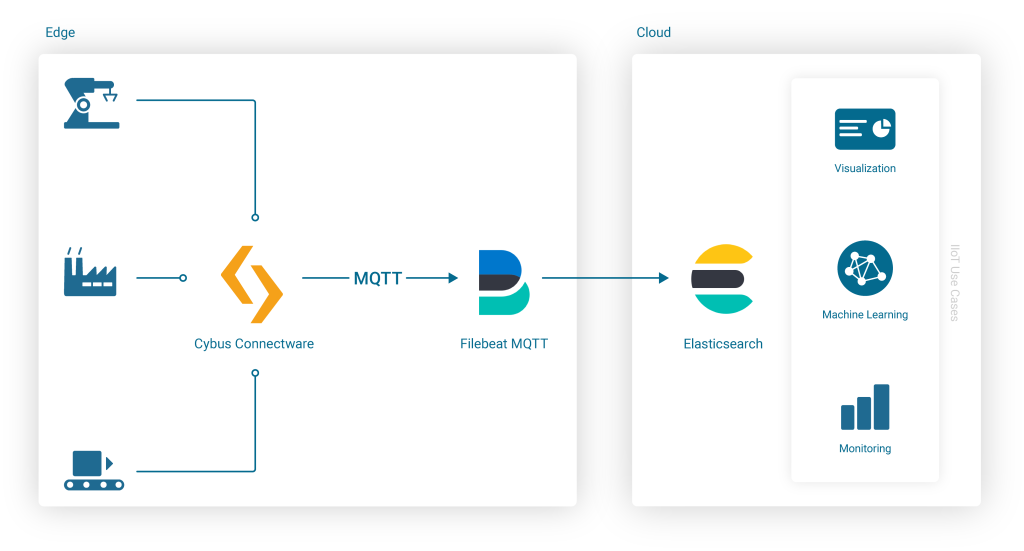

The simplest and most reliable way of communication when integrating Cybus Connectware with Elasticsearch is the MQTT input of a Filebeat instance. An additional advantage of the Connectware MQTT connector is its built-in data buffering, which means that data is stored locally when there is a temporary connection failure between Filebeat and the Elasticsearch cluster:

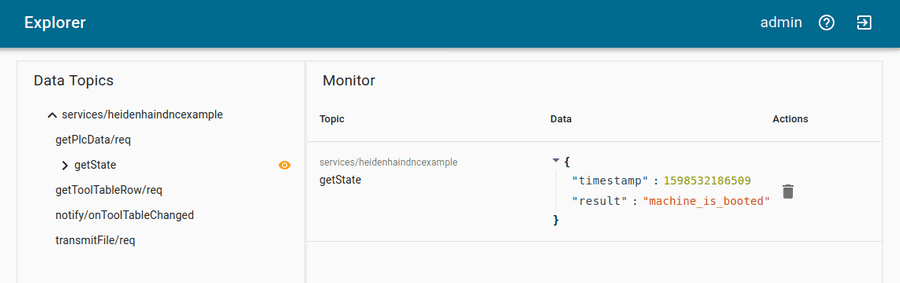

Using Filebeat Docker Containers with Cybus Connectware

Embedding the Filebeat Docker image into Connectware is easy because Connectware comes with an integrated interface for running Edge Applications. Once started, the Docker container connects to the integrated Connectware broker to fetch and process the data of interest.

All you have to do is to create a Connectware Service by writing a Commissioning File and install it on the Connectware instance. To learn more about writing Commissioning Files and Services, head over to the Learn Article called Service Basics.

Now let’s get straight to the point and start writing the Commissioning File.

Step 1. Create a Basic Template File Called filebeat.yml

This is the basic structure of a commissioning file:

---
description: Elastic Filebeat reading MQTT Input
metadata:
  name: Filebeat
parameters:
definitions:
resources:

Step 2. Add Container Resource for the Filebeat

Add a Cybus::Container Resource for the filebeat to the resources section in the template. This will later allow you to run the Container when installing and enabling the Service, using the docker image from docker.elastic.co directly:

resources:
  filebeat:
    type: Cybus::Container
    properties:
      image: docker.elastic.co/beats/filebeat:7.13.2
      volumes:
        - /var/run/docker.sock:/var/run/docker.sock:ro

Step 3. Specify the Filebeat Configuration

When starting the filebeat container, various variables must be configured correctly. In this example, these variables should not be integrated into a specialized container image. Instead, the variables should be configured “on the fly” when starting the standard container image from within Connectware, so that the entire configuration is stored in a single commissioning file. For this purpose, all configuration settings of the filebeat container are specified in the helper section of the commissioning file, defining a variable called CONFIG inside of the file’s definitions section:

definitions:
  CONFIG: !sub |
    filebeat.config:
      modules:
        path: /usr/share/filebeat/modules.d/*.yml
        reload.enabled: false
    filebeat.inputs:
    - type: mqtt
      hosts:
        - tcp://${Cybus::MqttHost}:${Cybus::MqttPort}
      username: admin
      password: admin
      client_id: ${Cybus::ServiceId}-filebeat
      qos: 0
      topics:
        - some/topic
    setup.ilm.enabled: false
    setup.template.name: "some_template"
    setup.template.pattern: "my-pattern-*"
    output.elasticsearch:
      index: "idx-%{+yyyy.MM.dd}-00001"
    cloud.id: "elastic:d2V***"
    cloud.auth: "ingest:Xbc***"

Step 4. Tweak the Filebeat Container Behavior on Startup

Now that the Filebeat configuration is set up, the container resource filebeat mentioned above needs to be extended in order to use this configuration on startup (in this and the following examples, the top-level headline resources: is skipped for brevity):

filebeat:
  type: Cybus::Container
  properties:
    image: docker.elastic.co/beats/filebeat:7.13.2
    entrypoint: [""]
    command:
      - "/bin/bash"
      - "-c"
      - !sub 'echo "${CONFIG}" > /tmp/filebeat.docker.yml && /usr/bin/tini -s -- /usr/local/bin/docker-entrypoint -c /tmp/filebeat.docker.yml -environment container'
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock:ro

Step 5. Introduce Parameters for Access Credentials

The filebeat container needs access credentials to set up the cloud connection correctly. Those credentials should not be written into the file as hard-coded values though. Credentials as hard-coded values should be avoided not only for security reasons, but also to make the commissioning file re-usable and re-configurable for many more service operators. To easily achieve this, we are going to use parameters.

In the parameters section we are creating two parameters of type string:

parameters:
  filebeat-cloud-id:
    type: string
    description: The cloud id string, for example elastic:d2V***
  filebeat-cloud-auth:
    type: string
    description: The cloud auth string, for example ingest:Xbc***

These parameters are now ready to be used in our configuration. During the installation of the service, Connectware will ask us to provide the required values for these parameters.

To use the parameters in the configuration, the following lines in the Filebeat configuration (the CONFIG definition from above) need to be adapted:

cloud.id: "${filebeat-cloud-id}"
cloud.auth: "${filebeat-cloud-auth}"

Step 6. Replace Broker Credentials with Connectware Parameters

The filebeat container is using access credentials not only for the cloud connection but also for the local input connection, which is the connection to the Connectware MQTT broker. Those access credentials have been set to the default credentials (admin/admin) in the definition above, which now need to be adapted to the actual non-default credentials. For your convenience, Connectware already has Global Parameters that are replaced by the current credentials of the MQTT broker. So the following lines in the Filebeat configuration (the CONFIG definition from above) need to be adapted, too:

username: ${Cybus::MqttUser}
password: ${Cybus::MqttPassword}

Step 7. Configure Read Permissions for Topics

Finally the defaultRole for this service requires additional read permissions for all MQTT topics which the service should consume. To grant these additional privileges, another resource should be added:

resources:
  defaultRole:
    type: Cybus::Role
    properties:
      permissions:
        - resource: some/topic
          operation: read
          context: mqtt

Final Service Commissioning File

In the end, the entire service commissioning file should look like this:

---
description: Elastic Filebeat reading MQTT Input
metadata:
  name: Filebeat
parameters:
  filebeat-cloud-id:
    type: string
    description: The cloud id string, for example elastic:d2V***
  filebeat-cloud-auth:
    type: string
    description: The cloud auth string, for example ingest:Xbc***
definitions:
  # Filebeat configuration
  CONFIG: !sub |
    filebeat.config:
      modules:
        path: /usr/share/filebeat/modules.d/*.yml
        reload.enabled: false
    filebeat.inputs:
    - type: mqtt
      hosts:
        - tcp://${Cybus::MqttHost}:${Cybus::MqttPort}
      username: ${Cybus::MqttUser}
      password: ${Cybus::MqttPassword}
      client_id: ${Cybus::ServiceId}-filebeat
      qos: 0
      topics:
        - some/topic
    setup.ilm.enabled: false
    setup.template.name: "some_template"
    setup.template.pattern: "my-pattern-*"
    output.elasticsearch:
      index: "idx-%{+yyyy.MM.dd}-00001"
    cloud.id: "${filebeat-cloud-id}"
    cloud.auth: "${filebeat-cloud-auth}"

resources:
  # The filebeat docker container
  filebeat:
    type: Cybus::Container
    properties:
      image: docker.elastic.co/beats/filebeat:7.13.2
      entrypoint: [""]
      command:
        - "/bin/bash"
        - "-c"
        - !sub 'echo "${CONFIG}" > /tmp/filebeat.docker.yml && /usr/bin/tini -s -- /usr/local/bin/docker-entrypoint -c /tmp/filebeat.docker.yml -environment container'
      volumes:
        - /var/run/docker.sock:/var/run/docker.sock:ro
  # Gaining privileges
  defaultRole:
    type: Cybus::Role
    properties:
      permissions:
        - resource: some/topic
          operation: read
          context: mqtt

This commissioning file can now be installed and enabled, which will also start the filebeat container and set up its connections correctly. There is probably no input data available yet; we will get back to this later. Depending on the input data, an additional structure should be prepared for useful content in Elasticsearch, which is described in the next section.

Improving Attributes for More Suitable Content Structure in Elasticsearch

The first contact with the Elasticsearch cluster can be verified by sending some message to the topic to which the Filebeat MQTT inputs are subscribed (here: “some/topic”) and reviewing the resulting event in Kibana.

Once this is done, a service integrator may identify several elements of the created JSON document that need to be changed. The deployed Connectware service commissioning file allows us to ship incoming MQTT messages in configured topics to the Elasticsearch cluster as a JSON document with certain metadata that an operator may want to change to improve the data source information.

For example, a message sent using the service described above contains multiple fields with identical values, in this case agent.name, agent.hostname and host.name. This is due to the naming convention for container resources in a service commissioning file described in the Connectware Container Resource. As the ServiceId is “filebeat”, and the container resource is named “filebeat” too, the resulting container name, hostname and agent name in the transmitted search index documents are “filebeat-filebeat”, which looks as follows:

...
"fields": {
  ...
  "agent.name": [
    "filebeat-filebeat"
  ],
  "host.name": [
    "filebeat-filebeat"
  ],
  ...
  "agent.hostname": [
    "filebeat-filebeat"
  ],
...

To get appropriate names in the search index for further evaluation and post-processing, either change the serviceId and/or the container resource name in the service commissioning file, or use Filebeat configuration options to set an alternative agent.name (by default, it is derived from the hostname, which is the container hostname created by Connectware). Be aware that the client_id in the Filebeat MQTT input configuration is limited to 23 characters.

Example

Change both the service name (serviceId) and the container resource name to identify the respective device as the data source, and redeploy the service commissioning file:

...
metadata:
  name: Shopfloor 1
...
resources:
  # The filebeat docker container
  filebeat_Machine_A_032:
...

In addition to this, the Filebeat configuration can be modified slightly to set the agent.name appropriately along with some additional tags to identify our edge data sources and data shipper instance (which is useful for grouping transactions sent by this single Beat):

...
definitions:
  CONFIG: !sub |
    ...
    name: "shopfloor-1-mqttbeat"
    tags: [ "Cybus Connectware", "edge platform", "mqtt" ]
    ...

This leads to improved field values in the search index, so that transactions can be grouped more easily, for example:

...
"fields": {
  ...
  "agent.name": [
    "shopfloor-1-mqttbeat"
  ],
  "host.name": [
    "shopfloor-1-mqttbeat"
  ],
  ...
  "agent.hostname": [
    "shopfloor1-filebeat_Machine_A_032"
  ],
  ...
  "tags": [
    "Cybus Connectware",
    "edge platform",
    "mqtt"
  ],
...

Providing Machine Data for Elasticsearch

Using Cybus Connectware offers extensive flexibility in mapping devices, configuring pre-processing rules and adding many different resources. It is up to the customer to define the requirements, so that a well-architected set of services can be derived for the Connectware instance.

To stream machine data collected by Connectware to Elasticsearch, the Filebeat container can subscribe to existing MQTT topics. Alternatively, it can subscribe to MQTT topics that carry specifically transformed payloads. For instance, a normalized payload for an Elasticsearch index may specify an additional timestamp or specific data formats.
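Subscribing Filebeat to more than one topic only requires extending the topics list of the MQTT input inside the CONFIG definition. The fragment below is a sketch based on the configuration from the previous steps; the second topic name is hypothetical. Each additional topic also needs a matching read permission in the defaultRole resource.

```yaml
# Fragment of the Filebeat CONFIG definition (sketch)
filebeat.inputs:
- type: mqtt
  hosts:
    - tcp://${Cybus::MqttHost}:${Cybus::MqttPort}
  username: ${Cybus::MqttUser}
  password: ${Cybus::MqttPassword}
  client_id: ${Cybus::ServiceId}-filebeat
  qos: 0
  topics:
    - some/topic          # topic used throughout this lesson
    - some/other/topic    # hypothetical second topic
```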

The advantage of using Connectware to transmit data to Elasticsearch is that it supports a lightweight rules engine to map data from different machines to Filebeat by just working with MQTT topics, for example:

resources:
  # mapping with enricher pattern for an additional timestamp
  machineDataMapping:
    type: Cybus::Mapping
    properties:
      mappings:
        - subscribe:
            topic: !sub '${Cybus::MqttRoot}/machineData/+field'
          rules:
            - transform:
                expression: |
                  (
                    $d := { $context.vars.field: value };
                    $merge(
                      [
                        $last(),
                        {
                          "coolantLevel": $d.`coolantLevel`,
                          "power-level": $d.`power-level`,
                          "spindleSpeed": $d.`spindleSpeed`,
                          "timestamp": timestamp
                        }
                      ]
                    )
                  )
          publish:
            topic: 'some/topic'

A reasonable structural design of related Connectware service commissioning files depends on the number of machines to connect, their payload, the complexity of the transformation and the search index specifications in the Elasticsearch environment. See the GitHub project for a more advanced example concerning machine data transformation.

To explain these settings in detail, Cybus provides complete Connectware documentation and Learn articles like Service Basics.

Discover, Visualize, and Analyze Data in Elasticsearch

What has been added to the original Filebeat configuration is the typical task of a service operator connecting shop floor devices and organizing the respective resources in a Connectware service commissioning file. The service operator has further options to decompose this file into multiple files to optimize the deployment structure in this low-code/no-code environment for their needs. Contact Cybus to learn more about good practices here.

Now that the data is transmitted to the Elasticsearch cluster, further processing is up to the search index users. The Elastic Stack ecosystem provides tools for working with search indices created from our data, such as simple full-text search with Discover, Kibana visualizations or anomaly detection.

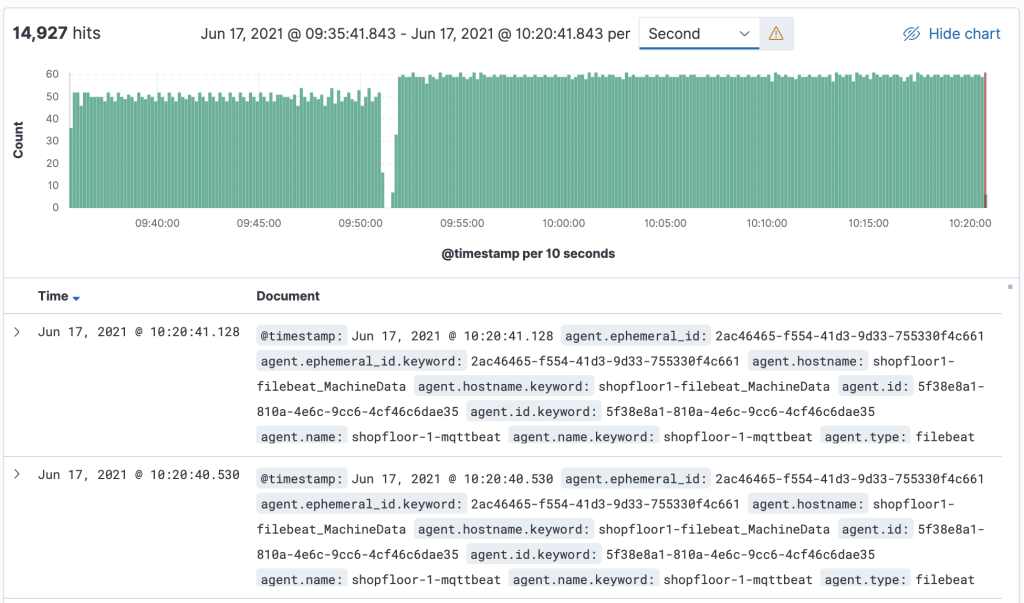

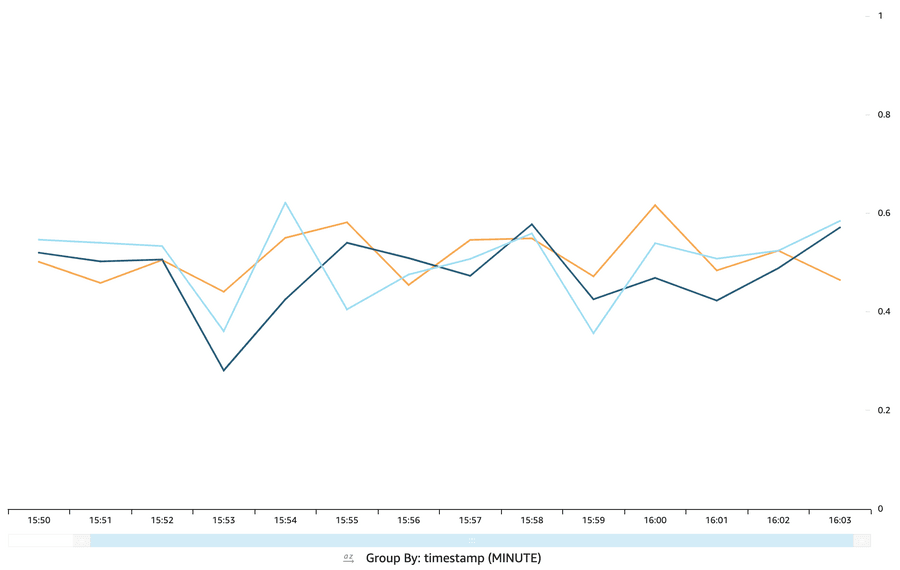

The message is transmitted as a message string and will be stored as a JSON document with automatically de-composed payload and associated metadata for the search index. A simple discovery of that continuously collected data shows something like this:

Where to Go from Here

This lesson offered a brief introduction to the integration of Cybus Connectware with Elasticsearch using the Connectware built-in MQTT connector and Filebeat with MQTT input in a service commissioning file.

Additionally, Cybus provides sample service commissioning files and some other technical details in the GitHub project How to integrate Elasticsearch with Connectware.

As a next step, you can use Cybus Connectware to organize data ingestion from multiple machines for its further use with the Elastic Stack.

Introduction

In this lesson you will learn how to install Cybus Connectware directly onto a Linux system. The following topics are covered by this article:

- Acquiring a license

- Installing Cybus Connectware

- Updating Cybus Connectware

- Changing SSL certificates

- Removing Connectware

Prerequisites for Installing Cybus Connectware

This lesson assumes that you already have an account for the Cybus Portal. If you have no valid credentials, please contact our sales team.

If you want to refresh your knowledge of Docker before starting this lesson see Docker Basics Lesson.

Docker and Docker Compose must also be installed and running on your host. During the installation, an internet connection is required to download Docker images from registry.cybus.io.

If Docker is not installed, start here:

If Docker Compose is not installed, then see here https://docs.docker.com/compose/install/

Acquire your License Key

During the installation you will be asked to enter your Connectware license key. Follow these steps to obtain your license key.

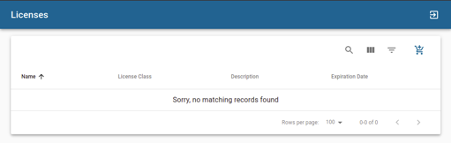

- Go to the Cybus Portal and enter your Username and Password.

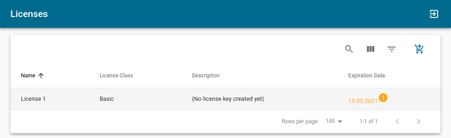

- Select Licenses from the menu. This will take you to an overview of all Connectware licenses currently available to you.

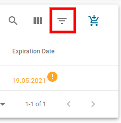

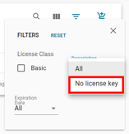

- Select the license you want to use to install Connectware by clicking on that license. Preferably, select the one whose description specifies No license key created yet. Furthermore, you can apply a filter and select No license key in the description.

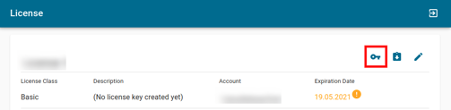

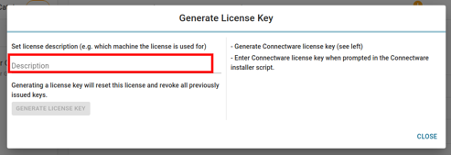

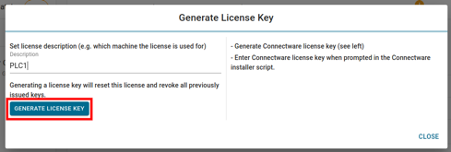

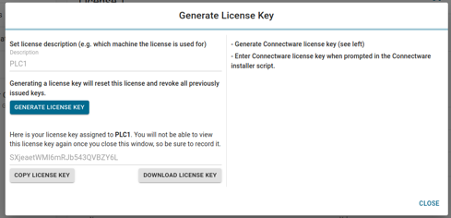

- Click Download License Key in the upper right corner.

- You can now generate a license key by setting a description for the license and clicking on Generate License Key. The generated license key will be displayed and can be copied to the clipboard for later use. Make sure to keep the license key, as it cannot be viewed again after closing the window. If the key is lost, you have to repeat this step to create a new license key for the selected appliance, which invalidates any old license key, including one that may already be deployed. You are now prepared for the installation.

Installing Cybus Connectware

Installing Connectware is made easy using the prebuilt installer script provided by Cybus. To download and use the script follow the steps below.

Prepare Installer Script

- Download the installer script for the desired version of Connectware on your host system. This can be found at download.cybus.io/<VERSION>/connectware-online-installer.sh. In the example below we will use the latest version of Connectware.

$ wget -O ./connectware-online-installer.sh https://download.cybus.io/latest/connectware-online-installer.sh

- Optional: It is a security risk to run bash scripts downloaded directly from the web, so feel free to open the installer script and check its contents.

$ cat connectware-online-installer.sh

- Make the script executable so that it can be run.

$ chmod +x ./connectware-online-installer.sh

The installer script is now ready to be run.

First Time Installation

- Run the installer script. Note that the installer script does not need sudo privileges unless you want to run Connectware as a systemd service (recommended) or the installation directory requires additional permissions. In this example we will install Connectware as a systemd service, so we run it with sudo.

$ sudo ./connectware-online-installer.sh

- The version of Connectware will be printed with a greeting. Please confirm that the version is correct and press enter.

- You will now be asked for your desired target directory for installation. In this example we will press enter to go with the default path: /opt/connectware

- The installer script will then run preflight checks to make sure that all system requirements are met. If they are not, check the prerequisites section at the beginning of this lesson.

If all requirements are met then you should see the following output.

Running preflight checks.

=========================

Validating write permission to installation location /opt/connectware: [OK]

Checking whether this system has systemd: [YES]

Validating required utility installation: [OK]

Validating Cybus docker-registry connection: [OK]

Validating Docker installation: [OK]

Validating Docker Compose installation: [OK]

Validating that no former Connectware is running: [OK]

Preflight checks finished successfully!

- You will now be prompted for your Cybus license key. If your credentials are valid then you should see the following output.

Verifying license key...

Login succeeded.

- You will now be asked whether you would like to boot Connectware as a system service. Without this, the application must be started by hand every time the host system starts. In this example we will press enter to confirm yes.

- The installer script will then print your chosen options and ask for confirmation that they are correct.

If you followed this example then you should see the following.

Please review and confirm the following Connectware configuration:

------------------------------------------------------------------

Connectware license key: [VALID]

Installation directory: /opt/connectware

Autostart as systemd service: true

Accept configuration? [Y/n]

- The Connectware container images will then be pulled onto your system. After they are successfully pulled, the installer script will check for old container images of previous Connectware versions, which can safely be removed ("pruned") to free up disk space. The installer will ask for confirmation before any container images are removed. In this example the question looks as follows.

-----------------------------------------

Removing old Docker images

-----------------------------------------

The following Docker images are from previous Connectware versions and can be removed:

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.cybus.io/cybus/admin-web-app *********** e561383a5 24 hours ago 21.5MB

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.cybus.io/cybus/auth-server *********** a65b7f32f 24 hours ago 165MB

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.cybus.io/cybus/broker *********** 80dd0fb24 24 hours ago 56.7MB

(...)

-----------------------------------------

Should the above docker images be removed from your local computer (pruned)? [Y/n]

- After confirming your choice, you can observe the result of the image removal operation. As the last step, the installer will print a message telling you that Connectware has been started or which commands you need to enter to run or stop Connectware.

In our example you should be prompted with the following.

Successfully installed Connectware!

===================================

You can find the installation directory at /opt/connectware.

In order to stop type:

systemctl stop connectware
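If one of the preflight checks from the installation steps above fails, it can help to reproduce a few of them by hand. The following is a minimal sketch and not the installer's actual logic: the helper names check_tool and check_writable and the [MISSING] and [FAILED] labels are made up for illustration, and only the write-permission, Docker and Docker Compose checks are approximated.

```shell
# Hypothetical helper: report whether a required command is on PATH.
check_tool() {
  if command -v "$1" >/dev/null 2>&1; then
    printf 'Validating %s installation: [OK]\n' "$1"
  else
    printf 'Validating %s installation: [MISSING]\n' "$1"
    return 1
  fi
}

# Hypothetical helper: report whether the installation location is writable.
# If the directory does not exist yet, its parent directory is checked instead.
check_writable() {
  target="$1"
  if [ ! -d "$target" ]; then
    target=$(dirname "$target")
  fi
  if [ -w "$target" ]; then
    printf 'Write permission to %s: [OK]\n' "$1"
  else
    printf 'Write permission to %s: [FAILED]\n' "$1"
    return 1
  fi
}

# Run the checks; "|| true" keeps going so that every result is printed.
check_writable /opt/connectware || true
check_tool docker || true
check_tool docker-compose || true
```

A failed line points to the prerequisite that needs attention before the installer is run again.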

Running the Installation in Silent Mode

Running the installer with the -s or --silent flag will start the installation in an automated mode without the need of user interaction.

To use this way of deploying Connectware the license key has to be supplied using the --license-key flag.

This deploys Connectware in a minimal configuration to the default /opt/connectware directory without installing the systemd service.

You can further personalize your installation using the supported installation flags. To see a full list of options just run the installation script with the --help flag.
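As an illustration of the flags described above, the following sketch assembles a silent-mode command line and prints it instead of running it. The script name connectware-online-installer.sh is the one used elsewhere in this lesson; the function name silent_install_cmd is hypothetical, and passing -d during a fresh silent installation is an assumption that should be confirmed with --help.

```shell
INSTALLER="./connectware-online-installer.sh"

# Print (do not execute) the silent-mode installer command.
#   $1: Connectware license key (required in silent mode)
#   $2: installation directory (optional; assumed to be accepted via -d)
silent_install_cmd() {
  license_key="$1"
  install_dir="${2:-}"
  cmd="$INSTALLER --silent --license-key $license_key"
  if [ -n "$install_dir" ]; then
    cmd="$cmd -d $install_dir"
  fi
  printf '%s\n' "$cmd"
}

# Show the command for the default installation directory.
silent_install_cmd "<YOUR-LICENSE-KEY>"
```

Printing the command first makes it easy to review it in a provisioning script before it is actually executed.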

Updating from an Older Version

Updating an existing Connectware installation is just as easy as installing a new one.

All you need to do is obtain the new installer script by following the steps described in the chapter Prepare Installer Script.

To upgrade an existing installation just choose the same folder your Connectware is currently running in.

All your custom settings like license key or network settings will automatically be migrated.

If you are asked for your license key during the update, you might have specified a wrong installation directory. If this is the case, please cancel the update and ensure you choose the correct installation path.

If your existing Connectware installation was performed with elevated privileges, remember to run the update using sudo as well.

Updating in Silent Mode

The easiest way to update to a newer version is to run the update in silent mode. All you have to do is to start the installer script using the silent -s and directory -d flags.

$ ./connectware-online-installer.sh -s -d <PATH/TO/YOUR/CONNECTWARE/FOLDER>

Changing SSL Certificates

In case you need to update or change the certificate files (for example if you renewed them using certbot with Let’s Encrypt or want to add a self-signed certificate) you can do this by copying them to Connectware:

$ docker cp -L <path-to/your-key-file.key> <your-connectware-container>:/connectware_certs/cybus_server.key

$ docker cp -L <path-to/your-cert-file.crt> <your-connectware-container>:/connectware_certs/cybus_server.crt

The name of your Connectware container depends on the directory it was installed to and is rendered as <your-connectware-directory>_connectware_1. By default Connectware is installed to /opt/connectware/, which results in the container name connectware_connectware_1.

Restart the Connectware proxy to apply the changed files.

$ docker restart <your-connectware-container>
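The container naming rule described above can be sketched as a small shell helper. This is only an illustration: the function name connectware_container_name is hypothetical, and it assumes the last component of the installation path is used unchanged as the name prefix (for example, that it is already lowercase).

```shell
# Derive the container name <your-connectware-directory>_connectware_1
# from the installation directory (default: /opt/connectware).
connectware_container_name() {
  install_dir="${1:-/opt/connectware}"
  # basename ignores a trailing slash, so /opt/connectware/ also works.
  printf '%s_connectware_1\n' "$(basename "$install_dir")"
}

connectware_container_name /opt/connectware/
```

The printed name can then be used in place of <your-connectware-container> in the docker cp and docker restart commands.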

Removing Connectware

Removing Connectware from your system is a manual process. Follow the steps below in your terminal to remove Connectware.

- Stop the running Connectware instance. If the Connectware systemd service has been installed, simply run the command systemctl stop connectware. Otherwise change into your installation directory and manually stop the running instance with docker-compose down.

- Optional: Remove the systemd service. If the systemd service was deployed, it has to be stopped and disabled before it can be removed:

$ systemctl stop connectware

$ systemctl disable connectware

$ rm /etc/systemd/system/connectware.service

- Remove the installation directory. Now that Connectware has been stopped, you can remove all local files contained in your installation directory.

$ rm -rf /opt/connectware

- Clean up Docker artifacts. The final step is to remove all remaining Docker artifacts that are still on your system.

List all images, containers, volumes and networks:

$ docker images -a

$ docker container ls -a

$ docker volume ls

$ docker network ls

To remove a specific container:

$ docker container rm <CONTAINER-NAME>

To remove a specific image:

$ docker image rm <IMAGE-NAME>

To remove a specific volume:

$ docker volume rm <VOLUME-NAME>

To remove a specific network:

$ docker network rm <NETWORK-NAME>

If you have no Docker applications other than Connectware running on your system, you can also simply remove all artifacts by running

$ docker system prune -a -f

Please keep in mind that this will remove all currently unused Docker resources, not only those created by Connectware.
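Because a system-wide prune affects every unused resource, it can be safer to first list only the images that belong to Connectware. The following is a minimal sketch, assuming that all Connectware images share the registry.cybus.io/ prefix seen in the installer output earlier in this lesson; the function name filter_cybus_images is hypothetical.

```shell
# Read image names (one per line) from stdin and keep only those whose
# repository starts with the Cybus registry prefix.
# "|| true" keeps the exit status at 0 when nothing matches.
filter_cybus_images() {
  grep '^registry\.cybus\.io/' || true
}

# Usage (requires Docker, so it is shown as a comment only):
#   docker images --format '{{.Repository}}:{{.Tag}}' | filter_cybus_images
```

The resulting names can be reviewed and then passed one by one to docker image rm, leaving images of other applications untouched.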

Using Connectware

After following all the steps above, Connectware is now installed. You can access the Admin UI by opening your browser and entering the host’s IP address directly: https://<HOST-IP>.

The initial login credentials are

Username: admin

Password: admin

If you would like to learn more about how to use Connectware, check out our docs at https://docs.cybus.io/ or see more guides here.

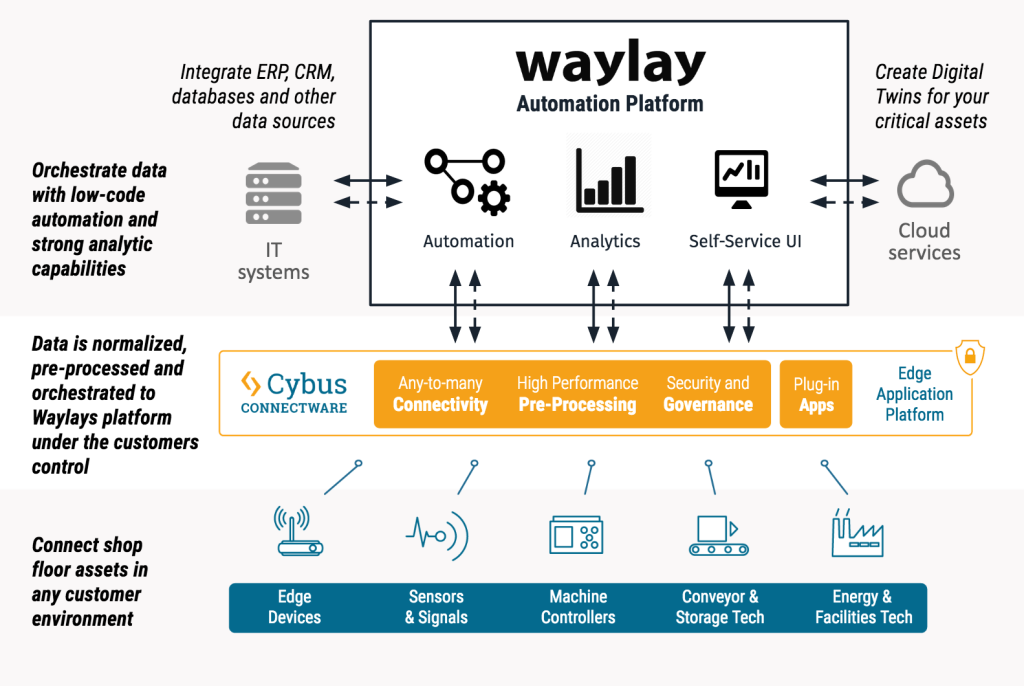

Combining the Waylay Automation Platform with Cybus Connectware

Introduction

With the combined solution of Cybus Connectware and the Waylay Automation Platform, business users and IT professionals can build their own smart industrial use cases.

The following video demonstrates how three use cases can be realized by combining Cybus Connectware and the Waylay Automation Platform.

What You Learn in this Video

The demonstration video delivers not only insights into both platforms and their user interface, but also delivers a step-by-step guide for three use cases. It is shown how to create:

1. Transparency in customer’s shop floor

2. Prediction of critical coolant level

3. Realization of a service level agreement based on machine data

For these use cases, the Cybus Connectware enables a connection of two milling machines from different generations (Modbus TCP and OPC UA) to extract real-time data. Temperature as well as coolant liquid level will be scanned. With the Connectware, the data is normalized and a common information model is created.

To provide the data to Waylay’s Automation Platform, it is made available via MQTT.

The Waylay Automation Platform then visualizes the machine data by offering a user-friendly dashboard. The demo video also shows how to create a business logic and the workflow needed for the three use cases with the Waylay Automation Platform.

Live Demo of Cybus Connectware

We know how unique the use cases and technical infrastructure of each company are. We therefore invite you for a live demo that picks up your current situation or your future goals. Get your live demo to find out what Cybus Connectware adds to your company:

Prerequisites

This lesson assumes that you want to set up an OPC Unified Architecture (a.k.a. OPC UA) server as an integrated Connectware resource which other clients can connect to. To understand the basic concepts of Connectware, please take a look at the Technical Overview lesson.

To follow along with the example, you will need a running instance of the Connectware 1.0.18 or later. In case you do not have that, learn How to install the Connectware.

In this article we will create a Connectware service which configures and enables the OPC UA server. If you are new to services and creating commissioning files, read our article about Service Basics. If you would like to set up the Connectware as an OPC UA client, please view the article How to Connect to an OPC UA Server.

Introduction

This article will teach you how to use the Connectware OPC UA server resource in your system setup. In more detail, the following topics are covered:

- Including the server definition in your commissioning file

- Defining nodes and mappings

- Starting up the OPC UA server

- Using an external OPC UA client to connect to the server

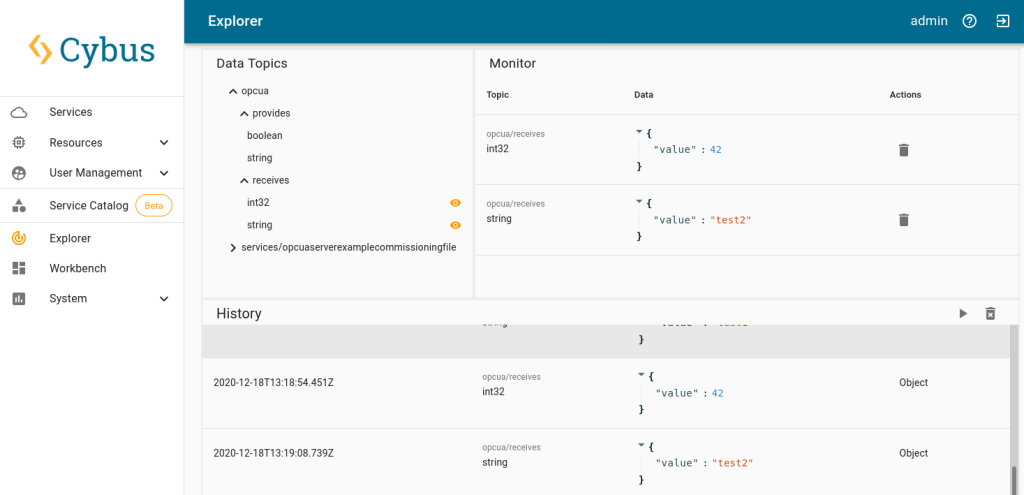

Service Commissioning File Example

You can download the service commissioning file that we use in this example from the Cybus GitHub repository.

Selecting the Tools

We will use Prosys OPC UA Client for testing in this guide. However, it is up to you to decide which tool to work with. You can use FreeOpcUa’s Simple OPC UA GUI client, which is open source and available for all major operating systems. If you feel more comfortable working in the terminal, go for Etienne Rossignon’s opcua-commander. In case you prefer online tools, try One-Way Automation’s OPC UA Web Client. It is free to use, but you will be asked to sign up first and you will not be able to connect to servers on your local network.

About the Connectware OPC UA Server

Since the release of version 1.0.18, Connectware supports a new type of resource that can be utilized in services: the server resource enables services to run servers within Connectware, and the first protocol supported by this resource is OPC UA. Thus, you can set up an OPC UA server, which can be used to receive data from or provide data to devices or applications, mainly in industrial environments. Being fully integrated into Connectware, this feature reduces the overhead of selecting, deploying, maintaining and integrating separate software to meet this demand in your system.

The OPC UA server is internally connected to Connectware's protocol mapper, which means that you can map your data from any other protocol supported by Connectware directly onto data nodes of the OPC UA server. In the service commissioning file, OPC UA server nodes can be handled just like any other endpoint. Therefore, you can use them in mappings as usual by simply defining the data source and target.

Writing the Commissioning File

The Commissioning File contains all resource definitions and is read by Connectware. To understand the file’s anatomy in detail, please consult our Reference docs.

Start by opening a text editor and creating a new file, e.g. opcua-server-example-commissioning-file.yml. The commissioning file is in the YAML format, perfectly readable for both humans and machines! We will now go through the process of defining the required sections for this example.

Description and Metadata

These sections contain more general information about the commissioning file. You can give a short description and add a stack of metadata. As for metadata, only the name is required, while the rest is optional. We will use the following set of information for this lesson:

description: >
  OPC UA Server Example Commissioning File
  Cybus Learn - How to set up the integrated Connectware OPC UA server
  https://www.cybus.io/learn/how-to-set-up-the-integrated-connectware-opc-ua-server/

metadata:
  name: OPC UA Server Example Commissioning File
  version: 1.0.0
  icon: https://www.cybus.io/wp-content/uploads/2019/03/Cybus-logo-Claim-lang.svg
  provider: cybus
  homepage: https://www.cybus.io

Resources